sync code from commit ef184dd6b06bcbce8f9ec35a5811ce2a6254b43b

This commit is contained in:

parent

8948b02af6

commit

1bfb7568ea

|

|

@ -0,0 +1,28 @@

|

||||||

|

# Python

|

||||||

|

__pycache__/

|

||||||

|

*.pyc

|

||||||

|

*.pyo

|

||||||

|

*.pyd

|

||||||

|

*.pyi

|

||||||

|

*.pyw

|

||||||

|

*.egg-info/

|

||||||

|

dist/

|

||||||

|

build/

|

||||||

|

*.egg

|

||||||

|

*.eggs

|

||||||

|

*.whl

|

||||||

|

|

||||||

|

# Virtual Environment

|

||||||

|

venv/

|

||||||

|

env/

|

||||||

|

ENV/

|

||||||

|

|

||||||

|

# IDE

|

||||||

|

.vscode/

|

||||||

|

*.code-workspace

|

||||||

|

*.idea

|

||||||

|

|

||||||

|

# Miscellaneous

|

||||||

|

*.log

|

||||||

|

*.swp

|

||||||

|

.DS_Store

|

||||||

|

|

@ -0,0 +1,200 @@

|

||||||

|

Version 2.0, January 2004

|

||||||

|

http://www.apache.org/licenses/

|

||||||

|

|

||||||

|

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

||||||

|

|

||||||

|

1. Definitions.

|

||||||

|

|

||||||

|

"License" shall mean the terms and conditions for use, reproduction,

|

||||||

|

and distribution as defined by Sections 1 through 9 of this document.

|

||||||

|

|

||||||

|

"Licensor" shall mean the copyright owner or entity authorized by

|

||||||

|

the copyright owner that is granting the License.

|

||||||

|

|

||||||

|

"Legal Entity" shall mean the union of the acting entity and all

|

||||||

|

other entities that control, are controlled by, or are under common

|

||||||

|

control with that entity. For the purposes of this definition,

|

||||||

|

"control" means (i) the power, direct or indirect, to cause the

|

||||||

|

direction or management of such entity, whether by contract or

|

||||||

|

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

||||||

|

outstanding shares, or (iii) beneficial ownership of such entity.

|

||||||

|

|

||||||

|

"You" (or "Your") shall mean an individual or Legal Entity

|

||||||

|

exercising permissions granted by this License.

|

||||||

|

|

||||||

|

"Source" form shall mean the preferred form for making modifications,

|

||||||

|

including but not limited to software source code, documentation

|

||||||

|

source, and configuration files.

|

||||||

|

|

||||||

|

"Object" form shall mean any form resulting from mechanical

|

||||||

|

transformation or translation of a Source form, including but

|

||||||

|

not limited to compiled object code, generated documentation,

|

||||||

|

and conversions to other media types.

|

||||||

|

|

||||||

|

"Work" shall mean the work of authorship, whether in Source or

|

||||||

|

Object form, made available under the License, as indicated by a

|

||||||

|

copyright notice that is included in or attached to the work

|

||||||

|

(an example is provided in the Appendix below).

|

||||||

|

|

||||||

|

"Derivative Works" shall mean any work, whether in Source or Object

|

||||||

|

form, that is based on (or derived from) the Work and for which the

|

||||||

|

editorial revisions, annotations, elaborations, or other modifications

|

||||||

|

represent, as a whole, an original work of authorship. For the purposes

|

||||||

|

of this License, Derivative Works shall not include works that remain

|

||||||

|

separable from, or merely link (or bind by name) to the interfaces of,

|

||||||

|

the Work and Derivative Works thereof.

|

||||||

|

|

||||||

|

"Contribution" shall mean any work of authorship, including

|

||||||

|

the original version of the Work and any modifications or additions

|

||||||

|

to that Work or Derivative Works thereof, that is intentionally

|

||||||

|

submitted to Licensor for inclusion in the Work by the copyright owner

|

||||||

|

or by an individual or Legal Entity authorized to submit on behalf of

|

||||||

|

the copyright owner. For the purposes of this definition, "submitted"

|

||||||

|

means any form of electronic, verbal, or written communication sent

|

||||||

|

to the Licensor or its representatives, including but not limited to

|

||||||

|

communication on electronic mailing lists, source code control systems,

|

||||||

|

and issue tracking systems that are managed by, or on behalf of, the

|

||||||

|

Licensor for the purpose of discussing and improving the Work, but

|

||||||

|

excluding communication that is conspicuously marked or otherwise

|

||||||

|

designated in writing by the copyright owner as "Not a Contribution."

|

||||||

|

|

||||||

|

"Contributor" shall mean Licensor and any individual or Legal Entity

|

||||||

|

on behalf of whom a Contribution has been received by Licensor and

|

||||||

|

subsequently incorporated within the Work.

|

||||||

|

|

||||||

|

2. Grant of Copyright License. Subject to the terms and conditions of

|

||||||

|

this License, each Contributor hereby grants to You a perpetual,

|

||||||

|

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

||||||

|

copyright license to reproduce, prepare Derivative Works of,

|

||||||

|

publicly display, publicly perform, sublicense, and distribute the

|

||||||

|

Work and such Derivative Works in Source or Object form.

|

||||||

|

|

||||||

|

3. Grant of Patent License. Subject to the terms and conditions of

|

||||||

|

this License, each Contributor hereby grants to You a perpetual,

|

||||||

|

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

||||||

|

(except as stated in this section) patent license to make, have made,

|

||||||

|

use, offer to sell, sell, import, and otherwise transfer the Work,

|

||||||

|

where such license applies only to those patent claims licensable

|

||||||

|

by such Contributor that are necessarily infringed by their

|

||||||

|

Contribution(s) alone or by combination of their Contribution(s)

|

||||||

|

with the Work to which such Contribution(s) was submitted. If You

|

||||||

|

institute patent litigation against any entity (including a

|

||||||

|

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

||||||

|

or a Contribution incorporated within the Work constitutes direct

|

||||||

|

or contributory patent infringement, then any patent licenses

|

||||||

|

granted to You under this License for that Work shall terminate

|

||||||

|

as of the date such litigation is filed.

|

||||||

|

|

||||||

|

4. Redistribution. You may reproduce and distribute copies of the

|

||||||

|

Work or Derivative Works thereof in any medium, with or without

|

||||||

|

modifications, and in Source or Object form, provided that You

|

||||||

|

meet the following conditions:

|

||||||

|

|

||||||

|

(a) You must give any other recipients of the Work or

|

||||||

|

Derivative Works a copy of this License; and

|

||||||

|

|

||||||

|

(b) You must cause any modified files to carry prominent notices

|

||||||

|

stating that You changed the files; and

|

||||||

|

|

||||||

|

(c) You must retain, in the Source form of any Derivative Works

|

||||||

|

that You distribute, all copyright, patent, trademark, and

|

||||||

|

attribution notices from the Source form of the Work,

|

||||||

|

excluding those notices that do not pertain to any part of

|

||||||

|

the Derivative Works; and

|

||||||

|

|

||||||

|

(d) If the Work includes a "NOTICE" text file as part of its

|

||||||

|

distribution, then any Derivative Works that You distribute must

|

||||||

|

include a readable copy of the attribution notices contained

|

||||||

|

within such NOTICE file, excluding those notices that do not

|

||||||

|

pertain to any part of the Derivative Works, in at least one

|

||||||

|

of the following places: within a NOTICE text file distributed

|

||||||

|

as part of the Derivative Works; within the Source form or

|

||||||

|

documentation, if provided along with the Derivative Works; or,

|

||||||

|

within a display generated by the Derivative Works, if and

|

||||||

|

wherever such third-party notices normally appear. The contents

|

||||||

|

of the NOTICE file are for informational purposes only and

|

||||||

|

do not modify the License. You may add Your own attribution

|

||||||

|

notices within Derivative Works that You distribute, alongside

|

||||||

|

or as an addendum to the NOTICE text from the Work, provided

|

||||||

|

that such additional attribution notices cannot be construed

|

||||||

|

as modifying the License.

|

||||||

|

|

||||||

|

You may add Your own copyright statement to Your modifications and

|

||||||

|

may provide additional or different license terms and conditions

|

||||||

|

for use, reproduction, or distribution of Your modifications, or

|

||||||

|

for any such Derivative Works as a whole, provided Your use,

|

||||||

|

reproduction, and distribution of the Work otherwise complies with

|

||||||

|

the conditions stated in this License.

|

||||||

|

|

||||||

|

5. Submission of Contributions. Unless You explicitly state otherwise,

|

||||||

|

any Contribution intentionally submitted for inclusion in the Work

|

||||||

|

by You to the Licensor shall be under the terms and conditions of

|

||||||

|

this License, without any additional terms or conditions.

|

||||||

|

Notwithstanding the above, nothing herein shall supersede or modify

|

||||||

|

the terms of any separate license agreement you may have executed

|

||||||

|

with Licensor regarding such Contributions.

|

||||||

|

|

||||||

|

6. Trademarks. This License does not grant permission to use the trade

|

||||||

|

names, trademarks, service marks, or product names of the Licensor,

|

||||||

|

except as required for reasonable and customary use in describing the

|

||||||

|

origin of the Work and reproducing the content of the NOTICE file.

|

||||||

|

|

||||||

|

7. Disclaimer of Warranty. Unless required by applicable law or

|

||||||

|

agreed to in writing, Licensor provides the Work (and each

|

||||||

|

Contributor provides its Contributions) on an "AS IS" BASIS,

|

||||||

|

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

||||||

|

implied, including, without limitation, any warranties or conditions

|

||||||

|

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

||||||

|

PARTICULAR PURPOSE. You are solely responsible for determining the

|

||||||

|

appropriateness of using or redistributing the Work and assume any

|

||||||

|

risks associated with Your exercise of permissions under this License.

|

||||||

|

|

||||||

|

8. Limitation of Liability. In no event and under no legal theory,

|

||||||

|

whether in tort (including negligence), contract, or otherwise,

|

||||||

|

unless required by applicable law (such as deliberate and grossly

|

||||||

|

negligent acts) or agreed to in writing, shall any Contributor be

|

||||||

|

liable to You for damages, including any direct, indirect, special,

|

||||||

|

incidental, or consequential damages of any character arising as a

|

||||||

|

result of this License or out of the use or inability to use the

|

||||||

|

Work (including but not limited to damages for loss of goodwill,

|

||||||

|

work stoppage, computer failure or malfunction, or any and all

|

||||||

|

other commercial damages or losses), even if such Contributor

|

||||||

|

has been advised of the possibility of such damages.

|

||||||

|

|

||||||

|

9. Accepting Warranty or Additional Liability. While redistributing

|

||||||

|

the Work or Derivative Works thereof, You may choose to offer,

|

||||||

|

and charge a fee for, acceptance of support, warranty, indemnity,

|

||||||

|

or other liability obligations and/or rights consistent with this

|

||||||

|

License. However, in accepting such obligations, You may act only

|

||||||

|

on Your own behalf and on Your sole responsibility, not on behalf

|

||||||

|

of any other Contributor, and only if You agree to indemnify,

|

||||||

|

defend, and hold each Contributor harmless for any liability

|

||||||

|

incurred by, or claims asserted against, such Contributor by reason

|

||||||

|

of your accepting any such warranty or additional liability.

|

||||||

|

|

||||||

|

END OF TERMS AND CONDITIONS

|

||||||

|

|

||||||

|

APPENDIX: How to apply the Apache License to your work.

|

||||||

|

|

||||||

|

To apply the Apache License to your work, attach the following

|

||||||

|

boilerplate notice, with the fields enclosed by brackets "{}"

|

||||||

|

replaced with your own identifying information. (Don't include

|

||||||

|

the brackets!) The text should be enclosed in the appropriate

|

||||||

|

comment syntax for the file format. We also recommend that a

|

||||||

|

file or class name and description of purpose be included on the

|

||||||

|

same "printed page" as the copyright notice for easier

|

||||||

|

identification within third-party archives.

|

||||||

|

|

||||||

|

Copyright {yyyy} {name of copyright owner}

|

||||||

|

|

||||||

|

Licensed under the Apache License, Version 2.0 (the "License");

|

||||||

|

you may not use this file except in compliance with the License.

|

||||||

|

You may obtain a copy of the License at

|

||||||

|

|

||||||

|

http://www.apache.org/licenses/LICENSE-2.0

|

||||||

|

|

||||||

|

Unless required by applicable law or agreed to in writing, software

|

||||||

|

distributed under the License is distributed on an "AS IS" BASIS,

|

||||||

|

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||||

|

See the License for the specific language governing permissions and

|

||||||

|

limitations under the License.

|

||||||

|

|

@ -1,20 +1,21 @@

|

||||||

## 什么是 JYCache for Model?

|

|

||||||

|

|

||||||

JYCache for Model (简称 "jycache-model") 目标成为一款“小而美”的工具,帮助用户能够方便地从模型仓库下载、管理、分享模型文件。

|

# 什么是 HuggingFace FS ?

|

||||||

|

|

||||||

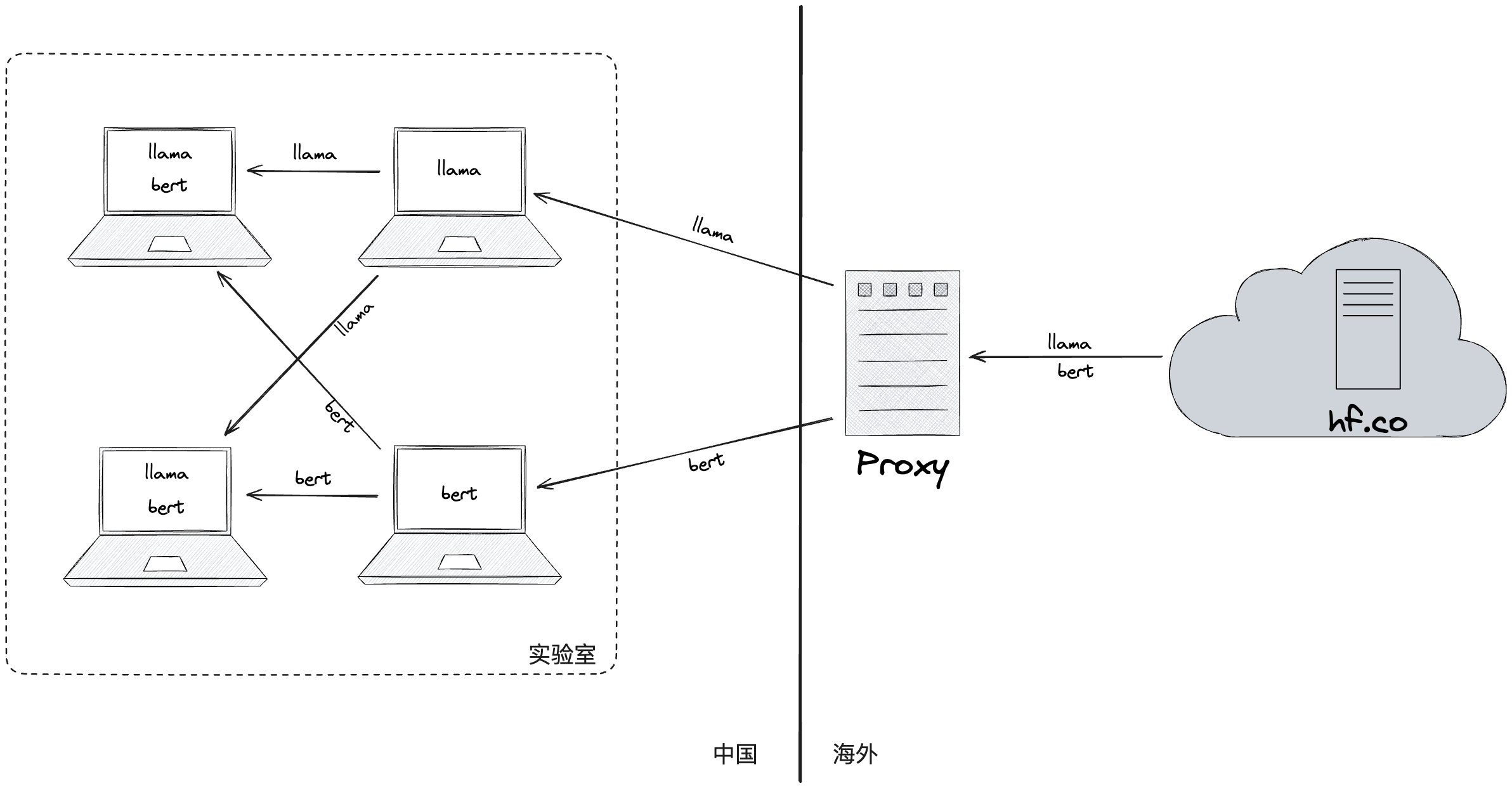

通常,模型文件很大,模型仓库的网络带宽不高而且不稳定,有些模型仓库需要设置代理或者使用镜像网站才能访问。因此,用户下载模型文件的时间往往很长。jycache-model 提供了 P2P 的模型共享方式,让用户能够以更快的速度获得所需要的模型。

|

HuggingFace FS (简称 "HFFS") 目标成为一款“小而美”的工具,帮助中国大陆用户在使用 [HuggingFace](huggingface.co) 的时候,能够方便地下载、管理、分享来自 HF 的模型。

|

||||||

|

|

||||||

|

中国大陆用户需要配置代理服务才能访问 HuggingFace 主站。大陆地区和 HuggingFace 主站之间的网络带宽较低而且不稳定,可靠的镜像网站很少,模型文件又很大。因此,下载 HuggingFace 上模型文件的时间往往很长。HFFS 在 hf.co 的基础上增加了 P2P 的模型共享方式,让大陆地区用户能够以更快的速度获得所需要的模型。

|

||||||

|

|

||||||

jycache-model 的典型使用场景有:

|

|

||||||

|

|

||||||

|

HFFS 的典型使用场景有:

|

||||||

|

|

||||||

- **同伴之间模型共享**:如果实验室或者开发部门的其他小伙伴已经下载了你需要的模型文件**,HFFS 的 P2P 共享方式能让你从他们那里得到模型,模型的下载速度不再是个头疼的问题。当然,如果目标模型还没有被其他人下载过,jycache-model 会自动从模型仓库下载模型,然后你可以通过 jycache-model 把模型分享给其他小伙伴。

|

- **同伴之间模型共享**:如果实验室或者开发部门的其他小伙伴已经下载了你需要的模型文件**,HFFS 的 P2P 共享方式能让你从他们那里得到模型,模型的下载速度不再是个头疼的问题。当然,如果目标模型还没有被其他人下载过,jycache-model 会自动从模型仓库下载模型,然后你可以通过 jycache-model 把模型分享给其他小伙伴。

|

||||||

- **机器之间模型传输**:有些小伙伴需要两台主机(Windows 和 Linux)完成模型的下载和使用:Windows 上的 VPN 很容易配置,所以它负责下载模型;Linux 的开发环境很方便,所以它负责模型的微调、推理等任务。通过 jycache-model 的 P2P 共享功能,两台主机之间的模型下载和拷贝就不再需要手动操作了。

|

- **机器之间模型传输**:有些小伙伴需要两台主机(Windows 和 Linux)完成模型的下载和使用:Windows 上的 VPN 很容易配置,所以它负责下载模型;Linux 的开发环境很方便,所以它负责模型的微调、推理等任务。通过 jycache-model 的 P2P 共享功能,两台主机之间的模型下载和拷贝就不再需要手动操作了。

|

||||||

- **多源断点续传**:浏览器默认不支持模型下载的断点续传,但是 jycache-model 支持该功能。无论模型文件从哪里下载(模型仓库或者其他同伴的机器),jycache-model 支持不同下载源之间的断点续传。

|

- **多源断点续传**:浏览器默认不支持模型下载的断点续传,但是 jycache-model 支持该功能。无论模型文件从哪里下载(模型仓库或者其他同伴的机器),jycache-model 支持不同下载源之间的断点续传。

|

||||||

|

|

||||||

## jycache-model 如何工作

|

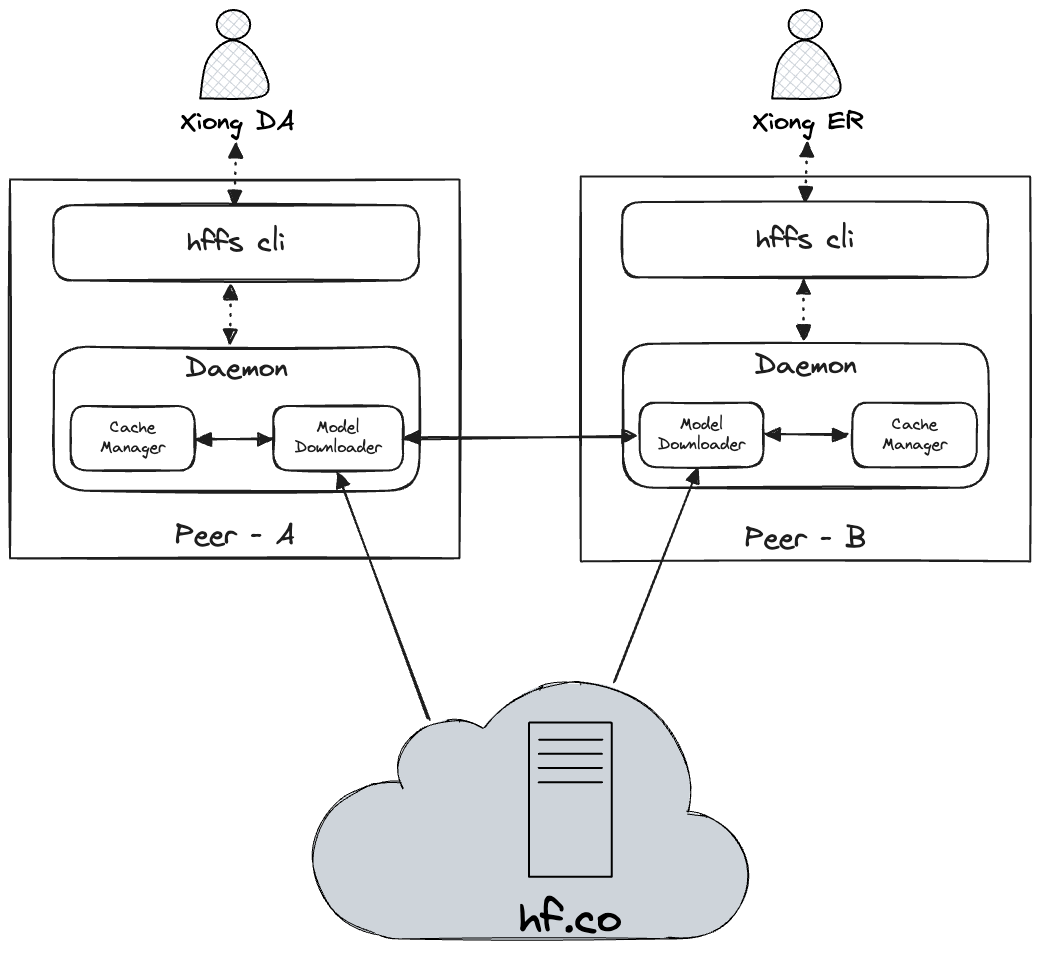

## HFFS 如何工作

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

1. 通过 `hffs daemon start` 命令启动 HFFS daemon 服务;

|

1. 通过 `hffs daemon start` 命令启动 HFFS daemon 服务;

|

||||||

2. 通过 `hffs peer add` 相关命令把局域网内其他机器作为 peer 和本机配对;

|

2. 通过 `hffs peer add` 相关命令把局域网内其他机器作为 peer 和本机配对;

|

||||||

|

|

@ -24,20 +25,19 @@ jycache-model 的典型使用场景有:

|

||||||

|

|

||||||

`hffs daemon`、`hffs peer`、`hffs model` 命令还包括其他的功能,请见下面的文档说明。

|

`hffs daemon`、`hffs peer`、`hffs model` 命令还包括其他的功能,请见下面的文档说明。

|

||||||

|

|

||||||

## 安装

|

## 安装 HFFS

|

||||||

|

|

||||||

> 注意:

|

> [!NOTE]

|

||||||

> 确保你安装了 Python 3.11+ 版本并且安装了 pip。

|

>

|

||||||

> 可以考虑使用 [Miniconda](https://docs.anaconda.com/miniconda/miniconda-install/) 安装和管理不同版本的 Python。

|

> - 确保你安装了 Python 3.11+ 版本并且安装了 pip。

|

||||||

> pip 的使用见 [这里](https://pip.pypa.io/en/stable/cli/pip_install/)。

|

> - 可以考虑使用 [Miniconda](https://docs.anaconda.com/miniconda/miniconda-install/) 安装和管理不同版本的 Python。

|

||||||

|

> - pip 的使用见 [这里](https://pip.pypa.io/en/stable/cli/pip_install/)。

|

||||||

|

|

||||||

```bash

|

```bash

|

||||||

|

|

||||||

pip install -i https://test.pypi.org/simple/ hffs

|

pip install -i https://test.pypi.org/simple/ hffs

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

## 命令行

|

## HFFS 命令

|

||||||

|

|

||||||

### HFFS Daemon 服务管理

|

### HFFS Daemon 服务管理

|

||||||

|

|

||||||

|

|

@ -57,10 +57,8 @@ hffs daemon stop

|

||||||

|

|

||||||

### Peer 管理

|

### Peer 管理

|

||||||

|

|

||||||

> 注意:

|

> [!NOTE]

|

||||||

> 关于自动 Peer 管理:为了提高易用性,HFFS 计划加入自动 Peer 管理功能(HFFS 自动发现、连接 Peer)。在该功能发布以前,用户可以通过下面的命令手动管理 Peer。

|

> 关于自动 Peer 管理:为了提高易用性,HFFS 计划加入自动 Peer 管理功能(HFFS 自动发现、连接 Peer)。在该功能发布以前,用户可以通过下面的命令手动管理 Peer。在 Unix-like 操作系统上,可以使用 [这里](https://www.51cto.com/article/720658.html) 介绍的 `ifconfig` 或者 `hostname` 命令行查找机器的 IP 地址。 在 Windows 操作系统上,可以使用 [这里](https://support.microsoft.com/zh-cn/windows/%E5%9C%A8-windows-%E4%B8%AD%E6%9F%A5%E6%89%BE-ip-%E5%9C%B0%E5%9D%80-f21a9bbc-c582-55cd-35e0-73431160a1b9) 介绍的方式找到机器的 IP 地址。

|

||||||

|

|

||||||

在 Unix-like 操作系统上,可以使用 [这里](https://www.51cto.com/article/720658.html) 介绍的 `ifconfig` 或者 `hostname` 命令行查找机器的 IP 地址。 在 Windows 操作系统上,可以使用 [这里](https://support.microsoft.com/zh-cn/windows/%E5%9C%A8-windows-%E4%B8%AD%E6%9F%A5%E6%89%BE-ip-%E5%9C%B0%E5%9D%80-f21a9bbc-c582-55cd-35e0-73431160a1b9) 介绍的方式找到机器的 IP 地址。

|

|

||||||

|

|

||||||

#### 添加 Peer

|

#### 添加 Peer

|

||||||

|

|

||||||

|

|

@ -83,7 +81,7 @@ hffs peer ls

|

||||||

|

|

||||||

在 Daemon 已经启动的情况下, Daemon 会定期查询其他 peer 是否在线。`hffs peer ls` 命令会把在线的 peer 标注为 "_active_"。

|

在 Daemon 已经启动的情况下, Daemon 会定期查询其他 peer 是否在线。`hffs peer ls` 命令会把在线的 peer 标注为 "_active_"。

|

||||||

|

|

||||||

> 注意:

|

> [!NOTE]

|

||||||

> 如果 peer 互通在 Windows 上出现问题,请检查:1. Daemon 是否已经启动,2. Windows 的防火墙是否打开(参见 [这里](https://support.microsoft.com/zh-cn/windows/%E5%85%81%E8%AE%B8%E5%BA%94%E7%94%A8%E9%80%9A%E8%BF%87-windows-defender-%E9%98%B2%E7%81%AB%E5%A2%99%E7%9A%84%E9%A3%8E%E9%99%A9-654559af-3f54-3dcf-349f-71ccd90bcc5c))

|

> 如果 peer 互通在 Windows 上出现问题,请检查:1. Daemon 是否已经启动,2. Windows 的防火墙是否打开(参见 [这里](https://support.microsoft.com/zh-cn/windows/%E5%85%81%E8%AE%B8%E5%BA%94%E7%94%A8%E9%80%9A%E8%BF%87-windows-defender-%E9%98%B2%E7%81%AB%E5%A2%99%E7%9A%84%E9%A3%8E%E9%99%A9-654559af-3f54-3dcf-349f-71ccd90bcc5c))

|

||||||

|

|

||||||

#### 删除 Peer

|

#### 删除 Peer

|

||||||

|

|

@ -96,67 +94,166 @@ hffs peer rm IP [--port PORT_NUM]

|

||||||

|

|

||||||

### 模型管理

|

### 模型管理

|

||||||

|

|

||||||

|

#### 添加模型

|

||||||

|

|

||||||

|

```bash

|

||||||

|

hffs model add REPO_ID [--file FILE] [--revision REVISION]

|

||||||

|

```

|

||||||

|

|

||||||

|

使用 HFFS 下载并管理指定的模型。

|

||||||

|

|

||||||

|

下载顺序为 peer $\rightarrow$ 镜像网站 $\rightarrow$ hf.co 原站;如果 peer 节点中并未找到目标模型,并且镜像网站(hf-mirror.com 等)和 hf.co 原站都无法访问(镜像网站关闭、原站由于代理设置不当而无法联通等原因),则下载失败。

|

||||||

|

|

||||||

|

参数说明

|

||||||

|

|

||||||

|

- `REPO_ID` 的 [相关文档](https://huggingface.co/docs/hub/en/api#get-apimodelsrepoid-or-apimodelsrepoidrevisionrevision)

|

||||||

|

- `FILE` 是模型文件相对 git root 目录的相对路径

|

||||||

|

- 该路径可以在 huggingface 的网页上查看

|

||||||

|

- 在执行添加、删除模型文件命令的时候,都需要使用该路径作为参数指定目标文件

|

||||||

|

- 例如,模型 [google/timesfm-1.0-200m](https://hf-mirror.com/google/timesfm-1.0-200m) 中 [checkpoint](https://hf-mirror.com/google/timesfm-1.0-200m/tree/main/checkpoints/checkpoint_1100000/state) 文件的路径为 `checkpoints/checkpoint_1100000/state`

|

||||||

|

- `REVISION` 的 [相关文档](https://huggingface.co/docs/hub/en/api#get-apimodelsrepoid-or-apimodelsrepoidrevisionrevision)

|

||||||

|

- revision 可以是 git 分支名称/ref(如 `main`, `master` 等),或是模型在 git 中提交时的 commit 哈希值

|

||||||

|

- 如果 revision 是 ref,HFFS 会把它映射成 commit 哈希值。

|

||||||

|

|

||||||

|

如果只提供了 `REPO_ID` 参数(没有制定 `FILE` 参数)

|

||||||

|

|

||||||

|

1. HFFS 会先从镜像网站(hf-mirror.com 等)或 hf.co 原站扫描 repo 中所有文件的文件列表;如果列表获取失败,则下载失败,HFFS 会在终端显示相关的失败原因

|

||||||

|

2. 成功获取文件列表后,HFFS 根据文件列表中的信息依次下载各个模型文件。

|

||||||

|

|

||||||

|

如果同时提供了 `REPO_ID` 参数和 `FILE` 参数,HFFS 和以 “peer $\rightarrow$ 镜像网站 $\rightarrow$ hf.co 原站”的顺序下载指定文件。

|

||||||

|

|

||||||

|

> [!NOTE] 什么时候需要使用 `FILE` 参数?

|

||||||

|

> 下列情况可以使用 `FILE` 参数

|

||||||

|

>

|

||||||

|

> 1. 只需要下载某些模型文件,而不是 repo 中所有文件

|

||||||

|

> 2. 用户自己编写脚本进行文件下载

|

||||||

|

> 3. 由于网络原因,终端无法访问 hf.co,但是浏览器可以访问 hf.co

|

||||||

|

|

||||||

#### 查看模型

|

#### 查看模型

|

||||||

|

|

||||||

```bash

|

```bash

|

||||||

hffs model ls [--repo_id REPO_ID] [--file FILE]

|

hffs model ls [--repo_id REPO_ID] [--file FILE]

|

||||||

```

|

```

|

||||||

|

|

||||||

扫描已经下载的模型。该命令返回如下信息:

|

扫描已经下载到 HFFS 中的模型。

|

||||||

|

|

||||||

- 如果没有指定 REPO_ID,返回 repo 列表

|

`REPO_ID` 和 `FILE` 参数的说明见 [[#添加模型]] 部分。

|

||||||

- `REPO_ID` 的 [相关文档](https://huggingface.co/docs/hub/en/api#get-apimodelsrepoid-or-apimodelsrepoidrevisionrevision)

|

|

||||||

- 如果制定了 REPO_ID,但是没有指定 FILE,返回 repo 中所有缓存的文件

|

该命令返回如下信息:

|

||||||

- `FILE` 是模型文件相对 git root 目录的相对路径,该路径可以在 huggingface 的网页上查看

|

|

||||||

- 在执行添加、删除模型文件命令的时候,都需要使用该路径作为参数指定目标文件;

|

- 如果没有指定 `REPO_ID`,返回 repo 列表

|

||||||

|

- 如果制定了 `REPO_ID`,但是没有指定 `FILE`,返回 repo 中所有缓存的文件

|

||||||

- 如果同时制定了 `REPO_ID` 和 `FILE`,返回指定文件在本机文件系统中的绝对路径

|

- 如果同时制定了 `REPO_ID` 和 `FILE`,返回指定文件在本机文件系统中的绝对路径

|

||||||

- 用户可以使用该绝对路径访问模型文件

|

- 用户可以使用该绝对路径访问模型文件

|

||||||

- 注意:在 Unix-like 的操作系统中,由于缓存内部使用了软连接的方式保存文件,目标模型文件的 git 路径以及文件系统中的路径别没有直接关系

|

- 注意:在 Unix-like 的操作系统中,由于缓存内部使用了软连接的方式保存文件,目标模型文件的 git 路径(即 `FILE` 值)和文件在本地的存放路径并没有直接关系

|

||||||

|

|

||||||

#### 搜索模型

|

|

||||||

|

|

||||||

```bash

|

|

||||||

hffs model search REPO_ID FILE [--revision REVISION]

|

|

||||||

```

|

|

||||||

|

|

||||||

搜索目标模型文件在哪些 peer 上已经存在。

|

|

||||||

|

|

||||||

- 如果模型还未下载到本地,从 peer 节点或者 hf.co 下载目标模型

|

|

||||||

- `REPO_ID` 参数说明见 `hffs model ls` 命令

|

|

||||||

- `FILE` 参数说明见 `hffs model ls` 命令

|

|

||||||

- `REVISION` 的 [相关文档](https://huggingface.co/docs/hub/en/api#get-apimodelsrepoid-or-apimodelsrepoidrevisionrevision)

|

|

||||||

|

|

||||||

#### 添加模型

|

|

||||||

|

|

||||||

```bash

|

|

||||||

hffs model add REPO_ID FILE [--revision REVISION]

|

|

||||||

```

|

|

||||||

|

|

||||||

下载指定的模型。

|

|

||||||

|

|

||||||

- 如果模型还未下载到本地,从 peer 节点或者 hf.co 下载目标模型

|

|

||||||

- `REPO_ID` 参数说明见 `hffs model ls` 命令

|

|

||||||

- `FILE` 参数说明见 `hffs model ls` 命令

|

|

||||||

- `REVISION` 参数说明见 `hffs model search` 命令

|

|

||||||

|

|

||||||

#### 删除模型

|

#### 删除模型

|

||||||

|

|

||||||

```bash

|

```bash

|

||||||

hffs model rm REPO_ID FILE [--revision REVISION]

|

hffs model rm REPO_ID [--file FILE] [--revision REVISION]

|

||||||

```

|

```

|

||||||

|

|

||||||

删除已经下载的模型数据。

|

删除 HFFS 下载的模型文件。

|

||||||

|

|

||||||

- 如果模型还未下载到本地,从 peer 节点或者 hf.co 下载目标模型

|

`REPO_ID`, `FILE`, 和 `REVISION` 参数的说明见 [[#添加模型]] 部分。

|

||||||

- `REPO_ID` 参数说明见 `hffs model ls` 命令

|

|

||||||

- `FILE` 参数说明见 `hffs model ls` 命令

|

|

||||||

- `REVISION` 的 [相关文档](https://huggingface.co/docs/hub/en/api#get-apimodelsrepoid-or-apimodelsrepoidrevisionrevision)

|

|

||||||

|

|

||||||

### 卸载管理

|

工作原理:

|

||||||

|

|

||||||

#### 卸载软件

|

- 如果没有指定 `REVISION` 参数,默认删除 `main` 中的模型文件,否则删除 `REVISION` 指定版本的文件;如果本地找不到匹配的 `REVISION` 值,则不删除任何文件

|

||||||

|

- 如果制定了 `FILE` 参数,只删除目标文件;如果没有指定 `FILE` 参数,删除整个 repo;如果本地找不到匹配的 `FILE`,则不删除任何文件

|

||||||

|

|

||||||

> 警告:

|

#### 导入模型

|

||||||

|

|

||||||

|

```bash

|

||||||

|

hffs model import SRC_PATH REPO_ID \

|

||||||

|

[--file FILE] \

|

||||||

|

[--revision REVISION] \

|

||||||

|

[--method IMPORT_METHOD]

|

||||||

|

```

|

||||||

|

|

||||||

|

将已经下载到本机的模型导入给 HFFS 管理。

|

||||||

|

|

||||||

|

交给 HFFS 管理模型的好处有:

|

||||||

|

|

||||||

|

1. 通过 [[#查看模型|hffs model ls 命令]] 查看本机模型文件的统计信息(文件数量、占用硬盘空间的大小等)

|

||||||

|

2. 通过 [[#删除模型|hffs model rm 命令]] 方便地删除模型文件、优化硬盘空间的使用率

|

||||||

|

3. 通过 HFFS 和其他 peer 节点分享模型文件

|

||||||

|

|

||||||

|

参数说明:

|

||||||

|

|

||||||

|

1. `REPO_ID`, `FILE`, 和 `REVISION` 参数的说明见 [[#添加模型]] 部分

|

||||||

|

2. `SRC_PATH` 指向待导入模型在本地的路径

|

||||||

|

3. `IMPORT_METHOD` 指定导入的方法,默认值是 `cp`(拷贝目标文件)

|

||||||

|

|

||||||

|

工作原理:

|

||||||

|

|

||||||

|

- HFFS 会把放在 `SRC_PATH` 中的模型导入到 HFFS 管理的 [[#工作目录管理|工作目录]] 中

|

||||||

|

- 如果 `SRC_PATH`

|

||||||

|

- 指向一个文件,则必须提供 `FILE` 参数,作为该文件在 repo 根目录下的相对路径

|

||||||

|

- 指向一个目录,则 `SRC_PATH` 会被看作 repo 的根目录,该目录下所有文件都会被导入 HFFS 的工作目录中,并保持原始目录结构;同时,`FILE` 参数的值会被忽略

|

||||||

|

- 如果 `REVISION`

|

||||||

|

- 没有指定,HFFS 内部会用 `0000000000000000000000000000000000000000` 作为文件的 revision 值,并创建 `main` ref 指向该 revision

|

||||||

|

- 是 40 位的 hash 值,HFFS 会使用该值作为文件的 revision 值,并创建 `main` ref 指向该 revision

|

||||||

|

- 是一个字符串,HFFS 会使用该值作为分支名称/ref,并将 revision 值设置为 `0000000000000000000000000000000000000000`,然后将 ref 指向这个 revision

|

||||||

|

- `IMPORT_METHOD` 有支持下列值

|

||||||

|

- `cp` (默认)- 拷贝目标文件

|

||||||

|

- `mv` - 拷贝目标文件,成功后删除原始文件

|

||||||

|

- `ln` - 在目标位置位原始文件创建连接(Windows 平台不支持)

|

||||||

|

|

||||||

|

#### 搜索模型

|

||||||

|

|

||||||

|

```bash

|

||||||

|

hffs model search REPO_ID [--file FILE] [--revision REVISION]

|

||||||

|

```

|

||||||

|

|

||||||

|

搜索 peer 节点,查看目标模型文件在哪些 peer 上已经存在。

|

||||||

|

|

||||||

|

`REPO_ID`, `FILE`, 和 `REVISION` 参数的说明见 [[#添加模型]] 部分。

|

||||||

|

|

||||||

|

工作原理:

|

||||||

|

|

||||||

|

- 如果没有指定 `REVISION` 参数,默认搜索 `main` 中的模型文件,否则搜索 `REVISION` 指定版本的文件

|

||||||

|

- 如果制定了 `FILE` 参数,只搜索目标模型文件;如果没有指定 `FILE` 参数,搜索和 repo 相关的所有文件

|

||||||

|

- HFFS 在终端中打印的结果包含如下信息:`peer-id:port,repo-id,file`

|

||||||

|

|

||||||

|

### 配置管理

|

||||||

|

|

||||||

|

#### 工作目录管理

|

||||||

|

|

||||||

|

HFFS 的工作目录中包括

|

||||||

|

|

||||||

|

- HFFS 的配置文件

|

||||||

|

- HFFS 所下载和管理的模型文件,以及其他相关文件

|

||||||

|

|

||||||

|

##### 工作目录设置

|

||||||

|

|

||||||

|

```bash

|

||||||

|

hffs conf cache set HOME_PATH

|

||||||

|

```

|

||||||

|

|

||||||

|

设置服务的工作目录,包括配置存放目录和文件下载目录

|

||||||

|

|

||||||

|

- `HOME_PATH` 工作目录的路径,路径必须已存在

|

||||||

|

|

||||||

|

##### 工作目录获取

|

||||||

|

|

||||||

|

```bash

|

||||||

|

hffs conf cache get

|

||||||

|

```

|

||||||

|

|

||||||

|

获取当前生效的工作目录。注意:此路径非 set 设置的路径,环境变量会覆盖 set 设置的路径。

|

||||||

|

|

||||||

|

##### 工作目录重置

|

||||||

|

|

||||||

|

```bash

|

||||||

|

hffs conf cache reset

|

||||||

|

```

|

||||||

|

|

||||||

|

恢复配置的工作目录路径为默认路径。注意:此操作无法重置环境变量的设置。

|

||||||

|

|

||||||

|

## 卸载 HFFS

|

||||||

|

|

||||||

|

> [!WARNING]

|

||||||

> 卸载软件将会清除所有添加的配置以及已下载的模型,无法恢复,请谨慎操作!

|

> 卸载软件将会清除所有添加的配置以及已下载的模型,无法恢复,请谨慎操作!

|

||||||

|

|

||||||

```bash

|

```bash

|

||||||

|

|

|

||||||

|

|

@ -1,10 +0,0 @@

|

||||||

[model]

|

|

||||||

download_dir="download"

|

|

||||||

|

|

||||||

[model.aria2]

|

|

||||||

exec_path=""

|

|

||||||

conf_path=""

|

|

||||||

|

|

||||||

[peer]

|

|

||||||

data_path="peers.json"

|

|

||||||

|

|

||||||

|

|

@ -1,83 +0,0 @@

|

||||||

#!/usr/bin/env python3

|

|

||||||

# -*- coding: utf-8 -*-

|

|

||||||

|

|

||||||

import psutil

|

|

||||||

import logging

|

|

||||||

import time

|

|

||||||

import shutil

|

|

||||||

import platform

|

|

||||||

import signal

|

|

||||||

import subprocess

|

|

||||||

import asyncio

|

|

||||||

|

|

||||||

from ..common.settings import HFFS_EXEC_NAME

|

|

||||||

from .http_client import get_service_status, post_stop_service

|

|

||||||

|

|

||||||

|

|

||||||

async def is_service_running():

|

|

||||||

try:

|

|

||||||

_ = await get_service_status()

|

|

||||||

return True

|

|

||||||

except ConnectionError:

|

|

||||||

return False

|

|

||||||

except Exception as e:

|

|

||||||

logging.info(f"If error not caused by service not start, may need check it! ERROR: {e}")

|

|

||||||

return False

|

|

||||||

|

|

||||||

|

|

||||||

async def stop_service():

|

|

||||||

try:

|

|

||||||

await post_stop_service()

|

|

||||||

logging.info("Service stopped success!")

|

|

||||||

except ConnectionError:

|

|

||||||

logging.info("Can not connect to service, may already stopped!")

|

|

||||||

except Exception as e:

|

|

||||||

raise SystemError(f"Failed to stop service! ERROR: {e}")

|

|

||||||

|

|

||||||

|

|

||||||

async def daemon_start(args):

|

|

||||||

if await is_service_running():

|

|

||||||

raise LookupError("Service already start!")

|

|

||||||

|

|

||||||

exec_path = shutil.which(HFFS_EXEC_NAME)

|

|

||||||

|

|

||||||

if not exec_path:

|

|

||||||

raise FileNotFoundError(HFFS_EXEC_NAME)

|

|

||||||

|

|

||||||

creation_flags = 0

|

|

||||||

|

|

||||||

if platform.system() in ["Linux"]:

|

|

||||||

# deal zombie process

|

|

||||||

signal.signal(signal.SIGCHLD, signal.SIG_IGN)

|

|

||||||

elif platform.system() in ["Windows"]:

|

|

||||||

creation_flags = subprocess.CREATE_NO_WINDOW

|

|

||||||

|

|

||||||

cmdline_daemon_false = "--daemon=false"

|

|

||||||

|

|

||||||

_ = subprocess.Popen([exec_path, "daemon", "start", "--port={}".format(args.port), cmdline_daemon_false],

|

|

||||||

stdin=subprocess.DEVNULL,

|

|

||||||

stdout=subprocess.DEVNULL,

|

|

||||||

stderr=subprocess.DEVNULL,

|

|

||||||

creationflags=creation_flags)

|

|

||||||

|

|

||||||

wait_start_time = 3

|

|

||||||

await asyncio.sleep(wait_start_time)

|

|

||||||

|

|

||||||

if await is_service_running():

|

|

||||||

logging.info("Daemon process started successfully")

|

|

||||||

else:

|

|

||||||

raise LookupError("Daemon start but not running, check service or retry!")

|

|

||||||

|

|

||||||

|

|

||||||

async def daemon_stop():

|

|

||||||

if not await is_service_running():

|

|

||||||

logging.info("Service not running, stop nothing!")

|

|

||||||

return

|

|

||||||

|

|

||||||

await stop_service()

|

|

||||||

|

|

||||||

wait_stop_time = 3

|

|

||||||

await asyncio.sleep(wait_stop_time)

|

|

||||||

|

|

||||||

if await is_service_running():

|

|

||||||

raise LookupError("Stopped service but still running, check service or retry!")

|

|

||||||

|

|

@ -1,188 +0,0 @@

|

||||||

import asyncio

|

|

||||||

import time

|

|

||||||

import os

|

|

||||||

|

|

||||||

import aiohttp

|

|

||||||

import aiohttp.client_exceptions

|

|

||||||

import logging

|

|

||||||

|

|

||||||

from ..common.peer import Peer

|

|

||||||

from huggingface_hub import hf_hub_url, get_hf_file_metadata

|

|

||||||

from ..common.settings import load_local_service_port, HFFS_API_PING, HFFS_API_PEER_CHANGE, HFFS_API_ALIVE_PEERS

|

|

||||||

from ..common.settings import HFFS_API_STATUS, HFFS_API_STOP

|

|

||||||

|

|

||||||

logger = logging.getLogger(__name__)

|

|

||||||

|

|

||||||

LOCAL_HOST = "127.0.0.1"

|

|

||||||

|

|

||||||

|

|

||||||

def timeout_sess(timeout=60):

|

|

||||||

return aiohttp.ClientSession(timeout=aiohttp.ClientTimeout(total=timeout))

|

|

||||||

|

|

||||||

|

|

||||||

async def ping(peer, timeout=15):

|

|

||||||

alive = False

|

|

||||||

seq = os.urandom(4).hex()

|

|

||||||

url = f"http://{peer.ip}:{peer.port}" + HFFS_API_PING + f"?seq={seq}"

|

|

||||||

|

|

||||||

logger.debug(f"probing {peer.ip}:{peer.port}, seq = {seq}")

|

|

||||||

|

|

||||||

try:

|

|

||||||

async with timeout_sess(timeout) as session:

|

|

||||||

async with session.get(url) as response:

|

|

||||||

if response.status == 200:

|

|

||||||

alive = True

|

|

||||||

except TimeoutError:

|

|

||||||

pass

|

|

||||||

except Exception as e:

|

|

||||||

logger.warning(e)

|

|

||||||

|

|

||||||

peer.set_alive(alive)

|

|

||||||

peer.set_epoch(int(time.time()))

|

|

||||||

|

|

||||||

status_msg = "alive" if alive else "dead"

|

|

||||||

logger.debug(f"Peer {peer.ip}:{peer.port} (seq:{seq}) is {status_msg}")

|

|

||||||

return peer

|

|

||||||

|

|

||||||

|

|

||||||

async def alive_peers(timeout=2):

|

|

||||||

port = load_local_service_port()

|

|

||||||

url = f"http://{LOCAL_HOST}:{port}" + HFFS_API_ALIVE_PEERS

|

|

||||||

peers = []

|

|

||||||

|

|

||||||

try:

|

|

||||||

async with timeout_sess(timeout) as session:

|

|

||||||

async with session.get(url) as response:

|

|

||||||

if response.status == 200:

|

|

||||||

peer_list = await response.json()

|

|

||||||

peers = [Peer.from_dict(peer) for peer in peer_list]

|

|

||||||

else:

|

|

||||||

err = f"Failed to get alive peers, HTTP status: {response.status}"

|

|

||||||

logger.error(err)

|

|

||||||

except aiohttp.client_exceptions.ClientConnectionError:

|

|

||||||

logger.warning("Prompt: connect local service failed, may not start, "

|

|

||||||

"execute hffs daemon start to see which peers are active")

|

|

||||||

except TimeoutError:

|

|

||||||

logger.error("Prompt: connect local service timeout, may not start, "

|

|

||||||

"execute hffs daemon start to see which peers are active")

|

|

||||||

except Exception as e:

|

|

||||||

logger.warning(e)

|

|

||||||

logger.warning("Connect service error, please check it, usually caused by service not start!")

|

|

||||||

|

|

||||||

return peers

|

|

||||||

|

|

||||||

|

|

||||||

async def search_coro(peer, repo_id, revision, file_name):

|

|

||||||

"""Check if a certain file exists in a peer's model repository

|

|

||||||

|

|

||||||

Returns:

|

|

||||||

Peer or None: if the peer has the target file, return the peer, otherwise None

|

|

||||||

"""

|

|

||||||

try:

|

|

||||||

async with timeout_sess(10) as session:

|

|

||||||

async with session.head(f"http://{peer.ip}:{peer.port}/{repo_id}/resolve/{revision}/{file_name}") as response:

|

|

||||||

if response.status == 200:

|

|

||||||

return peer

|

|

||||||

except Exception:

|

|

||||||

return None

|

|

||||||

|

|

||||||

|

|

||||||

async def do_search(peers, repo_id, revision, file_name):

|

|

||||||

tasks = []

|

|

||||||

|

|

||||||

def all_finished(tasks):

|

|

||||||

return all([task.done() for task in tasks])

|

|

||||||

|

|

||||||

async with asyncio.TaskGroup() as g:

|

|

||||||

for peer in peers:

|

|

||||||

coro = search_coro(peer, repo_id, revision, file_name)

|

|

||||||

tasks.append(g.create_task(coro))

|

|

||||||

|

|

||||||

while not all_finished(tasks):

|

|

||||||

await asyncio.sleep(1)

|

|

||||||

print(".", end="")

|

|

||||||

|

|

||||||

# add new line after the dots

|

|

||||||

print("")

|

|

||||||

|

|

||||||

return [task.result() for task in tasks if task.result() is not None]

|

|

||||||

|

|

||||||

|

|

||||||

async def search_model(peers, repo_id, file_name, revision):

|

|

||||||

if not peers:

|

|

||||||

logger.info("No active peers to search")

|

|

||||||

return []

|

|

||||||

|

|

||||||

logger.info("Will check the following peers:")

|

|

||||||

logger.info(Peer.print_peers(peers))

|

|

||||||

|

|

||||||

avails = await do_search(peers, repo_id, revision, file_name)

|

|

||||||

|

|

||||||

logger.info("Peers who have the model:")

|

|

||||||

logger.info(Peer.print_peers(avails))

|

|

||||||

|

|

||||||

return avails

|

|

||||||

|

|

||||||

|

|

||||||

async def get_model_etag(endpoint, repo_id, filename, revision='main'):

|

|

||||||

url = hf_hub_url(

|

|

||||||

repo_id=repo_id,

|

|

||||||

filename=filename,

|

|

||||||

revision=revision,

|

|

||||||

endpoint=endpoint

|

|

||||||

)

|

|

||||||

|

|

||||||

metadata = get_hf_file_metadata(url)

|

|

||||||

return metadata.etag

|

|

||||||

|

|

||||||

|

|

||||||

async def notify_peer_change(timeout=2):

|

|

||||||

try:

|

|

||||||

port = load_local_service_port()

|

|

||||||

except LookupError:

|

|

||||||

return

|

|

||||||

|

|

||||||

url = f"http://{LOCAL_HOST}:{port}" + HFFS_API_PEER_CHANGE

|

|

||||||

|

|

||||||

try:

|

|

||||||

async with timeout_sess(timeout) as session:

|

|

||||||

async with session.get(url) as response:

|

|

||||||

if response.status != 200:

|

|

||||||

logger.debug(f"Peer change http status: {response.status}")

|

|

||||||

except TimeoutError:

|

|

||||||

pass # silently ignore timeout

|

|

||||||

except aiohttp.client_exceptions.ClientConnectionError:

|

|

||||||

logger.error("Connect local service failed, please check service!")

|

|

||||||

except Exception as e:

|

|

||||||

logger.error(f"Peer change error: {e}")

|

|

||||||

logger.error("Please check the error, usually caused by local service not start!")

|

|

||||||

|

|

||||||

|

|

||||||

async def get_service_status():

|

|

||||||

port = load_local_service_port()

|

|

||||||

url = f"http://{LOCAL_HOST}:{port}" + HFFS_API_STATUS

|

|

||||||

timeout = 5

|

|

||||||

|

|

||||||

try:

|

|

||||||

async with timeout_sess(timeout) as session:

|

|

||||||

async with session.get(url) as response:

|

|

||||||

if response.status != 200:

|

|

||||||

raise ValueError(f"Server response not 200 OK! status: {response.status}")

|

|

||||||

else:

|

|

||||||

return await response.json()

|

|

||||||

except (TimeoutError, ConnectionError, aiohttp.client_exceptions.ClientConnectionError):

|

|

||||||

raise ConnectionError("Connect server failed or timeout")

|

|

||||||

|

|

||||||

|

|

||||||

async def post_stop_service():

|

|

||||||

port = load_local_service_port()

|

|

||||||

url = f"http://{LOCAL_HOST}:{port}" + HFFS_API_STOP

|

|

||||||

timeout = 5

|

|

||||||

|

|

||||||

try:

|

|

||||||

async with timeout_sess(timeout) as session:

|

|

||||||

async with session.post(url) as response:

|

|

||||||

if response.status != 200:

|

|

||||||

raise ValueError(f"Server response not 200 OK! status: {response.status}")

|

|

||||||

except (TimeoutError, ConnectionError, aiohttp.client_exceptions.ClientConnectionError):

|

|

||||||

raise ConnectionError("Connect server failed or timeout")

|

|

||||||

|

|

@ -0,0 +1,206 @@

|

||||||

|

"""Daemon client for connecting with (self or other) Daemons."""

|

||||||

|

|

||||||

|

from __future__ import annotations

|

||||||

|

|

||||||

|

import asyncio

|

||||||

|

import logging

|

||||||

|

import time

|

||||||

|

from contextlib import asynccontextmanager

|

||||||

|

from typing import AsyncContextManager, AsyncIterator, List

|

||||||

|

|

||||||

|

import aiohttp

|

||||||

|

from huggingface_hub import ( # type: ignore[import-untyped]

|

||||||

|

get_hf_file_metadata,

|

||||||

|

hf_hub_url,

|

||||||

|

)

|

||||||

|

|

||||||

|

from hffs.common.context import HffsContext

|

||||||

|

from hffs.common.api_settings import (

|

||||||

|

API_DAEMON_PEERS_ALIVE,

|

||||||

|

API_DAEMON_PEERS_CHANGE,

|

||||||

|

API_DAEMON_RUNNING,

|

||||||

|

API_DAEMON_STOP,

|

||||||

|

API_FETCH_FILE_CLIENT,

|

||||||

|

API_FETCH_REPO_FILE_LIST,

|

||||||

|

API_PEERS_PROBE,

|

||||||

|

TIMEOUT_DAEMON,

|

||||||

|

TIMEOUT_PEERS,

|

||||||

|

ApiType,

|

||||||

|

)

|

||||||

|

from hffs.common.peer import Peer

|

||||||

|

from hffs.common.repo_files import RepoFileList

|

||||||

|

|

||||||

|

logger = logging.getLogger(__name__)

|

||||||

|

|

||||||

|

|

||||||

|

HTTP_STATUS_OK = 200

|

||||||

|

|

||||||

|

|

||||||

|

def _http_session() -> aiohttp.ClientSession:

|

||||||

|

return aiohttp.ClientSession()

|

||||||

|

|

||||||

|

|

||||||

|

def _api_url(peer: Peer, api: ApiType) -> str:

|

||||||

|

return f"http://{peer.ip}:{peer.port}{api}"

|

||||||

|

|

||||||

|

|

||||||

|

@asynccontextmanager

|

||||||

|

async def _quiet_request(

|

||||||

|

sess: aiohttp.ClientSession,

|

||||||

|

req: AsyncContextManager,

|

||||||

|

) -> AsyncIterator[aiohttp.ClientResponse | None]:

|

||||||

|

try:

|

||||||

|

async with sess, req as resp:

|

||||||

|

yield resp

|

||||||

|

except (

|

||||||

|

aiohttp.ClientError,

|

||||||

|

asyncio.exceptions.TimeoutError,

|

||||||

|

TimeoutError,

|

||||||

|

ConnectionError,

|

||||||

|

RuntimeError,

|

||||||

|

) as e:

|

||||||

|

logger.debug("HTTP Error: %s", e)

|

||||||

|

yield None

|

||||||

|

|

||||||

|

|

||||||

|

@asynccontextmanager

|

||||||

|

async def _quiet_get(

|

||||||

|

url: str,

|

||||||

|

timeout: aiohttp.ClientTimeout,

|

||||||

|

) -> AsyncIterator[aiohttp.ClientResponse | None]:

|

||||||

|

sess = _http_session()

|

||||||

|

req = sess.get(url, timeout=timeout)

|

||||||

|

async with _quiet_request(sess, req) as resp:

|

||||||

|

try:

|

||||||

|

yield resp

|

||||||

|

except (OSError, ValueError, RuntimeError) as e:

|

||||||

|

logger.debug("Failed to get response: %s", e)

|

||||||

|

yield None

|

||||||

|

|

||||||

|

|

||||||

|

@asynccontextmanager

|

||||||

|

async def _quiet_head(

|

||||||

|

url: str,

|

||||||

|

timeout: aiohttp.ClientTimeout,

|

||||||

|

) -> AsyncIterator[aiohttp.ClientResponse | None]:

|

||||||

|

sess = _http_session()

|

||||||

|

req = sess.head(url, timeout=timeout)

|

||||||

|

async with _quiet_request(sess, req) as resp:

|

||||||

|

try:

|

||||||

|

yield resp

|

||||||

|

except (OSError, ValueError, RuntimeError) as e:

|

||||||

|

logger.debug("Failed to get response: %s", e)

|

||||||

|

yield None

|

||||||

|

|

||||||

|

|

||||||

|

async def ping(target: Peer) -> Peer:

|

||||||

|

"""Ping a peer to check if it is alive."""

|

||||||

|

url = _api_url(target, API_PEERS_PROBE)

|

||||||

|

async with _quiet_get(url, TIMEOUT_PEERS) as resp:

|

||||||

|

target.alive = resp is not None and resp.status == HTTP_STATUS_OK

|

||||||

|

target.epoch = int(time.time())

|

||||||

|

return target

|

||||||

|

|

||||||

|

|

||||||

|

async def stop_daemon() -> bool:

|

||||||

|

"""Stop a daemon service."""

|

||||||

|

url = _api_url(HffsContext.get_daemon(), API_DAEMON_STOP)

|

||||||

|

async with _quiet_get(url, TIMEOUT_DAEMON) as resp:

|

||||||

|

return resp is not None and resp.status == HTTP_STATUS_OK

|

||||||

|

|

||||||

|

|

||||||

|

async def is_daemon_running() -> bool:

|

||||||

|

"""Check if daemon is running."""

|

||||||

|

url = _api_url(HffsContext.get_daemon(), API_DAEMON_RUNNING)

|

||||||

|

async with _quiet_get(url, TIMEOUT_DAEMON) as resp:

|

||||||

|

return resp is not None and resp.status == HTTP_STATUS_OK

|

||||||

|

|

||||||

|

|

||||||

|

async def get_alive_peers() -> List[Peer]:

|

||||||

|

"""Get a list of alive peers."""

|

||||||

|

url = _api_url(HffsContext.get_daemon(), API_DAEMON_PEERS_ALIVE)

|

||||||

|

async with _quiet_get(url, TIMEOUT_DAEMON) as resp:

|

||||||

|

if not resp:

|

||||||

|

return []

|

||||||

|

return [Peer(**peer) for peer in await resp.json()]

|

||||||

|

|

||||||

|

|

||||||

|

async def notify_peers_change() -> bool:

|

||||||

|

"""Notify peers about a change in peer list."""

|

||||||

|

url = _api_url(HffsContext.get_daemon(), API_DAEMON_PEERS_CHANGE)

|

||||||

|

async with _quiet_get(url, TIMEOUT_DAEMON) as resp:

|

||||||

|

return resp is not None and resp.status == HTTP_STATUS_OK

|

||||||

|

|

||||||

|

|

||||||

|

async def check_file_exist(

|

||||||

|

peer: Peer,

|

||||||

|

repo_id: str,

|

||||||

|

file_name: str,

|

||||||

|

revision: str,

|

||||||

|

) -> tuple[Peer, bool]:

|

||||||

|

"""Check if the peer has target file."""

|

||||||

|

url = _api_url(

|

||||||

|

peer,

|

||||||

|

API_FETCH_FILE_CLIENT.format(

|

||||||

|

repo=repo_id,

|

||||||

|

revision=revision,

|

||||||

|

file_name=file_name,

|

||||||

|

),

|

||||||

|

)

|

||||||

|

async with _quiet_head(url, TIMEOUT_PEERS) as resp:

|

||||||

|

return (peer, resp is not None and resp.status == HTTP_STATUS_OK)

|

||||||

|

|

||||||

|

|

||||||

|

async def get_file_etag(

|

||||||

|

endpoint: str,

|

||||||

|

repo_id: str,

|

||||||

|

file_name: str,

|

||||||

|

revision: str,

|

||||||

|

) -> str | None:

|

||||||

|

"""Get the ETag of a file."""

|

||||||

|

url = hf_hub_url(

|

||||||

|

repo_id=repo_id,

|

||||||

|

filename=file_name,

|

||||||

|

revision=revision,

|

||||||

|

endpoint=endpoint,

|

||||||

|

)

|

||||||

|

|

||||||

|

try:

|

||||||

|

metadata = get_hf_file_metadata(url)

|

||||||

|

if metadata:

|

||||||

|

return metadata.etag

|

||||||

|

except (OSError, ValueError):

|

||||||

|

logger.debug(

|

||||||

|

"Failed to get ETag: %s, %s, %s, %s",

|

||||||

|

endpoint,

|

||||||

|

repo_id,

|

||||||

|

file_name,

|

||||||

|

revision,

|

||||||

|

)

|

||||||

|

return None

|

||||||

|

|

||||||

|

|

||||||

|

async def check_repo_exist() -> tuple[Peer, bool]:

|

||||||

|

"""Check if the peer has target model."""

|

||||||

|

raise NotImplementedError

|

||||||

|

|

||||||

|

|

||||||

|

async def get_repo_file_list(

|

||||||

|

peer: Peer,

|

||||||

|

repo_id: str,

|

||||||

|

revision: str,

|

||||||

|

) -> RepoFileList | None:

|

||||||

|

"""Load the target model from a peer."""

|

||||||

|

user, model = repo_id.strip().split("/")

|

||||||

|

url = _api_url(

|

||||||

|

peer,

|

||||||

|

API_FETCH_REPO_FILE_LIST.format(

|

||||||

|

user=user,

|

||||||

|

model=model,

|

||||||

|

revision=revision,

|

||||||

|

),

|

||||||

|

)

|

||||||

|

async with _quiet_get(url, TIMEOUT_PEERS) as resp:

|

||||||

|

if not resp or resp.status != HTTP_STATUS_OK:

|

||||||

|

return None

|

||||||

|

return await resp.json()

|

||||||

|

|

@ -0,0 +1,162 @@

|

||||||

|

"""Model management related commands."""

|

||||||

|

|

||||||

|

from __future__ import annotations

|

||||||

|

|

||||||

|

import logging

|

||||||

|

from typing import TYPE_CHECKING, List

|

||||||

|

|

||||||

|

from prettytable import PrettyTable

|

||||||

|

|

||||||

|

from hffs.client import model_controller

|

||||||

|

|

||||||

|

if TYPE_CHECKING:

|

||||||

|

from argparse import Namespace

|

||||||

|

|

||||||

|

from hffs.client.model_controller import FileInfo, RepoInfo

|

||||||

|

|

||||||

|

logger = logging.getLogger(__name__)

|

||||||

|

|

||||||

|

|

||||||

|

def _tablize(names: List[str], rows: List[List[str]]) -> None:

|

||||||

|

table = PrettyTable()

|

||||||

|

table.field_names = names

|

||||||

|

table.add_rows(rows)

|

||||||

|

logger.info(table)

|

||||||

|

|

||||||

|

|

||||||

|

def _tablize_files(files: List[FileInfo]) -> None:

|

||||||

|

if not files:

|

||||||

|

logger.info("No files found.")

|

||||||

|

else:

|

||||||

|

names = ["REFS", "COMMIT", "FILE", "SIZE", "PATH"]

|

||||||

|

rows = [

|

||||||

|

[

|

||||||

|

",".join(f.refs),

|

||||||

|

f.commit_8,

|

||||||

|

str(f.file_name),

|

||||||

|

str(f.size_on_disk_str),

|

||||||

|

str(f.file_path),

|

||||||

|

]

|

||||||

|

for f in files

|

||||||

|

]

|

||||||

|

_tablize(names, rows)

|

||||||

|

|

||||||

|

|

||||||

|

def _tablize_repos(repos: List[RepoInfo]) -> None:

|

||||||

|

if not repos:

|

||||||

|

logger.info("No repos found.")

|

||||||

|

else:

|

||||||

|

names = ["REPO ID", "SIZE", "NB FILES", "LOCAL PATH"]

|

||||||

|

rows = [

|

||||||

|

[

|

||||||

|

r.repo_id,

|

||||||

|

f"{r.size_str:>12}",

|

||||||

|

str(r.nb_files),

|

||||||

|

str(

|

||||||

|

r.repo_path,

|

||||||

|

),

|

||||||

|

]

|

||||||

|

for r in repos

|

||||||

|

]

|

||||||

|

_tablize(names, rows)

|

||||||

|

|

||||||

|

|

||||||

|

def _ls(args: Namespace) -> None:

|

||||||

|

if args.repo:

|

||||||

|

files = model_controller.file_list(args.repo)

|

||||||

|

_tablize_files(files)

|

||||||

|

else:

|

||||||

|

repos = model_controller.repo_list()

|

||||||

|

_tablize_repos(repos)

|

||||||

|

|

||||||

|

|

||||||

|

async def _add(args: Namespace) -> None:

|

||||||

|

if args.file is None and args.revision == "main":

|

||||||

|

msg = (

|

||||||

|

"In order to keep repo version integrity, when add a repo,"

|

||||||

|

"You must specify the commit hash (i.e. 8775f753) with -v option."

|

||||||

|

)

|

||||||

|

logger.info(msg)

|

||||||

|

return

|

||||||

|

|

||||||

|

if args.file:

|

||||||

|

target = f"File {args.repo}/{args.file}"

|

||||||

|

success = await model_controller.file_add(

|

||||||

|

args.repo,

|

||||||

|

args.file,

|

||||||

|

args.revision,

|

||||||

|

)

|

||||||

|

else:

|

||||||

|

target = f"Model {args.repo}"

|

||||||

|

success = await model_controller.repo_add(

|

||||||

|

args.repo,

|

||||||

|

args.revision,

|

||||||

|

)

|

||||||

|

|

||||||

|

if success:

|

||||||

|

logger.info("%s added.", target)

|

||||||

|

else:

|

||||||

|

logger.info("%s failed to add.", target)

|

||||||

|

|

||||||

|

|

||||||

|

def _rm(args: Namespace) -> None:

|

||||||

|

if args.file:

|

||||||

|

if not args.revision:

|

||||||

|

logger.info("Remove file failed, must specify the revision!")

|

||||||

|

return

|

||||||

|

|

||||||

|

target = "File"

|

||||||

|

success = model_controller.file_rm(

|

||||||

|

args.repo,

|

||||||

|

args.file,

|

||||||

|

args.revision,

|

||||||

|

)

|

||||||

|

else:

|

||||||

|

target = "Model"

|

||||||

|

success = model_controller.repo_rm(

|

||||||

|

args.repo,

|

||||||

|

args.revision,

|

||||||

|

)

|

||||||

|

|

||||||

|

if success:

|

||||||

|

logger.info("%s remove is done.", target)

|

||||||

|

else:

|

||||||

|

logger.info("%s failed to remove.", target)

|

||||||

|

|

||||||

|

|

||||||

|

async def _search(args: Namespace) -> None:

|

||||||

|

if args.file:

|

||||||

|

target = "file"

|

||||||

|

peers = await model_controller.file_search(

|

||||||

|

args.repo,

|

||||||

|

args.file,

|

||||||

|

args.revision,

|

||||||

|

)

|

||||||

|

else:

|

||||||

|

target = "model"

|

||||||

|

peers = await model_controller.repo_search()

|

||||||

|

|

||||||

|

if peers:

|

||||||

|

logger.info(

|

||||||

|

"Peers that have target %s:\n[%s]",

|

||||||

|

target,

|

||||||

|

",".join(

|

||||||

|

[f"{p.ip}:{p.port}" for p in peers],

|

||||||

|

),

|

||||||

|

)

|

||||||

|

else:

|

||||||

|

logger.info("NO peer has target %s.", target)

|

||||||

|

|

||||||

|

|

||||||

|

async def exec_cmd(args: Namespace) -> None:

|

||||||

|

"""Execute command."""

|

||||||

|

if args.model_command == "ls":

|

||||||

|

_ls(args)

|

||||||

|

elif args.model_command == "add":

|

||||||

|

await _add(args)

|

||||||

|

elif args.model_command == "rm":

|

||||||

|

_rm(args)

|

||||||

|

elif args.model_command == "search":

|

||||||

|

await _search(args)

|

||||||

|

else:

|

||||||

|

raise NotImplementedError

|

||||||

|

|

@ -0,0 +1,389 @@

|

||||||

|

"""Manage models."""

|

||||||

|

|

||||||

|

from __future__ import annotations

|

||||||

|

|

||||||

|

import asyncio

|

||||||

|

import logging

|

||||||

|

from dataclasses import dataclass, field

|

||||||

|

from typing import TYPE_CHECKING, Any, Coroutine, List, TypeVar

|

||||||

|

|

||||||

|

from huggingface_hub import hf_hub_download # type: ignore[import-untyped]

|

||||||

|

from huggingface_hub.utils import GatedRepoError # type: ignore[import-untyped]

|

||||||

|

|

||||||

|

from hffs.client import http_request as request

|

||||||

|

from hffs.common import hf_wrapper

|

||||||

|

from hffs.common.context import HffsContext

|

||||||

|

from hffs.common.etag import save_etag

|

||||||

|

from hffs.common.repo_files import RepoFileList, load_file_list, save_file_list

|

||||||

|

|

||||||

|

if TYPE_CHECKING:

|

||||||

|

from pathlib import Path

|

||||||

|

|

||||||

|

from hffs.common.peer import Peer

|

||||||

|

|

||||||

|

logger = logging.getLogger(__name__)

|

||||||

|

|

||||||

|

|

||||||

|

T = TypeVar("T")

|

||||||

|

|

||||||

|

|

||||||

|

async def _safe_gather(

|

||||||

|

tasks: List[Coroutine[Any, Any, T]],

|

||||||

|

) -> List[T]:

|

||||||

|

results = await asyncio.gather(*tasks, return_exceptions=True)

|

||||||

|

return [r for r in results if not isinstance(r, BaseException)]

|

||||||

|

|

||||||

|

|

||||||

|

async def file_search(

|

||||||

|

repo_id: str,

|

||||||

|

file_name: str,

|

||||||