first commit

This commit is contained in:

parent

863dc72da6

commit

ed5b16ab15

|

|

@ -1,355 +1,27 @@

|

||||||

# ---> VisualStudio

|

.idea/

|

||||||

## Ignore Visual Studio temporary files, build results, and

|

|

||||||

## files generated by popular Visual Studio add-ons.

|

|

||||||

##

|

|

||||||

## Get latest from https://github.com/github/gitignore/blob/master/VisualStudio.gitignore

|

|

||||||

|

|

||||||

# User-specific files

|

.markdown/

|

||||||

*.rsuser

|

|

||||||

*.suo

|

|

||||||

*.user

|

|

||||||

*.userosscache

|

|

||||||

*.sln.docstates

|

|

||||||

|

|

||||||

# User-specific files (MonoDevelop/Xamarin Studio)

|

outputs/*.csv

|

||||||

*.userprefs

|

|

||||||

|

|

||||||

# Mono auto generated files

|

outputs/predict_plot/*.jpg

|

||||||

mono_crash.*

|

|

||||||

|

|

||||||

# Build results

|

outputs/predict_plot/*.png

|

||||||

[Dd]ebug/

|

|

||||||

[Dd]ebugPublic/

|

|

||||||

[Rr]elease/

|

|

||||||

[Rr]eleases/

|

|

||||||

x64/

|

|

||||||

x86/

|

|

||||||

[Aa][Rr][Mm]/

|

|

||||||

[Aa][Rr][Mm]64/

|

|

||||||

bld/

|

|

||||||

[Bb]in/

|

|

||||||

[Oo]bj/

|

|

||||||

[Ll]og/

|

|

||||||

[Ll]ogs/

|

|

||||||

|

|

||||||

# Visual Studio 2015/2017 cache/options directory

|

outputs/predict_plot/*.tif

|

||||||

.vs/

|

|

||||||

# Uncomment if you have tasks that create the project's static files in wwwroot

|

|

||||||

#wwwroot/

|

|

||||||

|

|

||||||

# Visual Studio 2017 auto generated files

|

lightning_logs/

|

||||||

Generated\ Files/

|

|

||||||

|

|

||||||

# MSTest test Results

|

src/__pycache__/

|

||||||

[Tt]est[Rr]esult*/

|

|

||||||

[Bb]uild[Ll]og.*

|

|

||||||

|

|

||||||

# NUnit

|

.history

|

||||||

*.VisualState.xml

|

|

||||||

TestResult.xml

|

|

||||||

nunit-*.xml

|

|

||||||

|

|

||||||

# Build Results of an ATL Project

|

src/data/__pycache__/

|

||||||

[Dd]ebugPS/

|

|

||||||

[Rr]eleasePS/

|

|

||||||

dlldata.c

|

|

||||||

|

|

||||||

# Benchmark Results

|

src/metric/__pycache__/

|

||||||

BenchmarkDotNet.Artifacts/

|

|

||||||

|

|

||||||

# .NET Core

|

src/models/__pycache__/

|

||||||

project.lock.json

|

|

||||||

project.fragment.lock.json

|

|

||||||

artifacts/

|

|

||||||

|

|

||||||

# StyleCop

|

src/models/backbone/__pycache__/

|

||||||

StyleCopReport.xml

|

|

||||||

|

|

||||||

# Files built by Visual Studio

|

|

||||||

*_i.c

|

|

||||||

*_p.c

|

|

||||||

*_h.h

|

|

||||||

*.ilk

|

|

||||||

*.meta

|

|

||||||

*.obj

|

|

||||||

*.iobj

|

|

||||||

*.pch

|

|

||||||

*.pdb

|

|

||||||

*.ipdb

|

|

||||||

*.pgc

|

|

||||||

*.pgd

|

|

||||||

*.rsp

|

|

||||||

*.sbr

|

|

||||||

*.tlb

|

|

||||||

*.tli

|

|

||||||

*.tlh

|

|

||||||

*.tmp

|

|

||||||

*.tmp_proj

|

|

||||||

*_wpftmp.csproj

|

|

||||||

*.log

|

|

||||||

*.vspscc

|

|

||||||

*.vssscc

|

|

||||||

.builds

|

|

||||||

*.pidb

|

|

||||||

*.svclog

|

|

||||||

*.scc

|

|

||||||

|

|

||||||

# Chutzpah Test files

|

|

||||||

_Chutzpah*

|

|

||||||

|

|

||||||

# Visual C++ cache files

|

|

||||||

ipch/

|

|

||||||

*.aps

|

|

||||||

*.ncb

|

|

||||||

*.opendb

|

|

||||||

*.opensdf

|

|

||||||

*.sdf

|

|

||||||

*.cachefile

|

|

||||||

*.VC.db

|

|

||||||

*.VC.VC.opendb

|

|

||||||

|

|

||||||

# Visual Studio profiler

|

|

||||||

*.psess

|

|

||||||

*.vsp

|

|

||||||

*.vspx

|

|

||||||

*.sap

|

|

||||||

|

|

||||||

# Visual Studio Trace Files

|

|

||||||

*.e2e

|

|

||||||

|

|

||||||

# TFS 2012 Local Workspace

|

|

||||||

$tf/

|

|

||||||

|

|

||||||

# Guidance Automation Toolkit

|

|

||||||

*.gpState

|

|

||||||

|

|

||||||

# ReSharper is a .NET coding add-in

|

|

||||||

_ReSharper*/

|

|

||||||

*.[Rr]e[Ss]harper

|

|

||||||

*.DotSettings.user

|

|

||||||

|

|

||||||

# TeamCity is a build add-in

|

|

||||||

_TeamCity*

|

|

||||||

|

|

||||||

# DotCover is a Code Coverage Tool

|

|

||||||

*.dotCover

|

|

||||||

|

|

||||||

# AxoCover is a Code Coverage Tool

|

|

||||||

.axoCover/*

|

|

||||||

!.axoCover/settings.json

|

|

||||||

|

|

||||||

# Coverlet is a free, cross platform Code Coverage Tool

|

|

||||||

coverage*[.json, .xml, .info]

|

|

||||||

|

|

||||||

# Visual Studio code coverage results

|

|

||||||

*.coverage

|

|

||||||

*.coveragexml

|

|

||||||

|

|

||||||

# NCrunch

|

|

||||||

_NCrunch_*

|

|

||||||

.*crunch*.local.xml

|

|

||||||

nCrunchTemp_*

|

|

||||||

|

|

||||||

# MightyMoose

|

|

||||||

*.mm.*

|

|

||||||

AutoTest.Net/

|

|

||||||

|

|

||||||

# Web workbench (sass)

|

|

||||||

.sass-cache/

|

|

||||||

|

|

||||||

# Installshield output folder

|

|

||||||

[Ee]xpress/

|

|

||||||

|

|

||||||

# DocProject is a documentation generator add-in

|

|

||||||

DocProject/buildhelp/

|

|

||||||

DocProject/Help/*.HxT

|

|

||||||

DocProject/Help/*.HxC

|

|

||||||

DocProject/Help/*.hhc

|

|

||||||

DocProject/Help/*.hhk

|

|

||||||

DocProject/Help/*.hhp

|

|

||||||

DocProject/Help/Html2

|

|

||||||

DocProject/Help/html

|

|

||||||

|

|

||||||

# Click-Once directory

|

|

||||||

publish/

|

|

||||||

|

|

||||||

# Publish Web Output

|

|

||||||

*.[Pp]ublish.xml

|

|

||||||

*.azurePubxml

|

|

||||||

# Note: Comment the next line if you want to checkin your web deploy settings,

|

|

||||||

# but database connection strings (with potential passwords) will be unencrypted

|

|

||||||

*.pubxml

|

|

||||||

*.publishproj

|

|

||||||

|

|

||||||

# Microsoft Azure Web App publish settings. Comment the next line if you want to

|

|

||||||

# checkin your Azure Web App publish settings, but sensitive information contained

|

|

||||||

# in these scripts will be unencrypted

|

|

||||||

PublishScripts/

|

|

||||||

|

|

||||||

# NuGet Packages

|

|

||||||

*.nupkg

|

|

||||||

# NuGet Symbol Packages

|

|

||||||

*.snupkg

|

|

||||||

# The packages folder can be ignored because of Package Restore

|

|

||||||

**/[Pp]ackages/*

|

|

||||||

# except build/, which is used as an MSBuild target.

|

|

||||||

!**/[Pp]ackages/build/

|

|

||||||

# Uncomment if necessary however generally it will be regenerated when needed

|

|

||||||

#!**/[Pp]ackages/repositories.config

|

|

||||||

# NuGet v3's project.json files produces more ignorable files

|

|

||||||

*.nuget.props

|

|

||||||

*.nuget.targets

|

|

||||||

|

|

||||||

# Microsoft Azure Build Output

|

|

||||||

csx/

|

|

||||||

*.build.csdef

|

|

||||||

|

|

||||||

# Microsoft Azure Emulator

|

|

||||||

ecf/

|

|

||||||

rcf/

|

|

||||||

|

|

||||||

# Windows Store app package directories and files

|

|

||||||

AppPackages/

|

|

||||||

BundleArtifacts/

|

|

||||||

Package.StoreAssociation.xml

|

|

||||||

_pkginfo.txt

|

|

||||||

*.appx

|

|

||||||

*.appxbundle

|

|

||||||

*.appxupload

|

|

||||||

|

|

||||||

# Visual Studio cache files

|

|

||||||

# files ending in .cache can be ignored

|

|

||||||

*.[Cc]ache

|

|

||||||

# but keep track of directories ending in .cache

|

|

||||||

!?*.[Cc]ache/

|

|

||||||

|

|

||||||

# Others

|

|

||||||

ClientBin/

|

|

||||||

~$*

|

|

||||||

*~

|

|

||||||

*.dbmdl

|

|

||||||

*.dbproj.schemaview

|

|

||||||

*.jfm

|

|

||||||

*.pfx

|

|

||||||

*.publishsettings

|

|

||||||

orleans.codegen.cs

|

|

||||||

|

|

||||||

# Including strong name files can present a security risk

|

|

||||||

# (https://github.com/github/gitignore/pull/2483#issue-259490424)

|

|

||||||

#*.snk

|

|

||||||

|

|

||||||

# Since there are multiple workflows, uncomment next line to ignore bower_components

|

|

||||||

# (https://github.com/github/gitignore/pull/1529#issuecomment-104372622)

|

|

||||||

#bower_components/

|

|

||||||

|

|

||||||

# RIA/Silverlight projects

|

|

||||||

Generated_Code/

|

|

||||||

|

|

||||||

# Backup & report files from converting an old project file

|

|

||||||

# to a newer Visual Studio version. Backup files are not needed,

|

|

||||||

# because we have git ;-)

|

|

||||||

_UpgradeReport_Files/

|

|

||||||

Backup*/

|

|

||||||

UpgradeLog*.XML

|

|

||||||

UpgradeLog*.htm

|

|

||||||

ServiceFabricBackup/

|

|

||||||

*.rptproj.bak

|

|

||||||

|

|

||||||

# SQL Server files

|

|

||||||

*.mdf

|

|

||||||

*.ldf

|

|

||||||

*.ndf

|

|

||||||

|

|

||||||

# Business Intelligence projects

|

|

||||||

*.rdl.data

|

|

||||||

*.bim.layout

|

|

||||||

*.bim_*.settings

|

|

||||||

*.rptproj.rsuser

|

|

||||||

*- [Bb]ackup.rdl

|

|

||||||

*- [Bb]ackup ([0-9]).rdl

|

|

||||||

*- [Bb]ackup ([0-9][0-9]).rdl

|

|

||||||

|

|

||||||

# Microsoft Fakes

|

|

||||||

FakesAssemblies/

|

|

||||||

|

|

||||||

# GhostDoc plugin setting file

|

|

||||||

*.GhostDoc.xml

|

|

||||||

|

|

||||||

# Node.js Tools for Visual Studio

|

|

||||||

.ntvs_analysis.dat

|

|

||||||

node_modules/

|

|

||||||

|

|

||||||

# Visual Studio 6 build log

|

|

||||||

*.plg

|

|

||||||

|

|

||||||

# Visual Studio 6 workspace options file

|

|

||||||

*.opt

|

|

||||||

|

|

||||||

# Visual Studio 6 auto-generated workspace file (contains which files were open etc.)

|

|

||||||

*.vbw

|

|

||||||

|

|

||||||

# Visual Studio LightSwitch build output

|

|

||||||

**/*.HTMLClient/GeneratedArtifacts

|

|

||||||

**/*.DesktopClient/GeneratedArtifacts

|

|

||||||

**/*.DesktopClient/ModelManifest.xml

|

|

||||||

**/*.Server/GeneratedArtifacts

|

|

||||||

**/*.Server/ModelManifest.xml

|

|

||||||

_Pvt_Extensions

|

|

||||||

|

|

||||||

# Paket dependency manager

|

|

||||||

.paket/paket.exe

|

|

||||||

paket-files/

|

|

||||||

|

|

||||||

# FAKE - F# Make

|

|

||||||

.fake/

|

|

||||||

|

|

||||||

# CodeRush personal settings

|

|

||||||

.cr/personal

|

|

||||||

|

|

||||||

# Python Tools for Visual Studio (PTVS)

|

|

||||||

__pycache__/

|

|

||||||

*.pyc

|

|

||||||

|

|

||||||

# Cake - Uncomment if you are using it

|

|

||||||

# tools/**

|

|

||||||

# !tools/packages.config

|

|

||||||

|

|

||||||

# Tabs Studio

|

|

||||||

*.tss

|

|

||||||

|

|

||||||

# Telerik's JustMock configuration file

|

|

||||||

*.jmconfig

|

|

||||||

|

|

||||||

# BizTalk build output

|

|

||||||

*.btp.cs

|

|

||||||

*.btm.cs

|

|

||||||

*.odx.cs

|

|

||||||

*.xsd.cs

|

|

||||||

|

|

||||||

# OpenCover UI analysis results

|

|

||||||

OpenCover/

|

|

||||||

|

|

||||||

# Azure Stream Analytics local run output

|

|

||||||

ASALocalRun/

|

|

||||||

|

|

||||||

# MSBuild Binary and Structured Log

|

|

||||||

*.binlog

|

|

||||||

|

|

||||||

# NVidia Nsight GPU debugger configuration file

|

|

||||||

*.nvuser

|

|

||||||

|

|

||||||

# MFractors (Xamarin productivity tool) working folder

|

|

||||||

.mfractor/

|

|

||||||

|

|

||||||

# Local History for Visual Studio

|

|

||||||

.localhistory/

|

|

||||||

|

|

||||||

# BeatPulse healthcheck temp database

|

|

||||||

healthchecksdb

|

|

||||||

|

|

||||||

# Backup folder for Package Reference Convert tool in Visual Studio 2017

|

|

||||||

MigrationBackup/

|

|

||||||

|

|

||||||

# Ionide (cross platform F# VS Code tools) working folder

|

|

||||||

.ionide/

|

|

||||||

|

|

||||||

|

src/utils/__pycache__/

|

||||||

|

|

@ -0,0 +1,21 @@

|

||||||

|

default:

|

||||||

|

tags:

|

||||||

|

- docker

|

||||||

|

image:

|

||||||

|

name: ufoym/deepo:all-jupyter

|

||||||

|

entrypoint: [""]

|

||||||

|

|

||||||

|

before_script:

|

||||||

|

- pip install -U .

|

||||||

|

- pip config set global.index-url https://mirrors.aliyun.com/pypi/simple/

|

||||||

|

- pip install -r requirements.txt

|

||||||

|

|

||||||

|

stages:

|

||||||

|

- test

|

||||||

|

|

||||||

|

pytest:

|

||||||

|

stage: test

|

||||||

|

script:

|

||||||

|

- pip install -U .[dev]

|

||||||

|

- pytest --cov=./

|

||||||

|

coverage: '/^TOTAL.*\s+(\d+\%)$/'

|

||||||

32

LICENSE

32

LICENSE

|

|

@ -1,17 +1,19 @@

|

||||||

<copyright notice> By obtaining, using, and/or copying this software and/or

|

Copyright (c) [2021] [The Supervised Layout Benchmark]

|

||||||

its associated documentation, you agree that you have read, understood, and

|

|

||||||

will comply with the following terms and conditions:

|

|

||||||

|

|

||||||

Permission to use, copy, modify, and distribute this software and its associated

|

Permission is hereby granted, free of charge, to any person obtaining a copy

|

||||||

documentation for any purpose and without fee is hereby granted, provided

|

of this software and associated documentation files (the "Software"), to deal

|

||||||

that the above copyright notice appears in all copies, and that both that

|

in the Software without restriction, including without limitation the rights

|

||||||

copyright notice and this permission notice appear in supporting documentation,

|

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

||||||

and that the name of the copyright holder not be used in advertising or publicity

|

copies of the Software, and to permit persons to whom the Software is

|

||||||

pertaining to distribution of the software without specific, written permission.

|

furnished to do so, subject to the following conditions:

|

||||||

|

|

||||||

THE COPYRIGHT HOLDER DISCLAIM ALL WARRANTIES WITH REGARD TO THIS SOFTWARE,

|

The above copyright notice and this permission notice shall be included in all

|

||||||

INCLUDING ALL IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS. IN NO EVENT

|

copies or substantial portions of the Software.

|

||||||

SHALL THE COPYRIGHT HOLDER BE LIABLE FOR ANY SPECIAL, INDIRECT OR CONSEQUENTIAL

|

|

||||||

DAMAGES OR ANY DAMAGES WHATSOEVER RESULTING FROM THE LOSS OF USE, DATA OR

|

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

||||||

PROFITS, WHETHER IN AN ACTION OF CONTRACT, NEGLIGENCE OR OTHER TORTIOUS ACTION,

|

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

||||||

ARISING OUT OF OR IN CONNECTION WITH THE USE OR PERFORMANCE OF THIS SOFTWARE.

|

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

||||||

|

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

||||||

|

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

||||||

|

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

||||||

|

SOFTWARE.

|

||||||

93

README.md

93

README.md

|

|

@ -1,20 +1,87 @@

|

||||||

#### 从命令行创建一个新的仓库

|

# supervised_layout_benchmark

|

||||||

|

|

||||||

```bash

|

## Introduction

|

||||||

touch README.md

|

|

||||||

git init

|

|

||||||

git add README.md

|

|

||||||

git commit -m "first commit"

|

|

||||||

git remote add origin https://git.osredm.com/p57201394/supervised_layout_benchmark.git

|

|

||||||

git push -u origin master

|

|

||||||

|

|

||||||

|

This project aims to establish a deep neural network (DNN) surrogate modeling benchmark for the temperature field prediction of heat source layout (HSL-TFP) task, providing a set of representative DNN surrogates as baselines as well as the original code files for easy start and comparison.

|

||||||

|

|

||||||

|

## Running Requirements

|

||||||

|

|

||||||

|

- ### Software

|

||||||

|

|

||||||

|

- python:

|

||||||

|

- cuda:

|

||||||

|

- pytorch:

|

||||||

|

|

||||||

|

- ### Hardware

|

||||||

|

|

||||||

|

- A single GPU with at least 4GB.

|

||||||

|

|

||||||

|

|

||||||

|

## Environment construction

|

||||||

|

|

||||||

|

- ``` pip install -r requirements.txt ```

|

||||||

|

|

||||||

|

## A quick start

|

||||||

|

|

||||||

|

The training, test and visualization can be accessed by running `main.py` file.

|

||||||

|

|

||||||

|

- The data is available at the server address: `\\192.168.2.1\mnt/share1/layout_data/v1.0/data/`(refer to [Readme for samples](https://git.idrl.site/gongzhiqiang/supervised_layout_benchmark/blob/master/samples/README.md)). Remember to modify variable `data_root` in the configuration file `config/config_complex_net.yml` to the right server address.

|

||||||

|

|

||||||

|

- Training

|

||||||

|

|

||||||

|

```python

|

||||||

|

python main.py -m train

|

||||||

```

|

```

|

||||||

|

|

||||||

#### 从命令行推送已经创建的仓库

|

or

|

||||||

|

|

||||||

```bash

|

|

||||||

git remote add origin https://git.osredm.com/p57201394/supervised_layout_benchmark.git

|

|

||||||

git push -u origin master

|

|

||||||

|

|

||||||

|

```python

|

||||||

|

python main.py --mode=train

|

||||||

```

|

```

|

||||||

|

|

||||||

|

- Test

|

||||||

|

|

||||||

|

```python

|

||||||

|

python main.py -m test --test_check_num=21

|

||||||

|

```

|

||||||

|

|

||||||

|

or

|

||||||

|

|

||||||

|

```python

|

||||||

|

python main.py --mode=test --test_check_num=21

|

||||||

|

```

|

||||||

|

|

||||||

|

where variable `test_check_num` is the number of the saved model for test.

|

||||||

|

|

||||||

|

- Prediction visualization

|

||||||

|

|

||||||

|

```python

|

||||||

|

python main.py -m plot --test_check_num=21

|

||||||

|

```

|

||||||

|

|

||||||

|

or

|

||||||

|

```python

|

||||||

|

python main.py --mode=plot --test_check_num=21

|

||||||

|

```

|

||||||

|

|

||||||

|

where variable `test_check_num` is the number of the saved model for plotting.

|

||||||

|

|

||||||

|

## Project architecture

|

||||||

|

|

||||||

|

- `config`: the configuration file

|

||||||

|

- `notebook`: the test file for `notebook`

|

||||||

|

- `outputs`: the output results by `test` and `plot` module. The test results is saved at `outputs/*.csv` and the plotting figures is saved at `outputs/predict_plot/`.

|

||||||

|

- `src`: including surrogate model, training and testing files.

|

||||||

|

- `test.py`: testing files.

|

||||||

|

- `train.py`: training files.

|

||||||

|

- `plot.py`: prediction visualization files.

|

||||||

|

- `data`: data preprocessing and data loading files.

|

||||||

|

- `metric`: evaluation metric file. (For details, see [Readme for metric](https://git.idrl.site/gongzhiqiang/supervised_layout_benchmark/blob/master/src/metric/README.md))

|

||||||

|

- `models`: DNN surrogate models for the HSL-TFP task.

|

||||||

|

- `utils`: useful tool function files.

|

||||||

|

|

||||||

|

## One tiny example

|

||||||

|

|

||||||

|

One tiny example for training and testing can be accessed based on the following instruction.

|

||||||

|

* Some training and testing data are available at `samples/data`.

|

||||||

|

* Based on the original configuration file, run `python main.py` directly for a quick experience of this tiny example.

|

||||||

|

|

@ -0,0 +1,71 @@

|

||||||

|

# supervised_layout_benchmark

|

||||||

|

|

||||||

|

## 介绍

|

||||||

|

|

||||||

|

> 该项目主要用于实现卫星组件热布局不同深度代理模型训练、测试以及热布局预测作图.

|

||||||

|

|

||||||

|

## 环境要求

|

||||||

|

|

||||||

|

- ### 软件要求

|

||||||

|

|

||||||

|

- python:

|

||||||

|

- cuda:

|

||||||

|

- pytorch:

|

||||||

|

|

||||||

|

- ### 硬件要求

|

||||||

|

|

||||||

|

- 大约4GB显存的GPU

|

||||||

|

|

||||||

|

|

||||||

|

## 构建环境

|

||||||

|

|

||||||

|

- ``` pip install -r requirements.txt ```

|

||||||

|

|

||||||

|

## 快速开始

|

||||||

|

|

||||||

|

> 运行训练、测试以及热布局作图统一通过main.py入口.

|

||||||

|

|

||||||

|

- 数据放在服务器`\\192.168.2.1\mnt/share1/layout_data/v1.0/data/`(详见[Readme](https://git.idrl.site/gongzhiqiang/supervised_layout_benchmark/blob/master/samples/README.md)),运行时请修改程序配置文件`config/config_complex_net.yml`中`data_root`输入变量为挂载服务器上数据地址.

|

||||||

|

|

||||||

|

- 训练和测试

|

||||||

|

|

||||||

|

```python

|

||||||

|

python main.py -m train 或者 python main.py --mode=train

|

||||||

|

```

|

||||||

|

|

||||||

|

- 测试

|

||||||

|

|

||||||

|

```python

|

||||||

|

python main.py -m test --test_check_num=21 或者 python main.py --mode=test --test_check_num=21

|

||||||

|

```

|

||||||

|

|

||||||

|

其中`test_check_num`是测试输入模型存储的编号.

|

||||||

|

|

||||||

|

- 热布局预测作图

|

||||||

|

|

||||||

|

```python

|

||||||

|

python main.py -m plot --test_check_num=21 或者 python main.py --mode=plot --test_check_num=21

|

||||||

|

```

|

||||||

|

|

||||||

|

其中`test_check_num`是作图输入模型存储的编号.

|

||||||

|

|

||||||

|

## 项目结构

|

||||||

|

|

||||||

|

- `benchmark`目录存放运行所需所有程序

|

||||||

|

- `config`存放运行配置文件

|

||||||

|

- `notebook`存放`notebook`测试文件

|

||||||

|

- `outputs`用于存放`test`和`plot`作图输出结果,测试的输出结果保存在`outputs/*.csv`,`plot`结果保存在`outputs/predict_plot/`

|

||||||

|

- `src`用于存放模型文件和测试训练文件

|

||||||

|

- `test.py`测试程序

|

||||||

|

- `train.py`训练程序

|

||||||

|

- `plot.py`预测可视化程序

|

||||||

|

- `data`文件夹存放数据预处理和读取程序

|

||||||

|

- `metrics`文件夹存放热布局度量函数,详见[Readme](https://git.idrl.site/gongzhiqiang/supervised_layout_benchmark/blob/master/src/metric/README.md)

|

||||||

|

- `models`热布局深度代理模型所用深度模型

|

||||||

|

- `utils`工具类文件

|

||||||

|

|

||||||

|

## 其他

|

||||||

|

|

||||||

|

* 训练测试examples

|

||||||

|

* 训练样本测试样本存放于`samples/data`中

|

||||||

|

* 原始文件配置环境后,直接运行`python main.py`,即运行example

|

||||||

|

|

@ -0,0 +1,46 @@

|

||||||

|

# config

|

||||||

|

|

||||||

|

# model

|

||||||

|

## support SegNet_AlexNet, SegNet_VGG, SegNet_ResNet18, SegNet_ResNet34, SegNet_ResNet50, SegNet_ResNet101, SegNet_ResNet152

|

||||||

|

## FPN_ResNet18, FPN_ResNet50, FPN_ResNet101, FPN_ResNet34, FPN_ResNet152

|

||||||

|

## FCN_AlexNet, FCN_VGG, FCN_ResNet18, FCN_ResNet50, FCN_ResNet101, FCN_ResNet34, FCN_ResNet152

|

||||||

|

## UNet_VGG

|

||||||

|

model_name: FCN # choose from FPN, FCN, SegNet, UNet

|

||||||

|

backbone: AlexNet # choose from AlexNet, VGG, ResNet18, ResNet50, ResNet101

|

||||||

|

|

||||||

|

# dataset path

|

||||||

|

data_root: samples/data/

|

||||||

|

boundary: one_point # choose from rm_wall, one_point, all_walls

|

||||||

|

|

||||||

|

# train/val set

|

||||||

|

train_list: train/train_val.txt

|

||||||

|

|

||||||

|

# test set

|

||||||

|

## choose the test set: test_0.txt, test_1.txt, test_2.txt, test_3.txt,test_4.txt,test_5.txt,test_6.txt

|

||||||

|

test_list: test/test_0.txt

|

||||||

|

|

||||||

|

# metric for testing

|

||||||

|

## choose from "mae_global", "mae_boundary", "mae_component",

|

||||||

|

## "value_and_pos_error_of_maximum_temperature", "max_tem_spearmanr", "global_image_spearmanr"

|

||||||

|

metric: mae_boundary

|

||||||

|

|

||||||

|

# dataset format: mat or h5

|

||||||

|

data_format: mat

|

||||||

|

batch_size: 2

|

||||||

|

max_epochs: 50

|

||||||

|

lr: 0.001

|

||||||

|

|

||||||

|

# number of gpus to use

|

||||||

|

gpus: 1

|

||||||

|

val_check_interval: 1.0

|

||||||

|

|

||||||

|

# num_workers in dataloader

|

||||||

|

num_workers: 4

|

||||||

|

|

||||||

|

# preprocessing of data

|

||||||

|

## input

|

||||||

|

mean_layout: 0

|

||||||

|

std_layout: 1000

|

||||||

|

## output

|

||||||

|

mean_heat: 298

|

||||||

|

std_heat: 50

|

||||||

|

|

@ -0,0 +1,46 @@

|

||||||

|

# data config for computation of metrics

|

||||||

|

|

||||||

|

## SIZE OF COMPONENTS

|

||||||

|

units:

|

||||||

|

- - 0.016

|

||||||

|

- 0.012

|

||||||

|

- - 0.012

|

||||||

|

- 0.006

|

||||||

|

- - 0.018

|

||||||

|

- 0.009

|

||||||

|

- - 0.018

|

||||||

|

- 0.012

|

||||||

|

- - 0.018

|

||||||

|

- 0.018

|

||||||

|

- - 0.012

|

||||||

|

- 0.012

|

||||||

|

- - 0.018

|

||||||

|

- 0.006

|

||||||

|

- - 0.009

|

||||||

|

- 0.009

|

||||||

|

- - 0.006

|

||||||

|

- 0.024

|

||||||

|

- - 0.006

|

||||||

|

- 0.012

|

||||||

|

- - 0.012

|

||||||

|

- 0.024

|

||||||

|

- - 0.024

|

||||||

|

- 0.024

|

||||||

|

|

||||||

|

## POWERS OF THE COMPONENTS

|

||||||

|

powers:

|

||||||

|

- 4000

|

||||||

|

- 16000

|

||||||

|

- 6000

|

||||||

|

- 8000

|

||||||

|

- 10000

|

||||||

|

- 14000

|

||||||

|

- 16000

|

||||||

|

- 20000

|

||||||

|

- 8000

|

||||||

|

- 16000

|

||||||

|

- 10000

|

||||||

|

- 20000

|

||||||

|

|

||||||

|

## LENGTH OF LAYOUT BOARD

|

||||||

|

length: 0.1

|

||||||

|

|

@ -0,0 +1,6 @@

|

||||||

|

FROM ufoym/deepo:pytorch

|

||||||

|

LABEL maintainer="gongzhiqiang@alumni.sjtu.edu.cn"

|

||||||

|

|

||||||

|

WORKDIR /tmp

|

||||||

|

COPY requirements.txt ./

|

||||||

|

RUN pip install -r requirements.txt

|

||||||

|

|

@ -0,0 +1,65 @@

|

||||||

|

# encoding: utf-8

|

||||||

|

"""

|

||||||

|

This function denotes the main function to train/test/plot

|

||||||

|

Usage:

|

||||||

|

python main.py [FLAGS]

|

||||||

|

|

||||||

|

@author: gongzhiqiang

|

||||||

|

@contact: gongzhiqiang@alumni.sjtu.edu.cn

|

||||||

|

|

||||||

|

@version: 1.0

|

||||||

|

@file: main.py

|

||||||

|

@time: 2020-12-22

|

||||||

|

|

||||||

|

"""

|

||||||

|

from pathlib import Path

|

||||||

|

import configargparse

|

||||||

|

|

||||||

|

from src.LayoutDeepRegression import Model

|

||||||

|

from src import train, test, plot

|

||||||

|

|

||||||

|

|

||||||

|

def main():

|

||||||

|

# default configuration file

|

||||||

|

config_path = Path(__file__).absolute().parent / "config/config.yml"

|

||||||

|

parser = configargparse.ArgParser(default_config_files=[str(config_path)], description="Hyper-parameters.")

|

||||||

|

|

||||||

|

# configuration file

|

||||||

|

parser.add_argument("--config", is_config_file=True, default=False, help="config file path")

|

||||||

|

|

||||||

|

# mode

|

||||||

|

parser.add_argument("-m", "--mode", type=str, default="train", help="model: train or test or plot")

|

||||||

|

|

||||||

|

# args for training

|

||||||

|

parser.add_argument("--gpus", type=int, default=0, help="how many gpus")

|

||||||

|

parser.add_argument("--batch_size", default=16, type=int)

|

||||||

|

parser.add_argument("--max_epochs", default=20, type=int)

|

||||||

|

parser.add_argument("--lr", default="0.01", type=float)

|

||||||

|

parser.add_argument("--resume_from_checkpoint", type=str, help="resume from checkpoint")

|

||||||

|

parser.add_argument("--num_workers", default=2, type=int, help="num_workers in DataLoader")

|

||||||

|

parser.add_argument("--seed", type=int, default=1, help="seed")

|

||||||

|

parser.add_argument("--use_16bit", type=bool, default=False, help="use 16bit precision")

|

||||||

|

parser.add_argument("--profiler", action="store_true", help="use profiler")

|

||||||

|

|

||||||

|

# args for validation

|

||||||

|

parser.add_argument("--val_check_interval", type=float, default=1,

|

||||||

|

help="how often within one training epoch to check the validation set")

|

||||||

|

|

||||||

|

# args for testing

|

||||||

|

parser.add_argument("--test_check_num", default='0', type=str, help="checkpoint for test")

|

||||||

|

parser.add_argument("--test_args", action="store_true", help="print args")

|

||||||

|

|

||||||

|

# args from Model

|

||||||

|

parser = Model.add_model_specific_args(parser)

|

||||||

|

hparams = parser.parse_args()

|

||||||

|

|

||||||

|

# running

|

||||||

|

assert hparams.mode in ["train", "test", "plot"]

|

||||||

|

if hparams.test_args:

|

||||||

|

print(hparams)

|

||||||

|

else:

|

||||||

|

getattr(eval(hparams.mode), "main")(hparams)

|

||||||

|

|

||||||

|

|

||||||

|

if __name__ == '__main__':

|

||||||

|

main()

|

||||||

|

|

@ -0,0 +1,11 @@

|

||||||

|

tqdm==4.42.1

|

||||||

|

scipy==1.4.1

|

||||||

|

pytest==5.3.5

|

||||||

|

numpy==1.18.1

|

||||||

|

matplotlib==3.1.3

|

||||||

|

ConfigArgParse==1.2.3

|

||||||

|

pytorch_lightning==1.1.2

|

||||||

|

PyYAML==5.3.1

|

||||||

|

scikit_learn==0.23.2

|

||||||

|

torch>=1.5.0

|

||||||

|

torchvision==0.8.1

|

||||||

|

|

@ -0,0 +1,131 @@

|

||||||

|

# Datasets for benchmark

|

||||||

|

|

||||||

|

## 介绍

|

||||||

|

|

||||||

|

> 该数据库用于支持热布局温度场预测任务,数据地址:/192.168.2.1/mnt/share1/layout_data/v1.0/data/

|

||||||

|

>

|

||||||

|

> samples中提供数据库的样例

|

||||||

|

|

||||||

|

## 数据库结构

|

||||||

|

|

||||||

|

> 数据库提供三种不同边界:小孔散热、单边散热和四周全散热

|

||||||

|

|

||||||

|

- `data`中存放不同边界数据库

|

||||||

|

- `one_point`小孔散热边界

|

||||||

|

- `train`存放训练数据

|

||||||

|

- `train`训练样本存放文件夹

|

||||||

|

- `train_val.txt`用于网络训练的数据list

|

||||||

|

- `test`存放测试数据

|

||||||

|

- `test`测试样本存放文件夹

|

||||||

|

- `test_*.txt`用于测试的数据list,其中`test_0.txt`、`test_1.txt`、`test_2.txt`、`test_3.txt`、`test_4.txt`、`test_5.txt`、`test_6.txt`分别存放了不同方式采样得到的测试样本

|

||||||

|

- `rm_wall`单边散热边界

|

||||||

|

- `train`

|

||||||

|

- `train`

|

||||||

|

- `train_val.txt`

|

||||||

|

- `test`

|

||||||

|

- `test`

|

||||||

|

- `test_*.txt`

|

||||||

|

- `all_walls`四周全散热边界

|

||||||

|

- `train`

|

||||||

|

- `train`

|

||||||

|

- `train_val.txt`

|

||||||

|

- `test`

|

||||||

|

- `test`

|

||||||

|

- `test_*.txt`

|

||||||

|

|

||||||

|

## 组件介绍

|

||||||

|

|

||||||

|

> 布局区域是`0.1m*0.1m`方形区域,共有12个大小功率不同组件

|

||||||

|

|

||||||

|

* 组件大小、功率

|

||||||

|

|

||||||

|

| 组件 | 长(m) | 宽(m) | 功率($W/m^2$) |

|

||||||

|

| :--: | :---: | :---: | :-----------: |

|

||||||

|

| 1 | 0.016 | 0.012 | 4000 |

|

||||||

|

| 2 | 0.012 | 0.006 | 16000 |

|

||||||

|

| 3 | 0.018 | 0.009 | 6000 |

|

||||||

|

| 4 | 0.018 | 0.012 | 8000 |

|

||||||

|

| 5 | 0.018 | 0.018 | 10000 |

|

||||||

|

| 6 | 0.012 | 0.012 | 14000 |

|

||||||

|

| 7 | 0.018 | 0.006 | 16000 |

|

||||||

|

| 8 | 0.009 | 0.009 | 20000 |

|

||||||

|

| 9 | 0.006 | 0.024 | 8000 |

|

||||||

|

| 10 | 0.006 | 0.012 | 16000 |

|

||||||

|

| 11 | 0.012 | 0.024 | 10000 |

|

||||||

|

| 12 | 0.024 | 0.024 | 20000 |

|

||||||

|

|

||||||

|

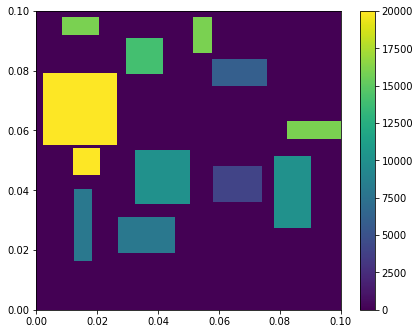

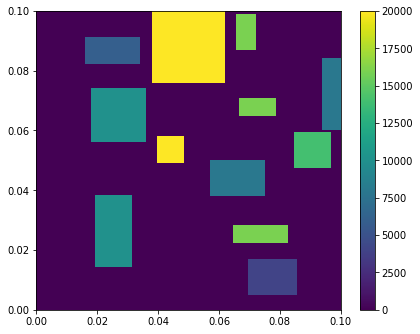

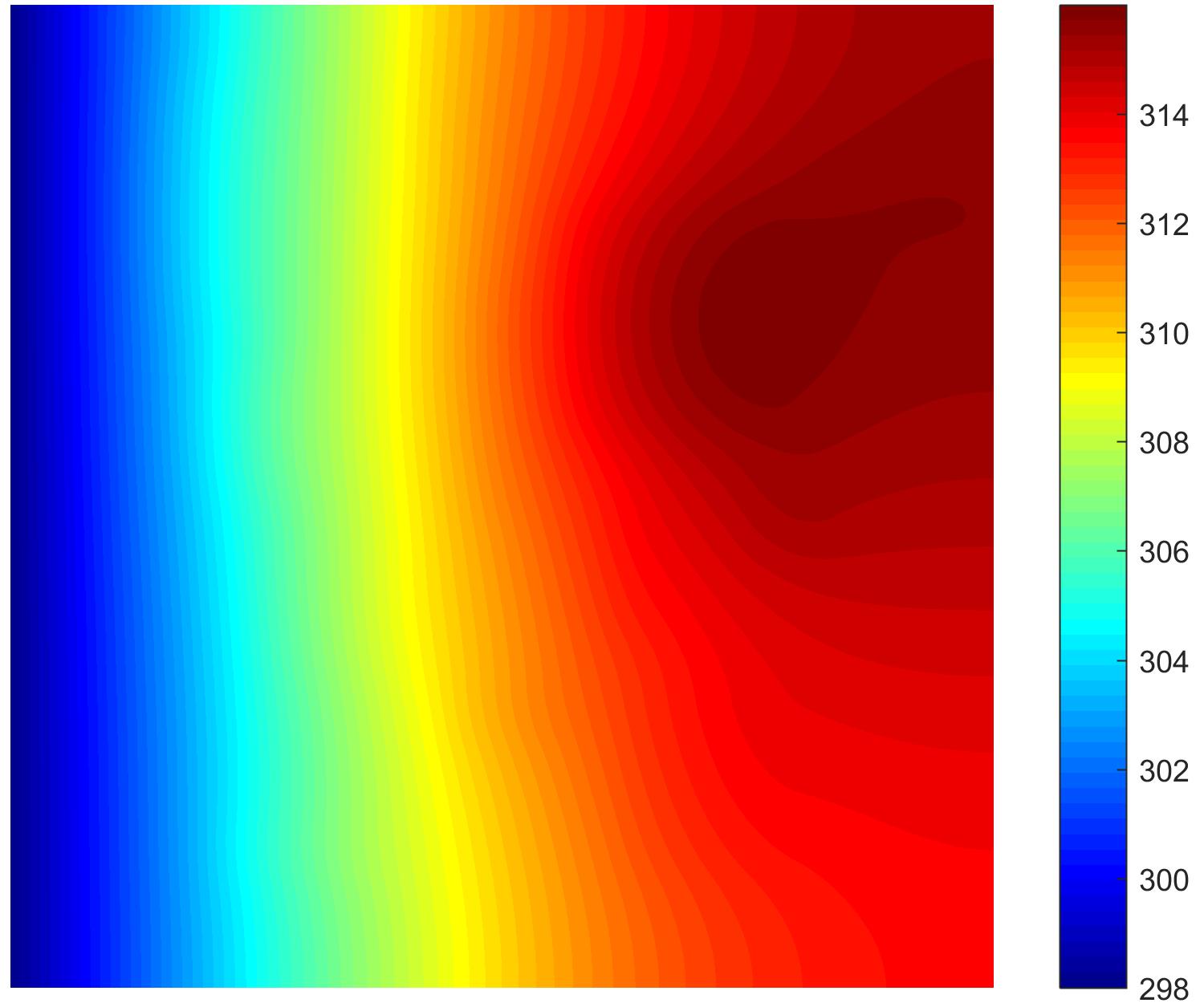

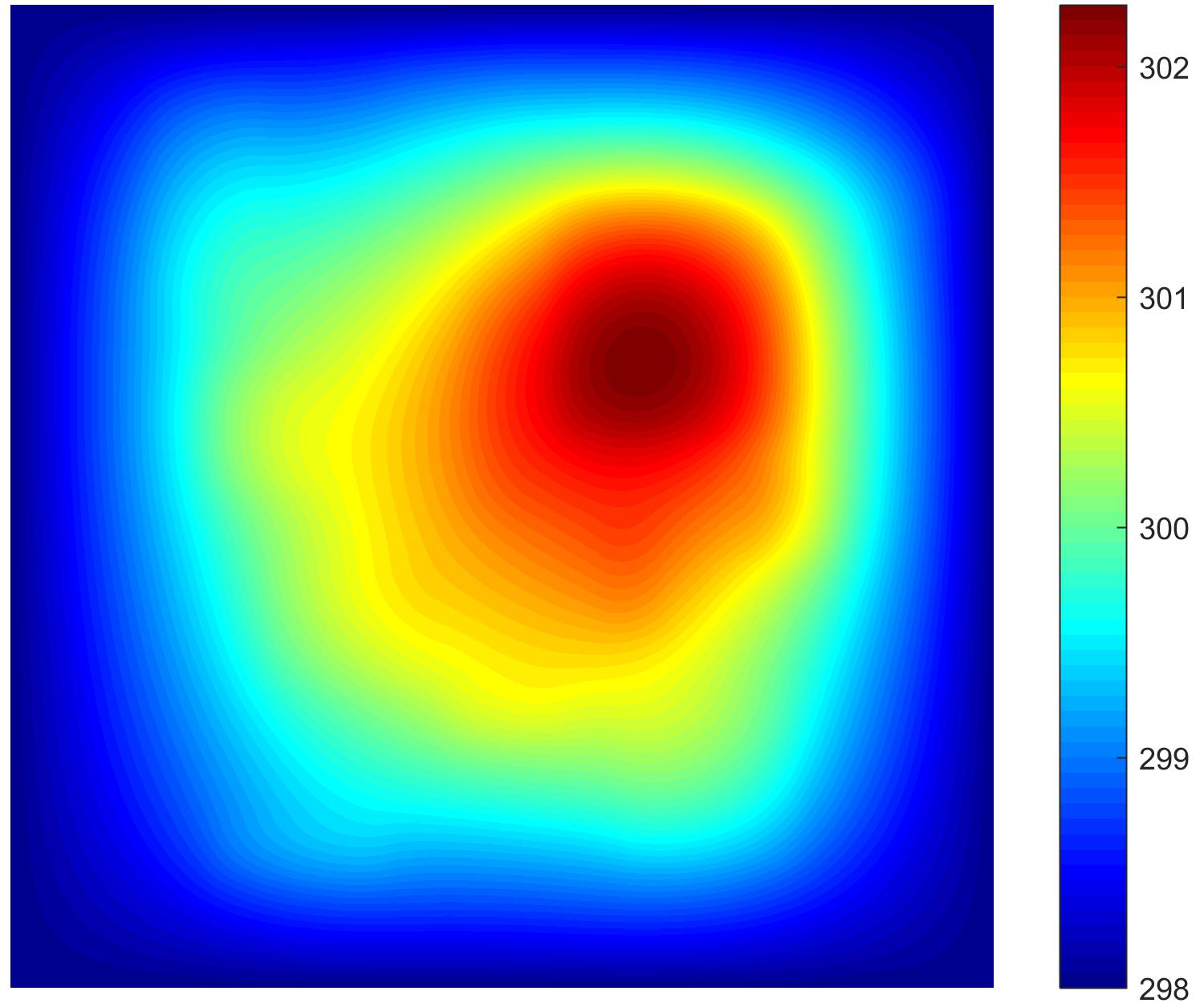

* 组件布局示例

|

||||||

|

|

||||||

|

|  |  |

|

||||||

|

| :-----------------------------------------------------: | :-----------------------------------------------------: |

|

||||||

|

| Example 1 | Example 2 |

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

## 数据库详情

|

||||||

|

|

||||||

|

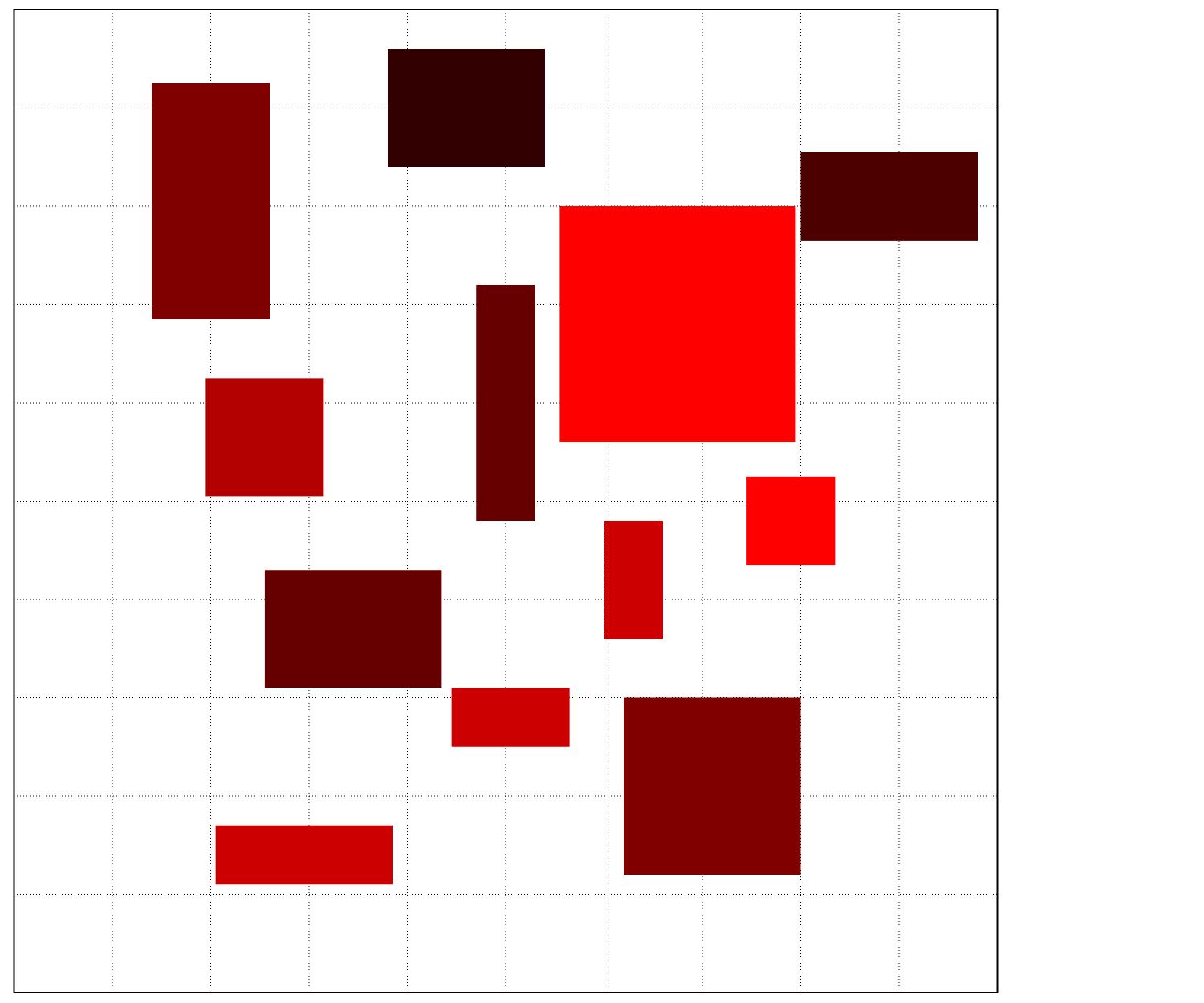

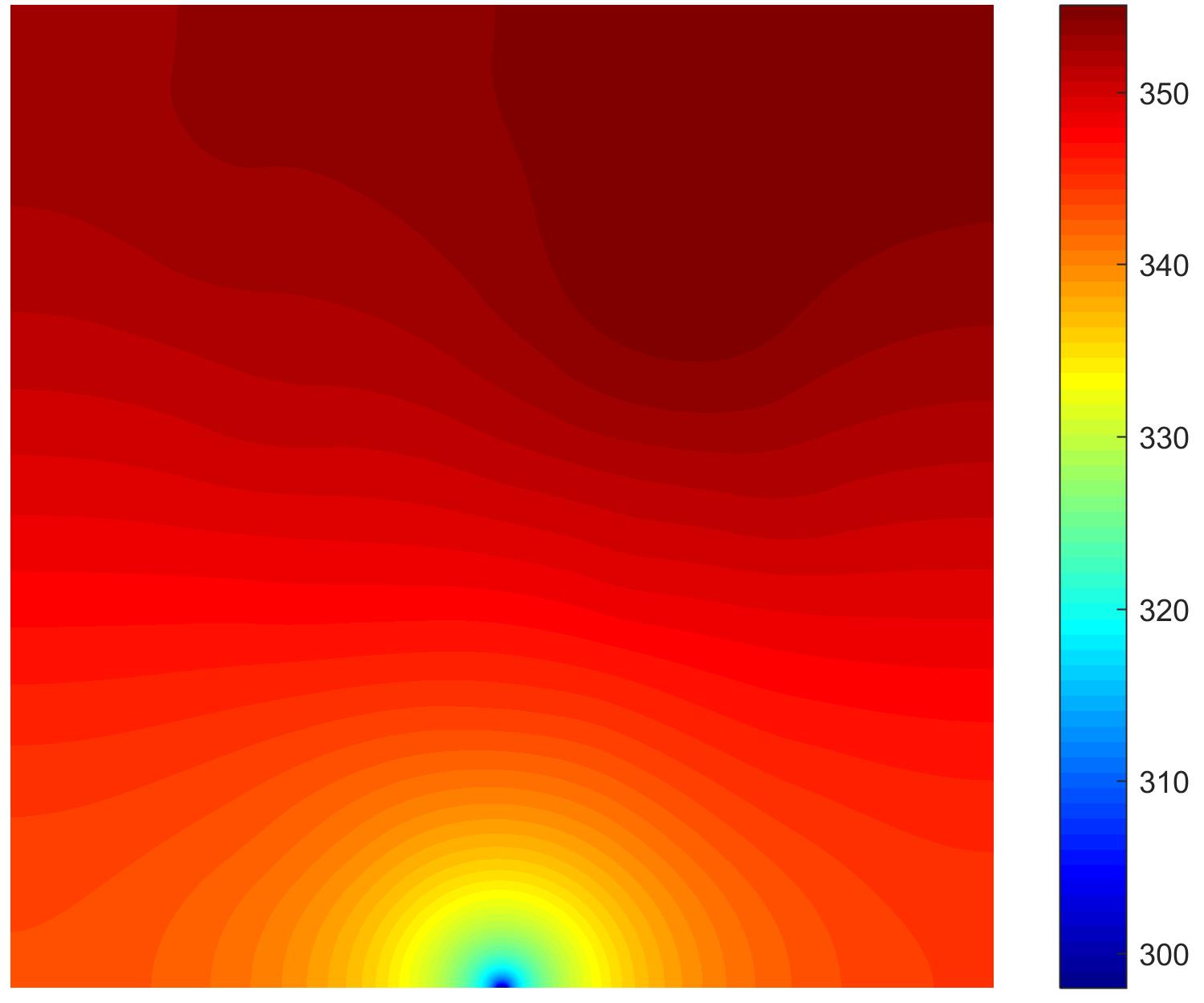

* train包含2000组sequence采样方式生成的训练样本 ,示例如下

|

||||||

|

|

||||||

|

|  |  |  |  |

|

||||||

|

| :----------------------------------------------------------: | :----------------------------------------------------------: | :----------------------------------------------------------: | :----------------------------------------------------------: |

|

||||||

|

| heat layout | one point | rm_wall | all_walls |

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

* test包含不同方式获得的测试样本40000组

|

||||||

|

|

||||||

|

* `test_0.txt`通过sequence采样方式生成的10000组测试样本 ,示例如下

|

||||||

|

|

||||||

|

|  |  |  |

|

||||||

|

| :----------------------------------------------------------: | :----------------------------------------------------------: | :----------------------------------------------------------: |

|

||||||

|

| Example 1 | Example 2 | Example 3 |

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

* `test_1.txt`通过gibbs方式采样生成的10000组测试样本 ,示例如下

|

||||||

|

|

||||||

|

|  |  |  |

|

||||||

|

| :----------------------------------------------------------: | :----------------------------------------------------------: | :----------------------------------------------------------: |

|

||||||

|

| Example 1 | Example 2 | Example 3 |

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

* `test_2.txt`功率相同或相近组件相邻构成的特殊组件布局样本,共有4类情况,每类情况1000组测试样本 ,示例如下

|

||||||

|

|

||||||

|

|  |  |  |  |

|

||||||

|

| :----------------------------------------------------------: | :----------------------------------------------------------: | :----------------------------------------------------------: | :----------------------------------------------------------: |

|

||||||

|

|  |  |  |  |

|

||||||

|

| 8和12号组件 | 2和7和10号组件 | 5和11号组件 | 4和9号组件 |

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

* `test_3.txt`组件布局密集在上半部,1/5区域,2/5区域,3/5区域,4/5区域,或下半部的测试样本,各1000组 ,示例如下

|

||||||

|

|

||||||

|

|  |  |  |  |  |  |

|

||||||

|

| :----------------------------------------------------------: | :----------------------------------------------------------: | :----------------------------------------------------------: | :----------------------------------------------------------: | :----------------------------------------------------------: | :----------------------------------------------------------: |

|

||||||

|

| 上半部 | 1/5区域 | 2/5区域 | 3/5区域 | 4/5区域 | 下半部 |

|

||||||

|

|

||||||

|

* `test_4.txt`组件布局密集在左半部,1/5区域,2/5区域,3/5区域,4/5区域,或右半部的测试样本,各1000组 ,示例如下

|

||||||

|

|

||||||

|

|  |  |  |  |  |  |

|

||||||

|

| :----------------------------------------------------------: | :----------------------------------------------------------: | :----------------------------------------------------------: | :----------------------------------------------------------: | :----------------------------------------------------------: | :----------------------------------------------------------: |

|

||||||

|

| 左半部 | 1/5区域 | 2/5区域 | 3/5区域 | 4/5区域 | 右半部 |

|

||||||

|

|

||||||

|

* `test_5.txt`组件布局在内部较小方形区域测试样本,共考虑100x100区域,120x120区域,140x140区域3种情况,各1000组测试样本 ,示例如下

|

||||||

|

|

||||||

|

|  |  |  |

|

||||||

|

| :----------------------------------------------------------: | :----------------------------------------------------------: | :----------------------------------------------------------: |

|

||||||

|

|  |  |  |

|

||||||

|

| 100x100 | 120x120 | 140x140 |

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

* `test_6.txt`最大功率布局在角落中的特殊样本,共1000组测试样本,示例如下

|

||||||

|

|

||||||

|

|  |  |  |  |

|

||||||

|

| :----------------------------------------------------------: | :----------------------------------------------------------: | :----------------------------------------------------------: | :----------------------------------------------------------: |

|

||||||

|

| 右下角 | 左上角 | 左下角 | 左下角 |

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

## 其他

|

||||||

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

|

|

@ -0,0 +1,8 @@

|

||||||

|

aw_test0_1.mat

|

||||||

|

aw_test0_2.mat

|

||||||

|

aw_test0_3.mat

|

||||||

|

aw_test0_4.mat

|

||||||

|

aw_test0_5.mat

|

||||||

|

aw_test0_6.mat

|

||||||

|

aw_test0_7.mat

|

||||||

|

aw_test0_8.mat

|

||||||

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

|

|

@ -0,0 +1,10 @@

|

||||||

|

aw_train_1.mat

|

||||||

|

aw_train_2.mat

|

||||||

|

aw_train_3.mat

|

||||||

|

aw_train_4.mat

|

||||||

|

aw_train_5.mat

|

||||||

|

aw_train_6.mat

|

||||||

|

aw_train_7.mat

|

||||||

|

aw_train_8.mat

|

||||||

|

aw_train_9.mat

|

||||||

|

aw_train_10.mat

|

||||||

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

|

|

@ -0,0 +1,8 @@

|

||||||

|

op_test0_1.mat

|

||||||

|

op_test0_2.mat

|

||||||

|

op_test0_3.mat

|

||||||

|

op_test0_4.mat

|

||||||

|

op_test0_5.mat

|

||||||

|

op_test0_6.mat

|

||||||

|

op_test0_7.mat

|

||||||

|

op_test0_8.mat

|

||||||

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

|

|

@ -0,0 +1,10 @@

|

||||||

|

op_train_1.mat

|

||||||

|

op_train_2.mat

|

||||||

|

op_train_3.mat

|

||||||

|

op_train_4.mat

|

||||||

|

op_train_5.mat

|

||||||

|

op_train_6.mat

|

||||||

|

op_train_7.mat

|

||||||

|

op_train_8.mat

|

||||||

|

op_train_9.mat

|

||||||

|

op_train_10.mat

|

||||||

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

|

|

@ -0,0 +1,8 @@

|

||||||

|

rw_test0_1.mat

|

||||||

|

rw_test0_2.mat

|

||||||

|

rw_test0_3.mat

|

||||||

|

rw_test0_4.mat

|

||||||

|

rw_test0_5.mat

|

||||||

|

rw_test0_6.mat

|

||||||

|

rw_test0_7.mat

|

||||||

|

rw_test0_8.mat

|

||||||

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

|

|

@ -0,0 +1,10 @@

|

||||||

|

rw_train_1.mat

|

||||||

|

rw_train_2.mat

|

||||||

|

rw_train_3.mat

|

||||||

|

rw_train_4.mat

|

||||||

|

rw_train_5.mat

|

||||||

|

rw_train_6.mat

|

||||||

|

rw_train_7.mat

|

||||||

|

rw_train_8.mat

|

||||||

|

rw_train_9.mat

|

||||||

|

rw_train_10.mat

|

||||||

|

|

@ -0,0 +1,200 @@

|

||||||

|

# encoding: utf-8

|

||||||

|

import math

|

||||||

|

from pathlib import Path

|

||||||

|

|

||||||

|

import torch

|

||||||

|

import torch.nn as nn

|

||||||

|

from torch.utils.data import DataLoader, random_split

|

||||||

|

import torchvision

|

||||||

|

from torch.optim.lr_scheduler import ExponentialLR

|

||||||

|

from pytorch_lightning import LightningModule

|

||||||

|

|

||||||

|

from src.data.layout import LayoutDataset

|

||||||

|

import src.utils.np_transforms as transforms

|

||||||

|

import src.models as models

|

||||||

|

from src.metric.metrics import Metric

|

||||||

|

|

||||||

|

|

||||||

|

class Model(LightningModule):

|

||||||

|

|

||||||

|

def __init__(self, hparams):

|

||||||

|

super().__init__()

|

||||||

|

self.hparams = hparams

|

||||||

|

self._build_model()

|

||||||

|

self.criterion = nn.L1Loss()

|

||||||

|

self.train_dataset = None

|

||||||

|

self.val_dataset = None

|

||||||

|

self.test_dataset = None

|

||||||

|

|

||||||

|

def _build_model(self):

|

||||||

|

model_list = ["SegNet_AlexNet", "SegNet_VGG", "SegNet_ResNet18", "SegNet_ResNet50",

|

||||||

|

"SegNet_ResNet101", "SegNet_ResNet34", "SegNet_ResNet152",

|

||||||

|

"FPN_ResNet18", "FPN_ResNet50", "FPN_ResNet101", "FPN_ResNet34", "FPN_ResNet152",

|

||||||

|

"FCN_AlexNet", "FCN_VGG", "FCN_ResNet18", "FCN_ResNet50", "FCN_ResNet101",

|

||||||

|

"FCN_ResNet34", "FCN_ResNet152",

|

||||||

|

"UNet_VGG"]

|

||||||

|

layout_model = self.hparams.model_name + '_' + self.hparams.backbone

|

||||||

|

assert layout_model in model_list

|

||||||

|

self.model = getattr(models, layout_model)(in_channels=1)

|

||||||

|

|

||||||

|

def forward(self, x):

|

||||||

|

x = self.model(x)

|

||||||

|

x = torch.sigmoid(x)

|

||||||

|

return x

|

||||||

|

|

||||||

|

def __dataloader(self, dataset, shuffle=False):

|

||||||

|

loader = DataLoader(

|

||||||

|

dataset=dataset,

|

||||||

|

shuffle=shuffle,

|

||||||

|

batch_size=self.hparams.batch_size,

|

||||||

|

num_workers=self.hparams.num_workers,

|

||||||

|

)

|

||||||

|

return loader

|

||||||

|

|

||||||

|

def configure_optimizers(self):

|

||||||

|

optimizer = torch.optim.Adam(self.parameters(),

|

||||||

|

lr=self.hparams.lr)

|

||||||

|

scheduler = ExponentialLR(optimizer, gamma=0.99)

|

||||||

|

return [optimizer], [scheduler]

|

||||||

|

|

||||||

|

def prepare_data(self):

|

||||||

|

"""Prepare dataset

|

||||||

|

"""

|

||||||

|

size: int = self.hparams.input_size

|

||||||

|

transform_layout = transforms.Compose(

|

||||||

|

[

|

||||||

|

transforms.Resize(size=(size, size)),

|

||||||

|

transforms.ToTensor(),

|

||||||

|

transforms.Normalize(

|

||||||

|

torch.tensor([self.hparams.mean_layout]),

|

||||||

|

torch.tensor([self.hparams.std_layout]),

|

||||||

|

),

|

||||||

|

]

|

||||||

|

)

|

||||||

|

transform_heat = transforms.Compose(

|

||||||

|

[

|

||||||

|

transforms.Resize(size=(size, size)),

|

||||||

|

transforms.ToTensor(),

|

||||||

|

transforms.Normalize(

|

||||||

|

torch.tensor([self.hparams.mean_heat]),

|

||||||

|

torch.tensor([self.hparams.std_heat]),

|

||||||

|

),

|

||||||

|

]

|

||||||

|

)

|

||||||

|

|

||||||

|

# here only support format "mat"

|

||||||

|

assert self.hparams.data_format == "mat"

|

||||||

|

trainval_dataset = LayoutDataset(

|

||||||

|

self.hparams.data_root,

|

||||||

|

self.hparams.boundary,

|

||||||

|

list_path=self.hparams.train_list,

|

||||||

|

train=True,

|

||||||

|

transform=transform_layout,

|

||||||

|

target_transform=transform_heat,

|

||||||

|

)

|

||||||

|

test_dataset = LayoutDataset(

|

||||||

|

self.hparams.data_root,

|

||||||

|

self.hparams.boundary,

|

||||||

|

list_path=self.hparams.test_list,

|

||||||

|

train=False,

|

||||||

|

transform=transform_layout,

|

||||||

|

target_transform=transform_heat,

|

||||||

|

)

|

||||||

|

|

||||||

|

# split train/val set

|

||||||

|

train_length, val_length = int(len(trainval_dataset) * 0.8), int(len(trainval_dataset) * 0.2)

|

||||||

|

train_dataset, val_dataset = torch.utils.data.random_split(trainval_dataset,

|

||||||

|

[train_length, val_length])

|

||||||

|

|

||||||

|

print(

|

||||||

|

f"Prepared dataset, train:{int(len(train_dataset))},\

|

||||||

|

val:{int(len(val_dataset))}, test:{len(test_dataset)}"

|

||||||

|

)

|

||||||

|

|

||||||

|

# assign to use in dataloaders

|

||||||

|

self.train_dataset = self.__dataloader(train_dataset, shuffle=True)

|

||||||

|

self.val_dataset = self.__dataloader(val_dataset, shuffle=False)

|

||||||

|

self.test_dataset = self.__dataloader(test_dataset, shuffle=False)

|

||||||

|

|

||||||

|

def train_dataloader(self):

|

||||||

|

return self.train_dataset

|

||||||

|

|

||||||

|

def val_dataloader(self):

|

||||||

|

return self.val_dataset

|

||||||

|

|

||||||

|

def test_dataloader(self):

|

||||||

|

return self.test_dataset

|

||||||

|

|

||||||

|

def training_step(self, batch, batch_idx):

|

||||||

|

layout, heat = batch

|

||||||

|

heat_pred = self(layout)

|

||||||

|

loss = self.criterion(heat, heat_pred)

|

||||||

|

self.log("train/training_mae", loss * self.hparams.std_heat)

|

||||||

|

|

||||||

|

if batch_idx == 0:

|

||||||

|

grid = torchvision.utils.make_grid(

|

||||||

|

heat_pred[:4, ...], normalize=True

|

||||||

|

)

|

||||||

|

self.logger.experiment.add_image(

|

||||||

|

"train_pred_heat_field", grid, self.global_step

|

||||||

|

)

|

||||||

|

if self.global_step == 0:

|

||||||

|

grid = torchvision.utils.make_grid(

|

||||||

|

heat[:4, ...], normalize=True

|

||||||

|

)

|

||||||

|

self.logger.experiment.add_image(

|

||||||

|

"train_heat_field", grid, self.global_step

|

||||||

|

)

|

||||||

|

|

||||||

|

return {"loss": loss}

|

||||||

|

|

||||||

|

def validation_step(self, batch, batch_idx):

|

||||||

|

layout, heat = batch

|

||||||

|

heat_pred = self(layout)

|

||||||

|

loss = self.criterion(heat, heat_pred)

|

||||||

|

return {"val_loss": loss}

|

||||||

|

|

||||||

|

def validation_epoch_end(self, outputs):

|

||||||

|

val_loss_mean = torch.stack([x["val_loss"] for x in outputs]).mean()

|

||||||

|

self.log("val/val_mae", val_loss_mean.item() * self.hparams.std_heat)

|

||||||

|

|

||||||

|

def test_step(self, batch, batch_idx):

|

||||||

|

layout, heat = batch

|

||||||

|

heat_pred = self(layout)

|

||||||

|

|

||||||

|

data_config = Path(__file__).absolute().parent.parent / "config/data.yml"

|

||||||

|

layout_metric = Metric(heat_pred, heat, boundary=self.hparams.boundary,

|

||||||

|

layout=layout, data_config=data_config, hparams=self.hparams)

|

||||||

|

assert self.hparams.metric in layout_metric.metrics

|

||||||

|

loss = getattr(layout_metric, self.hparams.metric)()

|

||||||

|

return {"test_loss": loss}

|

||||||

|

|

||||||

|

def test_epoch_end(self, outputs):

|

||||||

|

test_loss_mean = torch.stack([x["test_loss"] for x in outputs]).mean()

|

||||||

|

self.log("test_loss (" + self.hparams.metric +")", test_loss_mean.item())

|

||||||

|

|

||||||

|

@staticmethod

|

||||||

|

def add_model_specific_args(parser): # pragma: no-cover

|

||||||

|

"""Parameters you define here will be available to your model through `self.hparams`.

|

||||||

|

"""

|

||||||

|

# dataset args

|

||||||

|

parser.add_argument("--data_root", type=str, required=True, help="path of dataset")

|

||||||

|

parser.add_argument("--train_list", type=str, required=True, help="path of train dataset list")

|

||||||

|

parser.add_argument("--train_size", default=0.8, type=float, help="train_size in train_test_split")

|

||||||

|

parser.add_argument("--test_list", type=str, required=True, help="path of test dataset list")

|

||||||

|

parser.add_argument("--boundary", type=str, default="rm_wall", help="boundary condition")

|

||||||

|

parser.add_argument("--data_format", type=str, default="mat", choices=["mat", "h5"], help="dataset format")

|

||||||

|

|

||||||

|

# Normalization params

|

||||||

|

parser.add_argument("--mean_layout", default=0, type=float)

|

||||||

|

parser.add_argument("--std_layout", default=1, type=float)

|

||||||

|

parser.add_argument("--mean_heat", default=0, type=float)

|

||||||

|

parser.add_argument("--std_heat", default=1, type=float)

|

||||||

|

|

||||||

|

# Model params (opt)

|

||||||

|

parser.add_argument("--input_size", default=200, type=int)

|

||||||

|

parser.add_argument("--model_name", type=str, default='SegNet', help="the name of chosen model")

|

||||||

|

parser.add_argument("--backbone", type=str, default='ResNet18', help="the used backbone in the regression model")

|

||||||

|

parser.add_argument("--metric", type=str, default='mae_global',

|

||||||

|

help="the used metric for evaluation of testing")

|

||||||

|

return parser

|

||||||

|

|

@ -0,0 +1,41 @@

|

||||||

|

# -*- encoding: utf-8 -*-

|

||||||

|

"""Layout dataset

|

||||||

|

"""

|

||||||

|

import os

|

||||||

|

from .loadresponse import LoadResponse, mat_loader

|

||||||

|

|

||||||

|

|

||||||

|

class LayoutDataset(LoadResponse):

|

||||||

|

"""Layout dataset (mutiple files) generated by 'layout-generator'.

|

||||||

|

"""

|

||||||

|

|

||||||

|

def __init__(

|

||||||

|

self,

|

||||||

|

root,

|

||||||

|

sub_dir,

|

||||||

|

list_path=None,

|

||||||

|

train=True,

|

||||||

|

transform=None,

|

||||||

|

target_transform=None,

|

||||||

|

load_name="F",

|

||||||

|

resp_name="u",

|

||||||

|

):

|

||||||

|

subdir = os.path.join("train", "train") \

|

||||||

|

if train else os.path.join("test", "test")

|

||||||

|

|

||||||

|

# find the path of the list of train/test samples

|

||||||

|

list_path = os.path.join(root, sub_dir, list_path)

|

||||||

|

|

||||||

|

# find the root path of the samples

|

||||||

|

root = os.path.join(root, sub_dir, subdir)

|

||||||

|

|

||||||

|

super().__init__(

|

||||||

|

root,

|

||||||

|

mat_loader,

|

||||||

|

list_path,

|

||||||

|

load_name=load_name,

|

||||||

|

resp_name=resp_name,

|

||||||

|

extensions="mat",

|

||||||

|

transform=transform,

|

||||||

|

target_transform=target_transform,

|

||||||

|

)

|

||||||

|

|

@ -0,0 +1,113 @@

|

||||||

|

# -*- encoding: utf-8 -*-

|

||||||

|

"""Load Response Dataset.

|

||||||

|

"""

|

||||||

|

import os

|

||||||

|

|

||||||

|

import scipy.io as sio

|

||||||

|

import numpy as np

|

||||||

|

from torchvision.datasets import VisionDataset

|

||||||

|

|

||||||

|

|

||||||

|

class LoadResponse(VisionDataset):

|

||||||

|

"""Some Information about LoadResponse dataset"""

|

||||||

|

|

||||||

|

def __init__(

|

||||||

|

self,

|

||||||

|

root,

|

||||||

|

loader,

|

||||||

|

list_path,

|

||||||

|

load_name="F",

|

||||||

|

resp_name="u",

|

||||||

|

extensions=None,

|

||||||

|

transform=None,

|

||||||

|

target_transform=None,

|

||||||

|

is_valid_file=None,

|

||||||

|

):

|

||||||

|

super().__init__(

|

||||||

|

root, transform=transform, target_transform=target_transform

|

||||||

|

)

|

||||||

|

self.list_path = list_path

|

||||||

|

self.loader = loader

|

||||||

|

self.load_name = load_name

|

||||||

|

self.resp_name = resp_name

|

||||||

|

self.extensions = extensions

|

||||||

|

self.sample_files = make_dataset_list(root, list_path, extensions, is_valid_file)

|

||||||

|

|

||||||

|

def __getitem__(self, index):

|

||||||

|

path = self.sample_files[index]

|

||||||

|

load, resp = self.loader(path, self.load_name, self.resp_name)

|

||||||

|

|

||||||

|

if self.transform is not None:

|

||||||

|

load = self.transform(load)

|

||||||

|

if self.target_transform is not None:

|

||||||

|

resp = self.target_transform(resp)

|

||||||

|

return load, resp

|

||||||

|

|

||||||

|

def __len__(self):

|

||||||

|

return len(self.sample_files)

|

||||||

|

|

||||||

|

|

||||||

|

def make_dataset(root_dir, extensions=None, is_valid_file=None):

|

||||||

|

"""make_dataset() from torchvision.

|

||||||

|

"""

|

||||||

|

files = []

|

||||||

|

root_dir = os.path.expanduser(root_dir)

|

||||||

|

if not ((extensions is None) ^ (is_valid_file is None)):

|

||||||

|

raise ValueError(

|

||||||

|

"Both extensions and is_valid_file \

|

||||||

|

cannot be None or not None at the same time"

|

||||||

|

)

|

||||||

|

if extensions is not None:

|

||||||

|

is_valid_file = lambda x: has_allowed_extension(x, extensions)

|

||||||

|

|

||||||

|

assert os.path.isdir(root_dir), root_dir

|

||||||

|

for root, _, fns in sorted(os.walk(root_dir, followlinks=True)):

|

||||||

|

for fn in sorted(fns):

|

||||||

|

path = os.path.join(root, fn)

|

||||||

|

if is_valid_file(path):

|

||||||

|

files.append(path)

|

||||||

|

return files

|

||||||

|

|

||||||

|

|

||||||

|

def make_dataset_list(root_dir, list_path, extensions=None, is_valid_file=None):

|

||||||

|

"""make_dataset() from torchvision.

|

||||||

|