Update README.md

parent

cfab59036c

commit

352fbc18b8

@@ -6,8 +6,7 @@ This is the official implementation and case study of the Full-coverage Vehicle

Source code can be found [here](https://github.com/idrl-lab/Full-coverage-camouflage-adversarial-attack/tree/gh-pages/src).

## Abstract


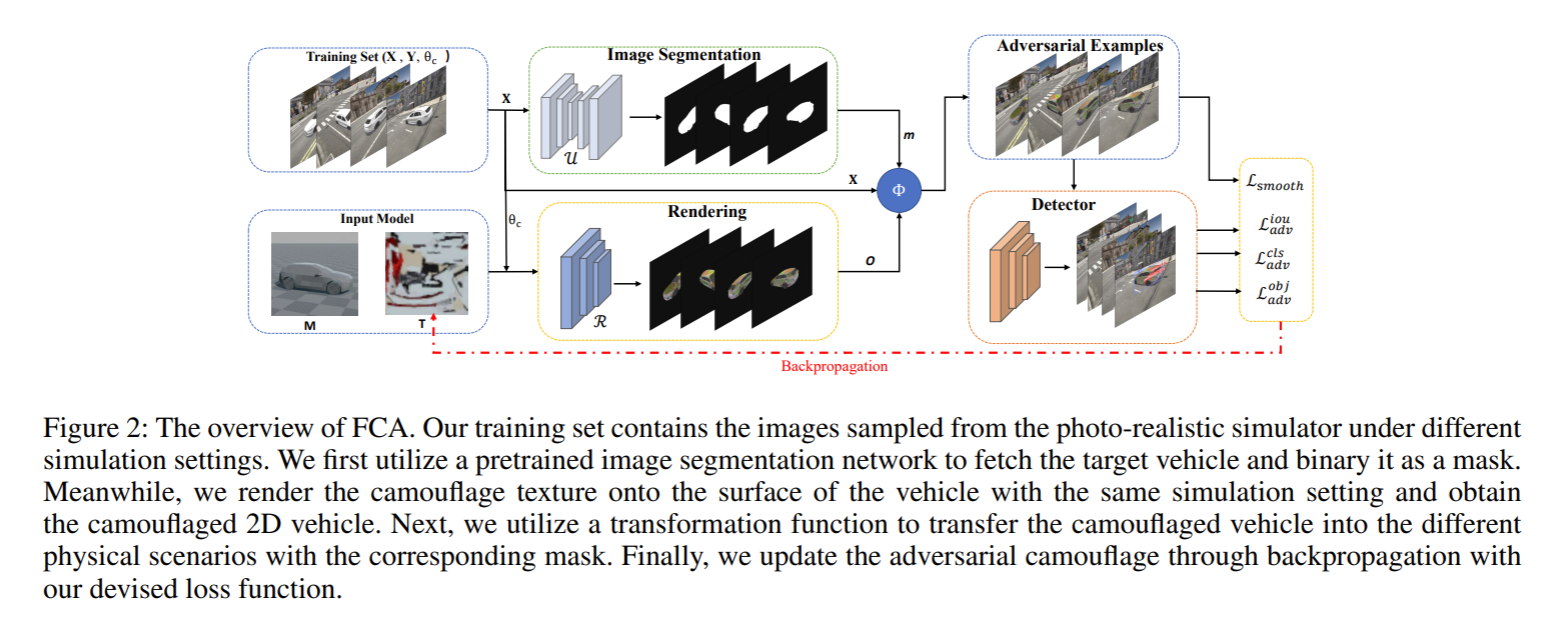

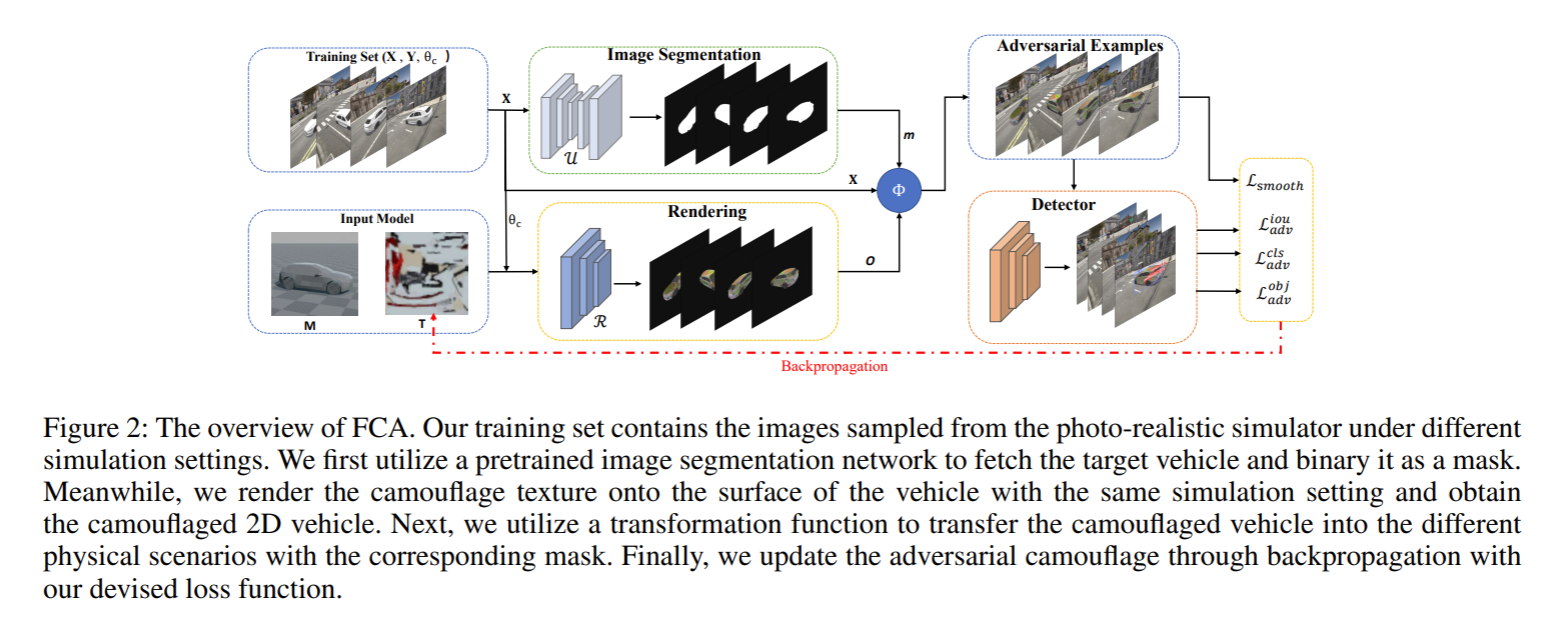

- Physical adversarial attacks in object detection have attracted increasing attention. However, most previous works focus on hiding the objects from the detector by generating an individual adversarial patch, which only covers the planar part of the vehicle’s surface and fails to attack the detector in physical scenarios for multi-view, long-distance and partially occluded objects. To bridge the gap between digital attacks and physical attacks, we exploit the full 3D vehicle surface to propose a robust Full-coverage Camouflage Attack (FCA) to fool detectors. Specifically, we first try rendering the non-planar
- camouflage texture over the full vehicle surface. To mimic the real-world environment conditions, we then introduce a transformation function to transfer the rendered camouflaged vehicle into a photo-realistic scenario. Finally, we design an efficient loss function to optimize the camouflage texture. Experiments show that the full-coverage camouflage attack can not only outperform state-of-the-art methods under various test cases but also generalize to different environments, vehicles, and object detectors.
+ Physical adversarial attacks in object detection have attracted increasing attention. However, most previous works focus on hiding the objects from the detector by generating an individual adversarial patch, which only covers the planar part of the vehicle’s surface and fails to attack the detector in physical scenarios for multi-view, long-distance and partially occluded objects. To bridge the gap between digital attacks and physical attacks, we exploit the full 3D vehicle surface to propose a robust Full-coverage Camouflage Attack (FCA) to fool detectors. Specifically, we first try rendering the nonplanar camouflage texture over the full vehicle surface. To mimic the real-world environment conditions, we then introduce a transformation function to transfer the rendered camouflaged vehicle into a photo realistic scenario. Finally, we design an efficient loss function to optimize the camouflage texture. Experiments show that the full-coverage camouflage attack can not only outperform state-of-the-art methods under various test cases but also generalize to different environments, vehicles, and object detectors. The code of FCA will be available at: https://idrl-lab.github.io/Full-coverage-camouflage-adversarial-attack/.

## Framework
