FCA: Learning a 3D Full-coverage Vehicle Camouflage for Multi-view Physical Adversarial Attack

Case study of FCA. The code can be found in FCA.

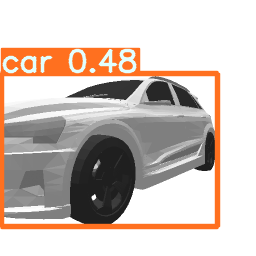

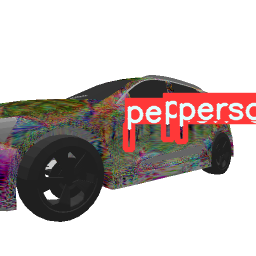

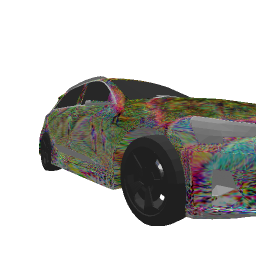

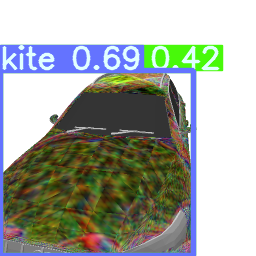

Cases of digital attack

Camera distance is 3

Camera distance is 5

Camera distance is 10

Cases of multi-view robustness

The first row is the original detection result. The second row is the camouflaged detection result.

The first row is the original detection result. The second row is the camouflaged detection result.

Ablation study

Different combinations of loss terms

As the figure shows, each loss term plays a different role in the attack. The camouflaged car generated with obj+smooth (we omit the smooth loss from the label and denote this setting as obj) is hardly hidden from the detector. The camouflaged car generated with iou successfully suppresses the detected bounding box over the car region, and the camouflaged car generated with cls makes the detector misclassify the car as another category.
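To make the ablation concrete, the following is a minimal sketch of how individual loss terms could be switched on and off. It is not the FCA implementation: the `Detection` record, `box_iou`, `adversarial_terms`, and `combined_loss` are hypothetical names, and defining each term as the maximum objectness, car-class score, or IoU over the detections is an assumption made only for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


@dataclass
class Detection:
    """Hypothetical detector output for one predicted box."""
    box: Box
    objectness: float  # confidence that the box contains any object
    car_score: float   # confidence that the object is a car


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def adversarial_terms(dets: List[Detection], car_box: Box) -> Dict[str, float]:
    """Assumed definitions of the obj, cls, and iou terms (lower is better for the attacker)."""
    if not dets:
        return {"obj": 0.0, "cls": 0.0, "iou": 0.0}
    return {
        "obj": max(d.objectness for d in dets),
        "cls": max(d.car_score for d in dets),
        "iou": max(box_iou(d.box, car_box) for d in dets),
    }


def combined_loss(terms: Dict[str, float], enabled: Iterable[str],
                  smooth: float = 0.0, smooth_weight: float = 0.0) -> float:
    """Sum only the enabled adversarial terms, plus an optional weighted smooth term."""
    return sum(terms[name] for name in enabled) + smooth_weight * smooth
```

With this layout, an ablation run only changes the `enabled` list, for example `["obj"]`, `["iou"]`, or `["iou", "cls"]`, while the rest of the optimization loop stays the same.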

Different initialization methods

| original | basic initialization | random initialization | zero initialization |
| --- | --- | --- | --- |
|  |  |  |  |