forked from PulseFocusPlatform/PulseFocusPlatform

Compare commits

89 Commits

master

...

ssschanged

| Author | SHA1 | Date |

|---|---|---|

|

|

e9acf85801 | |

|

|

effc3b88ed | |

|

|

8cc3e2106c | |

|

|

035682c89f | |

|

|

cdc4dfa4a3 | |

|

|

4e0a3ae508 | |

|

|

a0174c457d | |

|

|

0f7628505d | |

|

|

3301d7bfc5 | |

|

|

af2190a70d | |

|

|

67c6d677d8 | |

|

|

c56c9ff0b3 | |

|

|

2d165031b1 | |

|

|

8c5360639b | |

|

|

1f429cfec9 | |

|

|

1f1271da72 | |

|

|

f2eeb9a13e | |

|

|

dee4cb567e | |

|

|

69712f0430 | |

|

|

96076306c9 | |

|

|

7c59186c5c | |

|

|

0794c2ca75 | |

|

|

be9adcf9af | |

|

|

393d1772c0 | |

|

|

d0fd669b38 | |

|

|

3c1314596e | |

|

|

7149690cf0 | |

|

|

5c34a921f8 | |

|

|

29b081e37a | |

|

|

afb796b633 | |

|

|

5d5bb25b66 | |

|

|

61760c4557 | |

|

|

c65783dde3 | |

|

|

e39c7cff8c | |

|

|

c718158d5d | |

|

|

46906d6464 | |

|

|

6a07098983 | |

|

|

52ce53697d | |

|

|

112d7c2abe | |

|

|

03edd45541 | |

|

|

d53ef8f5cc | |

|

|

7ba40027ea | |

|

|

40218a3dfd | |

|

|

6e50becd13 | |

|

|

4941e82583 | |

|

|

4f2b6e65f1 | |

|

|

3952f45e49 | |

|

|

095d7bd7fe | |

|

|

af2a8522d3 | |

|

|

bc3cc3e978 | |

|

|

66812f8687 | |

|

|

e79bca4328 | |

|

|

2a0d071f40 | |

|

|

65f26d0285 | |

|

|

0a208ff95b | |

|

|

94a0f2580a | |

|

|

c1b7ebb919 | |

|

|

ca159b08d6 | |

|

|

f88a80b448 | |

|

|

554cc83b40 | |

|

|

b24be7713c | |

|

|

b7e9967556 | |

|

|

8caeb6944b | |

|

|

3279cc4c82 | |

|

|

9ca4085c6a | |

|

|

9bf14dae80 | |

|

|

40f2168f6f | |

|

|

a5673f134b | |

|

|

61370c1c72 | |

|

|

3acdb7fcc4 | |

|

|

0868c3ca26 | |

|

|

3e19acf86c | |

|

|

b0bebf0846 | |

|

|

5bcdee1fac | |

|

|

3b4ac9cae1 | |

|

|

ab8ffcf61a | |

|

|

d16b7893a5 | |

|

|

becb5ea553 | |

|

|

2ffcc98ac4 | |

|

|

128444f997 | |

|

|

efc062138b | |

|

|

1735bc6975 | |

|

|

1d613070e3 | |

|

|

7f873243cf | |

|

|

e66910c48b | |

|

|

914394829b | |

|

|

aca022de7a | |

|

|

63b8e759b1 | |

|

|

ccd2951448 |

|

|

@ -0,0 +1,3 @@

|

|||

# Default ignored files

|

||||

/shelf/

|

||||

/workspace.xml

|

||||

|

|

@ -0,0 +1,12 @@

|

|||

<?xml version="1.0" encoding="UTF-8"?>

|

||||

<module type="PYTHON_MODULE" version="4">

|

||||

<component name="NewModuleRootManager">

|

||||

<content url="file://$MODULE_DIR$" />

|

||||

<orderEntry type="jdk" jdkName="Python 3.7 (Focus)" jdkType="Python SDK" />

|

||||

<orderEntry type="sourceFolder" forTests="false" />

|

||||

</component>

|

||||

<component name="PyDocumentationSettings">

|

||||

<option name="format" value="GOOGLE" />

|

||||

<option name="myDocStringFormat" value="Google" />

|

||||

</component>

|

||||

</module>

|

||||

|

|

@ -0,0 +1,6 @@

|

|||

<component name="InspectionProjectProfileManager">

|

||||

<settings>

|

||||

<option name="USE_PROJECT_PROFILE" value="false" />

|

||||

<version value="1.0" />

|

||||

</settings>

|

||||

</component>

|

||||

|

|

@ -0,0 +1,4 @@

|

|||

<?xml version="1.0" encoding="UTF-8"?>

|

||||

<project version="4">

|

||||

<component name="ProjectRootManager" version="2" project-jdk-name="Python 3.7 (Focus)" project-jdk-type="Python SDK" />

|

||||

</project>

|

||||

|

|

@ -0,0 +1,8 @@

|

|||

<?xml version="1.0" encoding="UTF-8"?>

|

||||

<project version="4">

|

||||

<component name="ProjectModuleManager">

|

||||

<modules>

|

||||

<module fileurl="file://$PROJECT_DIR$/.idea/PulseFocusPlatform.iml" filepath="$PROJECT_DIR$/.idea/PulseFocusPlatform.iml" />

|

||||

</modules>

|

||||

</component>

|

||||

</project>

|

||||

|

|

@ -0,0 +1,6 @@

|

|||

<?xml version="1.0" encoding="UTF-8"?>

|

||||

<project version="4">

|

||||

<component name="VcsDirectoryMappings">

|

||||

<mapping directory="$PROJECT_DIR$" vcs="Git" />

|

||||

</component>

|

||||

</project>

|

||||

117

README.md

117

README.md

|

|

@ -5,8 +5,7 @@ Pulse Focus Platform脉冲聚焦是面向水底物体图像识别的实时检测

|

|||

脉冲聚焦软件设计了图片和视频两种数据输入下的多物体识别功能。针对图片数据,调用模型进行单张图片预测,随后在前端可视化输出多物体识别结果;针对视频流动态图像数据,首先对视频流数据进行分帧采样,获取采样图片,再针对采样图片进行多物体识别,将采样识别结果进行视频合成,然后在前端可视化输出视频流数据识别结果。为了视频流数据处理的高效性,设计了采样-识别-展示的多线程处理方式,可加快视频流数据处理。

|

||||

|

||||

软件界面简单,易学易用,包含参数的输入选择,程序的运行,算法结果的展示等,源代码公开,算法可修改。

|

||||

|

||||

开发人员:K. Wang、H.P. Yu、J. Li、Z.Y. Zhao、L.F. Zhang、G. Chen、H.T. Li、Z.Q. Wang、Y.G. Han

|

||||

开发人员:K. Wang、H.P. Yu、J. Li、H.T. Li、Z.Q. Wang、Z.Y. Zhao、L.F. Zhang、G. Chen

|

||||

|

||||

## 1. 开发环境配置

|

||||

运行以下命令:

|

||||

|

|

@ -22,119 +21,11 @@ conda env create -f create_env.yaml

|

|||

python main.py

|

||||

```

|

||||

|

||||

## 3. 软硬件运行平台

|

||||

|

||||

(1)配置要求

|

||||

|

||||

<table>

|

||||

<tr>

|

||||

<th>组件</th>

|

||||

<th>配置</th>

|

||||

<th>备注</th>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>系统 </td>

|

||||

<td>Windows 10 家庭中文版 20H2 64位</td>

|

||||

<td>扩展支持Linux和Mac系统</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>处理器</td>

|

||||

<td>处理器类型:

|

||||

酷睿i3兼容处理器或速度更快的处理器

|

||||

处理器速度:

|

||||

最低:1.0GHz

|

||||

建议:2.0GHz或更快

|

||||

</td>

|

||||

<td>不支持ARM、IA64等芯片处理器</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>内存</td>

|

||||

<td>RAM 16.0 GB (15.7 GB 可用)</td>

|

||||

<td></td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>显卡</td>

|

||||

<td>最小:核心显卡

|

||||

推荐:GTX1060或同类型显卡

|

||||

</td>

|

||||

<td></td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>硬盘</td>

|

||||

<td>500G</td>

|

||||

<td></td>

|

||||

</tr>

|

||||

<td>显示器</td>

|

||||

<td>3840×2160像素,高分屏</td>

|

||||

<td></td>

|

||||

</tr>

|

||||

<tr>

|

||||

</tr>

|

||||

<td>软件</td>

|

||||

<td>Anaconda3 2020及以上</td>

|

||||

<td>Python3.7及以上,需手动安装包</td>

|

||||

</tr>

|

||||

</table>

|

||||

(2)手动部署及运行

|

||||

|

||||

推荐的安装步骤如下:

|

||||

|

||||

安装Anaconda3-2020.02-Windows-x86_64或以上版本;

|

||||

手动安装pygame、pymunk、pyyaml、numpy、easydict和pyqt,安装方式推荐参考如下:

|

||||

```

|

||||

pip install -i https://pypi.tuna.tsinghua.edu.cn/simple pygame==2.0.1

|

||||

```

|

||||

将软件模块文件夹拷贝到电脑中(以D盘为例,路径为D:\island-multi_ships)

|

||||

|

||||

## 4. 软件详细介绍

|

||||

软件总体开发系统架构图如下所示。

|

||||

|

||||

|

||||

(1)界面设计

|

||||

平台界面设计如上图所示,界面各组件功能设计如下:

|

||||

|

||||

|

||||

|

||||

* 静态图像导入:用于选择需要进行预测的单张图像,可支持jpg,png,jpeg等格式图像,选择图像后,会在下方界面进行展示。

|

||||

* 动态图像导入:用于选择需要进行预测的单个视频,可支持pm4等格式视频,选择视频后,会在下方界面进行展示。

|

||||

* 信息导出:用于在预测完成后,将预测后的照片,视频导出到具体文件夹下。

|

||||

* 特征选择:由于挑选相关特征。

|

||||

* 预处理方法:由于选择相关预处理方法。

|

||||

* 识别算法:用于选择预测时的所需算法,目前支持YOLO与RCNN两种模型算法。

|

||||

* GPU加速:选择是否使用GPU进行预测加速,对视频预测加速效果明显。

|

||||

* 识别:当相关配置完成后,点击识别选项,会进行预测处理,并将预测后的视频或图像在下方显示。

|

||||

* 训练:目前考虑到GPU等资源限制,未完整开放。

|

||||

* 信息显示:在界面右下角显示类别flv,gx,mbw,object的识别目标个数。

|

||||

|

||||

2)主要功能设计

|

||||

|

||||

设计了图片和视频两种数据输入的多目标识别功能。针对图片数据,调用模型进行单张图片预测,随后在前端可视化输出多目标识别结果;针对视频流动态图像数据,首先对视频流数据进行分帧采样,获取采样图片,再针对采样图片进行多目标识别,将采样识别结果进行视频合成,然后在前段可视化输出视频流数据识别结果。为求视频流数据处理的高效性,设计了采样-识别-展示的多线性处理方式,可加快视频流数据处理。

|

||||

* 侧扫声呐图像多目标识别功能

|

||||

* 侧扫声呐视频多目标识别功能

|

||||

## 5. 软件使用结果

|

||||

Faster-RCNN模型在四种目标物图片上的识别验证结果如下所示:

|

||||

|

||||

|

||||

|

||||

|

||||

YOLOV3模型在四种目标物图片上的识别验证结果如下所示:

|

||||

|

||||

|

||||

|

||||

|

||||

PP-YOLO-BOT模型在四种目标物图片上的识别验证结果如下所示:

|

||||

|

||||

|

||||

|

||||

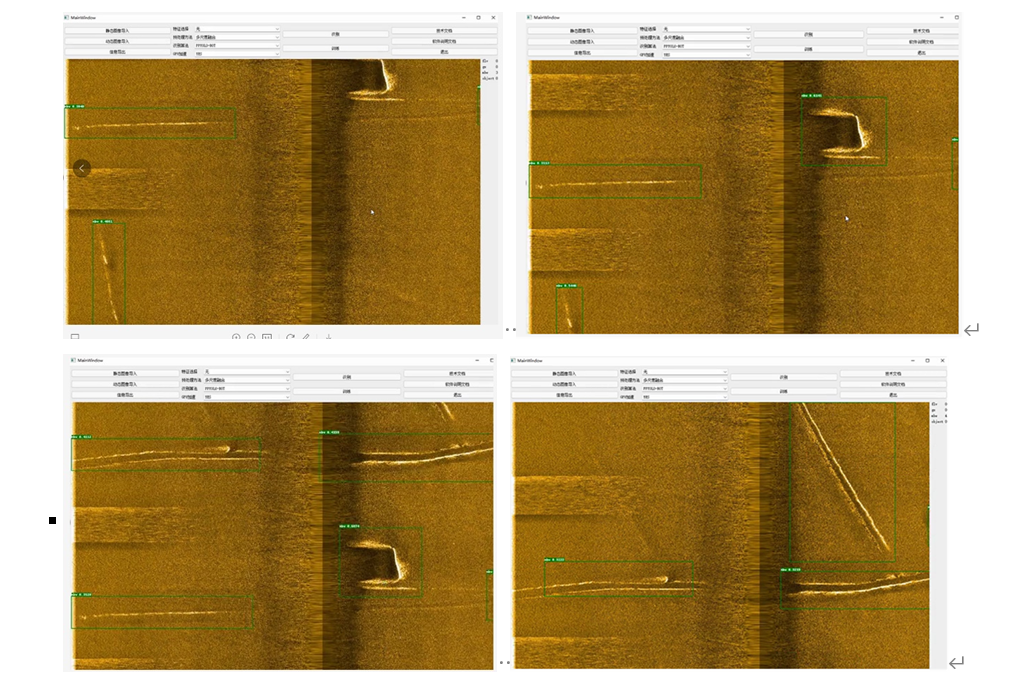

调用PP-YOLO-BOT模型对视频数据进行识别验证,结果如下截图所示:

|

||||

|

||||

|

||||

|

||||

## 6. 其他说明

|

||||

* 使用GPU版本

|

||||

## 3. 一些说明

|

||||

1. 使用GPU版本

|

||||

|

||||

参考百度飞桨paddle官方网站安装

|

||||

|

||||

[安装链接](https://www.paddlepaddle.org.cn/install/quick?docurl=/documentation/docs/zh/install/pip/windows-pip.html)

|

||||

|

||||

* 模型文件全部更新在inference_model中,pic为测试图片

|

||||

2. 模型文件全部更新在inference_model中,pic为测试图片

|

||||

|

|

|

|||

28

SSS_win.py

28

SSS_win.py

|

|

@ -1,11 +1,9 @@

|

|||

# -*- coding: utf-8 -*-

|

||||

|

||||

# Form implementation generated from reading ui file 'SSS_win.ui'

|

||||

#

|

||||

# Created by: PyQt5 UI code generator 5.15.4

|

||||

#

|

||||

# WARNING: Any manual changes made to this file will be lost when pyuic5 is

|

||||

# run again. Do not edit this file unless you know what you are doing.

|

||||

|

||||

|

||||

|

||||

|

||||

from PyQt5 import QtCore, QtGui, QtWidgets

|

||||

|

|

@ -18,18 +16,18 @@ class Ui_MainWindow(object):

|

|||

self.centralwidget = QtWidgets.QWidget(MainWindow)

|

||||

self.centralwidget.setObjectName("centralwidget")

|

||||

self.verticalLayout_5 = QtWidgets.QVBoxLayout(self.centralwidget)

|

||||

self.verticalLayout_5.setObjectName("verticalLayout_5")

|

||||

self.verticalLayout_5.setObjectName("verticalLayout_5")#垂直布局

|

||||

self.verticalLayout = QtWidgets.QVBoxLayout()

|

||||

self.verticalLayout.setObjectName("verticalLayout")

|

||||

self.verticalLayout.setObjectName("verticalLayout")#垂直布局

|

||||

self.horizontalLayout = QtWidgets.QHBoxLayout()

|

||||

self.horizontalLayout.setObjectName("horizontalLayout")

|

||||

self.horizontalLayout.setObjectName("horizontalLayout")#水平布局

|

||||

self.verticalLayout_2 = QtWidgets.QVBoxLayout()

|

||||

self.verticalLayout_2.setObjectName("verticalLayout_2")

|

||||

self.verticalLayout_2.setObjectName("verticalLayout_2")#垂直布局

|

||||

self.tupiandiaoru = QtWidgets.QPushButton(self.centralwidget)

|

||||

self.tupiandiaoru.setObjectName("tupiandiaoru")

|

||||

self.tupiandiaoru.setObjectName("tupiandiaoru")#图片导入

|

||||

self.verticalLayout_2.addWidget(self.tupiandiaoru)

|

||||

self.shipindaoru = QtWidgets.QPushButton(self.centralwidget)

|

||||

self.shipindaoru.setObjectName("shipindaoru")

|

||||

self.shipindaoru.setObjectName("shipindaoru")#视频导入

|

||||

self.verticalLayout_2.addWidget(self.shipindaoru)

|

||||

self.pushButton_xxdaochu = QtWidgets.QPushButton(self.centralwidget)

|

||||

self.pushButton_xxdaochu.setObjectName("pushButton_xxdaochu")

|

||||

|

|

@ -147,13 +145,13 @@ class Ui_MainWindow(object):

|

|||

self.xunlian.clicked.connect(MainWindow.press_xunlian)

|

||||

self.pushButton_tuichu.clicked.connect(MainWindow.exit)

|

||||

self.shipindaoru.clicked.connect(MainWindow.press_movie)

|

||||

self.comboBox_sbsuanfa.activated.connect(MainWindow.moxingxuanze)

|

||||

self.comboBox_GPU.activated.connect(MainWindow.gpu_use)

|

||||

self.comboBox_sbsuanfa.activated['QString'].connect(MainWindow.moxingxuanze)

|

||||

self.comboBox_GPU.activated['QString'].connect(MainWindow.gpu_use)

|

||||

QtCore.QMetaObject.connectSlotsByName(MainWindow)

|

||||

|

||||

def retranslateUi(self, MainWindow):

|

||||

_translate = QtCore.QCoreApplication.translate

|

||||

MainWindow.setWindowTitle(_translate("MainWindow", "脉冲聚焦"))

|

||||

MainWindow.setWindowTitle(_translate("MainWindow", "MainWindow"))

|

||||

self.tupiandiaoru.setText(_translate("MainWindow", "静态图像导入"))

|

||||

self.shipindaoru.setText(_translate("MainWindow", "动态图像导入"))

|

||||

self.pushButton_xxdaochu.setText(_translate("MainWindow", "信息导出"))

|

||||

|

|

@ -162,7 +160,7 @@ class Ui_MainWindow(object):

|

|||

self.comboBox_yclfangfa.setItemText(0, _translate("MainWindow", "多尺度融合"))

|

||||

self.comboBox_yclfangfa.setItemText(1, _translate("MainWindow", "图像增广"))

|

||||

self.comboBox_yclfangfa.setItemText(2, _translate("MainWindow", "图像重塑"))

|

||||

self.label_3.setText(_translate("MainWindow", "聚焦算法"))

|

||||

self.label_3.setText(_translate("MainWindow", "识别算法"))

|

||||

self.comboBox_sbsuanfa.setCurrentText(_translate("MainWindow", "PPYOLO-BOT"))

|

||||

self.comboBox_sbsuanfa.setItemText(0, _translate("MainWindow", "PPYOLO-BOT"))

|

||||

self.comboBox_sbsuanfa.setItemText(1, _translate("MainWindow", "YOLOV3"))

|

||||

|

|

@ -172,7 +170,7 @@ class Ui_MainWindow(object):

|

|||

self.comboBox_GPU.setItemText(0, _translate("MainWindow", "YES"))

|

||||

self.comboBox_GPU.setItemText(1, _translate("MainWindow", "NO"))

|

||||

self.label.setText(_translate("MainWindow", "特征选择"))

|

||||

self.shibie.setText(_translate("MainWindow", "聚焦"))

|

||||

self.shibie.setText(_translate("MainWindow", "识别"))

|

||||

self.xunlian.setText(_translate("MainWindow", "训练"))

|

||||

self.pushButton_jswendang.setText(_translate("MainWindow", "技术文档"))

|

||||

self.pushButton_rjwendang.setText(_translate("MainWindow", "软件说明文档"))

|

||||

|

|

|

|||

Binary file not shown.

7

main.py

7

main.py

|

|

@ -68,7 +68,6 @@ class mywindow(QtWidgets.QMainWindow, Ui_MainWindow):

|

|||

print('cd {}'.format(self.path1))

|

||||

print(

|

||||

'python deploy/python/infer.py --model_dir={} --video_file={} --use_gpu=True'.format(self.model_path, self.Video_fname))

|

||||

# 调用GPU

|

||||

os.system('cd {}'.format(self.path1))

|

||||

os.system(

|

||||

'python deploy/python/infer.py --model_dir={} --image_dir={} --output_dir=./video_output/{} --threshold=0.3 --use_gpu=True'.format(

|

||||

|

|

@ -76,10 +75,8 @@ class mywindow(QtWidgets.QMainWindow, Ui_MainWindow):

|

|||

# print(self.path1+'video_output/'+self.Video_fname.split('/')[-1])

|

||||

# self.cap = cv2.VideoCapture(

|

||||

# self.path1+'video_output/'+self.Video_fname.split('/')[-1])

|

||||

# self.framRate = self.cap.get(cv2.CAP_PROP_FPS)

|

||||

|

||||

# self.framRate = self.cap.get(cv2.CAP_PROP_FPS

|

||||

# th = threading.Thread(target=self.Display)

|

||||

# th.start()

|

||||

|

||||

def Images_Display(self):

|

||||

img_list=[]

|

||||

|

|

@ -138,7 +135,6 @@ class mywindow(QtWidgets.QMainWindow, Ui_MainWindow):

|

|||

# print(xmlFile)

|

||||

# self.label_movie.setPixmap(QtGui.QPixmap(

|

||||

# self.video_image_path+'/'+xmlFile))

|

||||

# time.sleep(0.5)

|

||||

|

||||

def Sorted(self,files):

|

||||

files=[int(i.split('.')[0]) for i in files]

|

||||

|

|

@ -236,7 +232,6 @@ class mywindow(QtWidgets.QMainWindow, Ui_MainWindow):

|

|||

th2 = threading.Thread(target=self.Split)

|

||||

th2.start()

|

||||

# self.th = threading.Thread(target=self.Display)

|

||||

# self.th.start()

|

||||

|

||||

|

||||

|

||||

|

|

|

|||

BIN

pic1/1.png

BIN

pic1/1.png

Binary file not shown.

|

Before Width: | Height: | Size: 26 KiB |

BIN

pic1/2.png

BIN

pic1/2.png

Binary file not shown.

|

Before Width: | Height: | Size: 19 KiB |

BIN

pic1/3.png

BIN

pic1/3.png

Binary file not shown.

|

Before Width: | Height: | Size: 360 KiB |

BIN

pic1/4.png

BIN

pic1/4.png

Binary file not shown.

|

Before Width: | Height: | Size: 350 KiB |

BIN

pic1/5.png

BIN

pic1/5.png

Binary file not shown.

|

Before Width: | Height: | Size: 372 KiB |

BIN

pic1/6.png

BIN

pic1/6.png

Binary file not shown.

|

Before Width: | Height: | Size: 942 KiB |

15

setup.py

15

setup.py

|

|

@ -1,16 +1,12 @@

|

|||

# Copyright (c) 2020 PaddlePaddle Authors. All Rights Reserved.

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||

# you may not use this file except in compliance with the License.

|

||||

# you may not use this file except in compliance with

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

# Unless required

|

||||

|

||||

|

||||

|

||||

|

||||

import os.path as osp

|

||||

import glob

|

||||

|

|

@ -37,7 +33,6 @@ def package_model_zoo():

|

|||

|

||||

valid_cfgs = []

|

||||

for cfg in cfgs:

|

||||

# exclude dataset base config

|

||||

if osp.split(osp.split(cfg)[0])[1] not in ['datasets']:

|

||||

valid_cfgs.append(cfg)

|

||||

model_names = [

|

||||

|

|

|

|||

Loading…

Reference in New Issue