forked from p94628173/idrlnet

docs: Init commit

parent b470be21ca

commit 506c852c5e

|

|

@ -0,0 +1,129 @@

|

||||||

|

# Byte-compiled / optimized / DLL files

|

||||||

|

__pycache__/

|

||||||

|

*.py[cod]

|

||||||

|

*$py.class

|

||||||

|

|

||||||

|

# C extensions

|

||||||

|

*.so

|

||||||

|

|

||||||

|

# Distribution / packaging

|

||||||

|

.Python

|

||||||

|

build/

|

||||||

|

develop-eggs/

|

||||||

|

dist/

|

||||||

|

downloads/

|

||||||

|

eggs/

|

||||||

|

.eggs/

|

||||||

|

lib/

|

||||||

|

lib64/

|

||||||

|

parts/

|

||||||

|

sdist/

|

||||||

|

var/

|

||||||

|

wheels/

|

||||||

|

*.egg-info/

|

||||||

|

.installed.cfg

|

||||||

|

*.egg

|

||||||

|

MANIFEST

|

||||||

|

|

||||||

|

# PyInstaller

|

||||||

|

# Usually these files are written by a python script from a template

|

||||||

|

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

||||||

|

*.manifest

|

||||||

|

*.spec

|

||||||

|

|

||||||

|

# Installer logs

|

||||||

|

pip-log.txt

|

||||||

|

pip-delete-this-directory.txt

|

||||||

|

|

||||||

|

# Unit test / coverage reports

|

||||||

|

htmlcov/

|

||||||

|

.tox/

|

||||||

|

.coverage

|

||||||

|

.coverage.*

|

||||||

|

.cache

|

||||||

|

nosetests.xml

|

||||||

|

coverage.xml

|

||||||

|

*.cover

|

||||||

|

.hypothesis/

|

||||||

|

.pytest_cache/

|

||||||

|

|

||||||

|

# Translations

|

||||||

|

*.mo

|

||||||

|

*.pot

|

||||||

|

|

||||||

|

# Django stuff:

|

||||||

|

*.log

|

||||||

|

local_settings.py

|

||||||

|

db.sqlite3

|

||||||

|

|

||||||

|

# Flask stuff:

|

||||||

|

instance/

|

||||||

|

.webassets-cache

|

||||||

|

|

||||||

|

# Scrapy stuff:

|

||||||

|

.scrapy

|

||||||

|

|

||||||

|

# Sphinx documentation

|

||||||

|

docs/_build/

|

||||||

|

|

||||||

|

# Dash docset

|

||||||

|

docs/dash/

|

||||||

|

|

||||||

|

# PyBuilder

|

||||||

|

target/

|

||||||

|

|

||||||

|

# Jupyter Notebook

|

||||||

|

.ipynb_checkpoints

|

||||||

|

|

||||||

|

# pyenv

|

||||||

|

.python-version

|

||||||

|

|

||||||

|

# celery beat schedule file

|

||||||

|

celerybeat-schedule

|

||||||

|

|

||||||

|

# SageMath parsed files

|

||||||

|

*.sage.py

|

||||||

|

|

||||||

|

# Environments

|

||||||

|

.env

|

||||||

|

.venv

|

||||||

|

env/

|

||||||

|

venv/

|

||||||

|

ENV/

|

||||||

|

env.bak/

|

||||||

|

venv.bak/

|

||||||

|

include/

|

||||||

|

|

||||||

|

# Spyder project settings

|

||||||

|

.spyderproject

|

||||||

|

.spyproject

|

||||||

|

|

||||||

|

# Rope project settings

|

||||||

|

.ropeproject

|

||||||

|

|

||||||

|

# mkdocs documentation

|

||||||

|

/site

|

||||||

|

|

||||||

|

# mypy

|

||||||

|

.mypy_cache/

|

||||||

|

|

||||||

|

# notebooks

|

||||||

|

notebooks/

|

||||||

|

|

||||||

|

# PyCharm related

|

||||||

|

.idea/

|

||||||

|

|

||||||

|

# VSCode

|

||||||

|

.vscode/

|

||||||

|

/share/

|

||||||

|

/etc/

|

||||||

|

/bin/

|

||||||

|

|

||||||

|

# cache

|

||||||

|

*.vtu

|

||||||

|

*.csv

|

||||||

|

*.npz

|

||||||

|

*.ckpt

|

||||||

|

events.out*

|

||||||

|

*.png

|

||||||

|

*.mat

|

||||||

|

|

@ -0,0 +1,27 @@

|

||||||

|

FROM pytorch/pytorch:1.7.0-cuda11.0-cudnn8-devel

|

||||||

|

RUN apt-get update && apt-get install -y openssh-server nfs-common && \

|

||||||

|

echo "PermitRootLogin yes" >> /etc/ssh/sshd_config && \

|

||||||

|

(echo '123456'; echo '123456') | passwd root

|

||||||

|

|

||||||

|

RUN pip install -i https://pypi.mirrors.ustc.edu.cn/simple/ transforms3d \

|

||||||

|

typing \

|

||||||

|

numpy \

|

||||||

|

keras \

|

||||||

|

h5py \

|

||||||

|

pandas \

|

||||||

|

zipfile36 \

|

||||||

|

scikit-optimize \

|

||||||

|

pytest \

|

||||||

|

sphinx \

|

||||||

|

matplotlib \

|

||||||

|

myst_parser \

|

||||||

|

sphinx_rtd_theme==0.5.2 \

|

||||||

|

tensorboard==2.4.1 \

|

||||||

|

sympy==1.5.1 \

|

||||||

|

pyevtk==1.1.1 \

|

||||||

|

flask==1.1.2 \

|

||||||

|

requests==2.25.0 \

|

||||||

|

networkx==2.5.1

|

||||||

|

COPY . /idrlnet/

|

||||||

|

RUN cd /idrlnet && pip install -e .

|

||||||

|

ENTRYPOINT service ssh start && bash

|

||||||

|

|

@ -0,0 +1,202 @@

|

||||||

|

|

||||||

|

Apache License

|

||||||

|

Version 2.0, January 2004

|

||||||

|

http://www.apache.org/licenses/

|

||||||

|

|

||||||

|

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

||||||

|

|

||||||

|

1. Definitions.

|

||||||

|

|

||||||

|

"License" shall mean the terms and conditions for use, reproduction,

|

||||||

|

and distribution as defined by Sections 1 through 9 of this document.

|

||||||

|

|

||||||

|

"Licensor" shall mean the copyright owner or entity authorized by

|

||||||

|

the copyright owner that is granting the License.

|

||||||

|

|

||||||

|

"Legal Entity" shall mean the union of the acting entity and all

|

||||||

|

other entities that control, are controlled by, or are under common

|

||||||

|

control with that entity. For the purposes of this definition,

|

||||||

|

"control" means (i) the power, direct or indirect, to cause the

|

||||||

|

direction or management of such entity, whether by contract or

|

||||||

|

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

||||||

|

outstanding shares, or (iii) beneficial ownership of such entity.

|

||||||

|

|

||||||

|

"You" (or "Your") shall mean an individual or Legal Entity

|

||||||

|

exercising permissions granted by this License.

|

||||||

|

|

||||||

|

"Source" form shall mean the preferred form for making modifications,

|

||||||

|

including but not limited to software source code, documentation

|

||||||

|

source, and configuration files.

|

||||||

|

|

||||||

|

"Object" form shall mean any form resulting from mechanical

|

||||||

|

transformation or translation of a Source form, including but

|

||||||

|

not limited to compiled object code, generated documentation,

|

||||||

|

and conversions to other media types.

|

||||||

|

|

||||||

|

"Work" shall mean the work of authorship, whether in Source or

|

||||||

|

Object form, made available under the License, as indicated by a

|

||||||

|

copyright notice that is included in or attached to the work

|

||||||

|

(an example is provided in the Appendix below).

|

||||||

|

|

||||||

|

"Derivative Works" shall mean any work, whether in Source or Object

|

||||||

|

form, that is based on (or derived from) the Work and for which the

|

||||||

|

editorial revisions, annotations, elaborations, or other modifications

|

||||||

|

represent, as a whole, an original work of authorship. For the purposes

|

||||||

|

of this License, Derivative Works shall not include works that remain

|

||||||

|

separable from, or merely link (or bind by name) to the interfaces of,

|

||||||

|

the Work and Derivative Works thereof.

|

||||||

|

|

||||||

|

"Contribution" shall mean any work of authorship, including

|

||||||

|

the original version of the Work and any modifications or additions

|

||||||

|

to that Work or Derivative Works thereof, that is intentionally

|

||||||

|

submitted to Licensor for inclusion in the Work by the copyright owner

|

||||||

|

or by an individual or Legal Entity authorized to submit on behalf of

|

||||||

|

the copyright owner. For the purposes of this definition, "submitted"

|

||||||

|

means any form of electronic, verbal, or written communication sent

|

||||||

|

to the Licensor or its representatives, including but not limited to

|

||||||

|

communication on electronic mailing lists, source code control systems,

|

||||||

|

and issue tracking systems that are managed by, or on behalf of, the

|

||||||

|

Licensor for the purpose of discussing and improving the Work, but

|

||||||

|

excluding communication that is conspicuously marked or otherwise

|

||||||

|

designated in writing by the copyright owner as "Not a Contribution."

|

||||||

|

|

||||||

|

"Contributor" shall mean Licensor and any individual or Legal Entity

|

||||||

|

on behalf of whom a Contribution has been received by Licensor and

|

||||||

|

subsequently incorporated within the Work.

|

||||||

|

|

||||||

|

2. Grant of Copyright License. Subject to the terms and conditions of

|

||||||

|

this License, each Contributor hereby grants to You a perpetual,

|

||||||

|

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

||||||

|

copyright license to reproduce, prepare Derivative Works of,

|

||||||

|

publicly display, publicly perform, sublicense, and distribute the

|

||||||

|

Work and such Derivative Works in Source or Object form.

|

||||||

|

|

||||||

|

3. Grant of Patent License. Subject to the terms and conditions of

|

||||||

|

this License, each Contributor hereby grants to You a perpetual,

|

||||||

|

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

||||||

|

(except as stated in this section) patent license to make, have made,

|

||||||

|

use, offer to sell, sell, import, and otherwise transfer the Work,

|

||||||

|

where such license applies only to those patent claims licensable

|

||||||

|

by such Contributor that are necessarily infringed by their

|

||||||

|

Contribution(s) alone or by combination of their Contribution(s)

|

||||||

|

with the Work to which such Contribution(s) was submitted. If You

|

||||||

|

institute patent litigation against any entity (including a

|

||||||

|

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

||||||

|

or a Contribution incorporated within the Work constitutes direct

|

||||||

|

or contributory patent infringement, then any patent licenses

|

||||||

|

granted to You under this License for that Work shall terminate

|

||||||

|

as of the date such litigation is filed.

|

||||||

|

|

||||||

|

4. Redistribution. You may reproduce and distribute copies of the

|

||||||

|

Work or Derivative Works thereof in any medium, with or without

|

||||||

|

modifications, and in Source or Object form, provided that You

|

||||||

|

meet the following conditions:

|

||||||

|

|

||||||

|

(a) You must give any other recipients of the Work or

|

||||||

|

Derivative Works a copy of this License; and

|

||||||

|

|

||||||

|

(b) You must cause any modified files to carry prominent notices

|

||||||

|

stating that You changed the files; and

|

||||||

|

|

||||||

|

(c) You must retain, in the Source form of any Derivative Works

|

||||||

|

that You distribute, all copyright, patent, trademark, and

|

||||||

|

attribution notices from the Source form of the Work,

|

||||||

|

excluding those notices that do not pertain to any part of

|

||||||

|

the Derivative Works; and

|

||||||

|

|

||||||

|

(d) If the Work includes a "NOTICE" text file as part of its

|

||||||

|

distribution, then any Derivative Works that You distribute must

|

||||||

|

include a readable copy of the attribution notices contained

|

||||||

|

within such NOTICE file, excluding those notices that do not

|

||||||

|

pertain to any part of the Derivative Works, in at least one

|

||||||

|

of the following places: within a NOTICE text file distributed

|

||||||

|

as part of the Derivative Works; within the Source form or

|

||||||

|

documentation, if provided along with the Derivative Works; or,

|

||||||

|

within a display generated by the Derivative Works, if and

|

||||||

|

wherever such third-party notices normally appear. The contents

|

||||||

|

of the NOTICE file are for informational purposes only and

|

||||||

|

do not modify the License. You may add Your own attribution

|

||||||

|

notices within Derivative Works that You distribute, alongside

|

||||||

|

or as an addendum to the NOTICE text from the Work, provided

|

||||||

|

that such additional attribution notices cannot be construed

|

||||||

|

as modifying the License.

|

||||||

|

|

||||||

|

You may add Your own copyright statement to Your modifications and

|

||||||

|

may provide additional or different license terms and conditions

|

||||||

|

for use, reproduction, or distribution of Your modifications, or

|

||||||

|

for any such Derivative Works as a whole, provided Your use,

|

||||||

|

reproduction, and distribution of the Work otherwise complies with

|

||||||

|

the conditions stated in this License.

|

||||||

|

|

||||||

|

5. Submission of Contributions. Unless You explicitly state otherwise,

|

||||||

|

any Contribution intentionally submitted for inclusion in the Work

|

||||||

|

by You to the Licensor shall be under the terms and conditions of

|

||||||

|

this License, without any additional terms or conditions.

|

||||||

|

Notwithstanding the above, nothing herein shall supersede or modify

|

||||||

|

the terms of any separate license agreement you may have executed

|

||||||

|

with Licensor regarding such Contributions.

|

||||||

|

|

||||||

|

6. Trademarks. This License does not grant permission to use the trade

|

||||||

|

names, trademarks, service marks, or product names of the Licensor,

|

||||||

|

except as required for reasonable and customary use in describing the

|

||||||

|

origin of the Work and reproducing the content of the NOTICE file.

|

||||||

|

|

||||||

|

7. Disclaimer of Warranty. Unless required by applicable law or

|

||||||

|

agreed to in writing, Licensor provides the Work (and each

|

||||||

|

Contributor provides its Contributions) on an "AS IS" BASIS,

|

||||||

|

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

||||||

|

implied, including, without limitation, any warranties or conditions

|

||||||

|

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

||||||

|

PARTICULAR PURPOSE. You are solely responsible for determining the

|

||||||

|

appropriateness of using or redistributing the Work and assume any

|

||||||

|

risks associated with Your exercise of permissions under this License.

|

||||||

|

|

||||||

|

8. Limitation of Liability. In no event and under no legal theory,

|

||||||

|

whether in tort (including negligence), contract, or otherwise,

|

||||||

|

unless required by applicable law (such as deliberate and grossly

|

||||||

|

negligent acts) or agreed to in writing, shall any Contributor be

|

||||||

|

liable to You for damages, including any direct, indirect, special,

|

||||||

|

incidental, or consequential damages of any character arising as a

|

||||||

|

result of this License or out of the use or inability to use the

|

||||||

|

Work (including but not limited to damages for loss of goodwill,

|

||||||

|

work stoppage, computer failure or malfunction, or any and all

|

||||||

|

other commercial damages or losses), even if such Contributor

|

||||||

|

has been advised of the possibility of such damages.

|

||||||

|

|

||||||

|

9. Accepting Warranty or Additional Liability. While redistributing

|

||||||

|

the Work or Derivative Works thereof, You may choose to offer,

|

||||||

|

and charge a fee for, acceptance of support, warranty, indemnity,

|

||||||

|

or other liability obligations and/or rights consistent with this

|

||||||

|

License. However, in accepting such obligations, You may act only

|

||||||

|

on Your own behalf and on Your sole responsibility, not on behalf

|

||||||

|

of any other Contributor, and only if You agree to indemnify,

|

||||||

|

defend, and hold each Contributor harmless for any liability

|

||||||

|

incurred by, or claims asserted against, such Contributor by reason

|

||||||

|

of your accepting any such warranty or additional liability.

|

||||||

|

|

||||||

|

END OF TERMS AND CONDITIONS

|

||||||

|

|

||||||

|

APPENDIX: How to apply the Apache License to your work.

|

||||||

|

|

||||||

|

To apply the Apache License to your work, attach the following

|

||||||

|

boilerplate notice, with the fields enclosed by brackets "[]"

|

||||||

|

replaced with your own identifying information. (Don't include

|

||||||

|

the brackets!) The text should be enclosed in the appropriate

|

||||||

|

comment syntax for the file format. We also recommend that a

|

||||||

|

file or class name and description of purpose be included on the

|

||||||

|

same "printed page" as the copyright notice for easier

|

||||||

|

identification within third-party archives.

|

||||||

|

|

||||||

|

Copyright 2021 idrl.site

|

||||||

|

|

||||||

|

Licensed under the Apache License, Version 2.0 (the "License");

|

||||||

|

you may not use this file except in compliance with the License.

|

||||||

|

You may obtain a copy of the License at

|

||||||

|

|

||||||

|

http://www.apache.org/licenses/LICENSE-2.0

|

||||||

|

|

||||||

|

Unless required by applicable law or agreed to in writing, software

|

||||||

|

distributed under the License is distributed on an "AS IS" BASIS,

|

||||||

|

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||||

|

See the License for the specific language governing permissions and

|

||||||

|

limitations under the License.

|

||||||

|

|

@ -0,0 +1,4 @@

|

||||||

|

include LICENSE

|

||||||

|

include README.md

|

||||||

|

graft docs

|

||||||

|

graft examples

|

||||||

|

|

@ -0,0 +1,82 @@

|

||||||

|

[Apache License 2.0](https://www.apache.org/licenses/LICENSE-2.0)

|

||||||

|

[Python](https://python.org)

|

||||||

|

|

||||||

|

## Installation

|

||||||

|

|

||||||

|

### Docker

|

||||||

|

|

||||||

|

```bash

|

||||||

|

git clone https://git.idrl.site/pengwei/idrlnet_public

|

||||||

|

cd idrlnet_public

|

||||||

|

docker build . -t idrlnet_dev

|

||||||

|

docker run -it -p [EXPOSED_SSH_PORT]:22 -v [CURRENT_WORK_DIR]:/root/pinnnet idrlnet_dev:latest bash

|

||||||

|

```

|

||||||

|

|

||||||

|

### Anaconda

|

||||||

|

|

||||||

|

```bash

|

||||||

|

git clone https://git.idrl.site/pengwei/idrlnet_public

|

||||||

|

cd idrlnet_public

|

||||||

|

conda create -n idrlnet_dev python=3.8 -y

|

||||||

|

conda activate idrlnet_dev

|

||||||

|

pip install -r requirements.txt

|

||||||

|

pip install -e .

|

||||||

|

```

|

||||||

|

|

||||||

|

# IDRLnet

|

||||||

|

|

||||||

|

IDRLnet is a machine learning library on top of [PyTorch](https://pytorch.org/). Use IDRLnet if you need a machine

|

||||||

|

learning library that solves both forward and inverse partial differential equations (PDEs) via physics-informed neural

|

||||||

|

networks (PINN). IDRLnet is a flexible framework inspired by [Nvidia Simnet](https://developer.nvidia.com/simnet).

|

||||||

|

|

||||||

|

## Features

|

||||||

|

|

||||||

|

IDRLnet supports

|

||||||

|

|

||||||

|

- complex domain geometries without mesh generation. Provided geometries include interval, triangle, rectangle, polygon,

|

||||||

|

circle, sphere... Other geometries can be constructed using three boolean operations: union, difference, and

|

||||||

|

intersection;

|

||||||

|

|

||||||

|

- sampling in the interior of the defined geometry or on the boundary with given conditions;

|

||||||

|

|

||||||

|

- structured user code. Data sources, operations, and constraints are all represented by ``Node``. The graph
  is constructed automatically from the label symbols of each node. Because no explicit graph construction is
  required, users can model problems more naturally;

|

||||||

|

|

||||||

|

- solving variational minimization problems;

|

||||||

|

|

||||||

|

- solving integro-differential equations;

|

||||||

|

|

||||||

|

- adaptive resampling;

|

||||||

|

|

||||||

|

- recovering unknown parameters of PDEs from noisy measurement data.
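
The geometry and sampling items above can be illustrated with signed distance functions, where union, intersection, and difference reduce to `min` and `max`. This is a minimal sketch in plain Python and not IDRLnet's API: the names `circle`, `rectangle`, `union`, `intersection`, `difference`, `contains`, and `sample_interior` are hypothetical and chosen only for illustration.

```python
import math
import random
from typing import Callable, List, Tuple

# A signed distance function (SDF) maps a point to a number that is
# negative inside the shape, zero on the boundary, and positive outside.
SDF = Callable[[float, float], float]


def circle(cx: float, cy: float, r: float) -> SDF:
    """Circle of radius r centred at (cx, cy)."""
    return lambda x, y: math.hypot(x - cx, y - cy) - r


def rectangle(x0: float, y0: float, x1: float, y1: float) -> SDF:
    """Axis-aligned rectangle with corners (x0, y0) and (x1, y1)."""
    cx, cy = (x0 + x1) / 2, (y0 + y1) / 2
    hx, hy = (x1 - x0) / 2, (y1 - y0) / 2

    def sdf(x: float, y: float) -> float:
        dx, dy = abs(x - cx) - hx, abs(y - cy) - hy
        outside = math.hypot(max(dx, 0.0), max(dy, 0.0))
        inside = min(max(dx, dy), 0.0)
        return outside + inside

    return sdf


# The three boolean operations. The sign of the result is always correct,
# although the value is no longer an exact distance in general.
def union(a: SDF, b: SDF) -> SDF:
    return lambda x, y: min(a(x, y), b(x, y))


def intersection(a: SDF, b: SDF) -> SDF:
    return lambda x, y: max(a(x, y), b(x, y))


def difference(a: SDF, b: SDF) -> SDF:
    return lambda x, y: max(a(x, y), -b(x, y))


def contains(shape: SDF, x: float, y: float) -> bool:
    return shape(x, y) <= 0.0


def sample_interior(shape: SDF, n: int,
                    bounds: Tuple[Tuple[float, float], Tuple[float, float]],
                    seed: int = 0) -> List[Tuple[float, float]]:
    """Rejection sampling: draw uniformly in a bounding box, keep interior points."""
    rng = random.Random(seed)
    (x0, x1), (y0, y1) = bounds
    points = []
    while len(points) < n:
        x, y = rng.uniform(x0, x1), rng.uniform(y0, y1)
        if contains(shape, x, y):
            points.append((x, y))
    return points


# A square plate with a circular hole, built without any mesh.
plate = difference(rectangle(-1, -1, 1, 1), circle(0, 0, 0.5))
interior_points = sample_interior(plate, 100, ((-1, 1), (-1, 1)))
```

Because a shape is just a function, composed shapes can be combined again, which is why three operations are enough to build fairly complex domains.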

|

||||||

|

|

||||||

|

It is also easy to customize IDRLnet to meet new demands.
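
The idea that a graph can be assembled from the label symbols of each node can be sketched as follows. This is an illustrative toy, not IDRLnet's implementation: the `Node` dataclass, `resolve_order`, and `evaluate` below are hypothetical, and the real ``Node`` class may look quite different. Each node only declares which symbols it consumes and produces, and the execution order is derived from those names.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Node:
    name: str
    inputs: Tuple[str, ...]    # symbols this node consumes
    outputs: Tuple[str, ...]   # symbols this node produces
    fn: Callable[..., tuple]   # returns one value per output symbol


def resolve_order(nodes: List[Node]) -> List[Node]:
    """Order nodes so that each one runs after the producers of its inputs."""
    producer: Dict[str, Node] = {}
    for node in nodes:
        for symbol in node.outputs:
            if symbol in producer:
                raise ValueError(f"symbol {symbol!r} is produced twice")
            producer[symbol] = node

    ordered: List[Node] = []
    state: Dict[str, str] = {}

    def visit(node: Node) -> None:
        if state.get(node.name) == "done":
            return
        if state.get(node.name) == "visiting":
            raise ValueError(f"cyclic dependency at node {node.name!r}")
        state[node.name] = "visiting"
        for symbol in node.inputs:
            if symbol not in producer:
                raise KeyError(f"no node produces symbol {symbol!r}")
            visit(producer[symbol])
        state[node.name] = "done"
        ordered.append(node)

    for node in nodes:
        visit(node)
    return ordered


def evaluate(nodes: List[Node]) -> Dict[str, float]:
    """Run every node once, wiring outputs to inputs by symbol name."""
    values: Dict[str, float] = {}
    for node in resolve_order(nodes):
        results = node.fn(*(values[s] for s in node.inputs))
        values.update(zip(node.outputs, results))
    return values


# Nodes are listed in arbitrary order; the symbols decide the wiring.
nodes = [
    Node("residual", ("u", "target"), ("loss",), lambda u, t: ((u - t) ** 2,)),
    Node("net", ("x",), ("u",), lambda x: (2.0 * x,)),
    Node("data", (), ("x", "target"), lambda: (3.0, 5.0)),
]
values = evaluate(nodes)
```

Here `data` plays the role of a data source, `net` an operation, and `residual` a constraint; the user never writes the edges between them explicitly.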

|

||||||

|

|

||||||

|

- Main Dependencies

|

||||||

|

|

||||||

|

- [Matplotlib](https://matplotlib.org/)

|

||||||

|

- [NumPy](http://www.numpy.org/)

|

||||||

|

- [SymPy](https://www.sympy.org/)==1.5.1

|

||||||

|

- [PyTorch](https://pytorch.org/)>=1.7.0
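
After installation, the main dependencies listed above can be checked with a short script. This is a sketch that assumes Python 3.8 or later (for `importlib.metadata`) and assumes the PyPI distribution names `matplotlib`, `numpy`, `sympy`, and `torch`; the helper `installed_versions` is hypothetical and not part of IDRLnet.

```python
from importlib import metadata
from typing import Dict, Iterable, Optional


def installed_versions(packages: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if missing."""
    versions: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    # Distribution names are assumptions; PyTorch is published as "torch".
    for name, version in installed_versions(
        ["matplotlib", "numpy", "sympy", "torch"]
    ).items():
        print(f"{name}: {version or 'not installed'}")
```

The script only reports what is installed; comparing the result against the pinned versions (for example SymPy 1.5.1) is left to the reader.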

|

||||||

|

|

||||||

|

## Contributing to IDRLnet

|

||||||

|

|

||||||

|

First off, thanks for taking the time to contribute!

|

||||||

|

|

||||||

|

- **Reporting bugs.** To report a bug, simply open an issue in the GitHub "Issues" section.

|

||||||

|

|

||||||

|

- **Suggesting enhancements.** To submit an enhancement suggestion for IDRLnet, including completely new features and

|

||||||

|

minor improvements to existing functionality, let us know by opening an issue.

|

||||||

|

|

||||||

|

- **Pull requests.** If you have improved IDRLnet, fixed a bug, or written a new example, feel free to send us a

|

||||||

|

pull request.

|

||||||

|

|

||||||

|

- **Asking questions.** To get help on how to use IDRLnet or its functionalities, you can also open an issue.

|

||||||

|

|

||||||

|

- **Answering questions.** If you know the answer to any question in the "Issues" section, you are welcome to answer it.

|

||||||

|

|

||||||

|

## The Team

|

||||||

|

|

||||||

|

IDRLnet was originally developed by the IDRL lab.

|

||||||

|

|

||||||

|

|

@ -0,0 +1,20 @@

|

||||||

|

# Minimal makefile for Sphinx documentation

|

||||||

|

#

|

||||||

|

|

||||||

|

# You can set these variables from the command line, and also

|

||||||

|

# from the environment for the first two.

|

||||||

|

SPHINXOPTS ?=

|

||||||

|

SPHINXBUILD ?= sphinx-build

|

||||||

|

SOURCEDIR = .

|

||||||

|

BUILDDIR = _build

|

||||||

|

|

||||||

|

# Put it first so that "make" without argument is like "make help".

|

||||||

|

help:

|

||||||

|

@$(SPHINXBUILD) -M help "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

|

||||||

|

|

||||||

|

.PHONY: help Makefile

|

||||||

|

|

||||||

|

# Catch-all target: route all unknown targets to Sphinx using the new

|

||||||

|

# "make mode" option. $(O) is meant as a shortcut for $(SPHINXOPTS).

|

||||||

|

%: Makefile

|

||||||

|

@$(SPHINXBUILD) -M $@ "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

|

||||||

|

|

@ -0,0 +1,87 @@

|

||||||

|

# Configuration file for the Sphinx documentation builder.

|

||||||

|

#

|

||||||

|

# This file only contains a selection of the most common options. For a full

|

||||||

|

# list see the documentation:

|

||||||

|

# https://www.sphinx-doc.org/en/master/usage/configuration.html

|

||||||

|

|

||||||

|

# -- Path setup --------------------------------------------------------------

|

||||||

|

|

||||||

|

# If extensions (or modules to document with autodoc) are in another directory,

|

||||||

|

# add these directories to sys.path here. If the directory is relative to the

|

||||||

|

# documentation root, use os.path.abspath to make it absolute, like shown here.

|

||||||

|

#

|

||||||

|

import os

|

||||||

|

import sys

|

||||||

|

|

||||||

|

sys.path.insert(0, os.path.abspath('..'))

|

||||||

|

|

||||||

|

# -- Project information -----------------------------------------------------

|

||||||

|

|

||||||

|

project = 'idrlnet'

|

||||||

|

copyright = '2021, IDRL'

|

||||||

|

author = 'IDRL'

|

||||||

|

|

||||||

|

# The full version, including alpha/beta/rc tags

|

||||||

|

release = '1.0.4'

|

||||||

|

|

||||||

|

# -- General configuration ---------------------------------------------------

|

||||||

|

|

||||||

|

# Add any Sphinx extension module names here, as strings. They can be

|

||||||

|

# extensions coming with Sphinx (named 'sphinx.ext.*') or your custom

|

||||||

|

# ones.

|

||||||

|

extensions = [

|

||||||

|

"sphinx.ext.autodoc",

|

||||||

|

"sphinx.ext.mathjax",

|

||||||

|

"sphinx.ext.napoleon",

|

||||||

|

"sphinx.ext.viewcode",

|

||||||

|

'myst_parser',

|

||||||

|

'sphinx.ext.autosectionlabel',

|

||||||

|

]

|

||||||

|

|

||||||

|

# Add any paths that contain templates here, relative to this directory.

|

||||||

|

templates_path = ['_templates']

|

||||||

|

|

||||||

|

source_suffix = {

|

||||||

|

'.rst': 'restructuredtext',

|

||||||

|

'.txt': 'markdown',

|

||||||

|

'.md': 'markdown',

|

||||||

|

}

|

||||||

|

# List of patterns, relative to source directory, that match files and

|

||||||

|

# directories to ignore when looking for source files.

|

||||||

|

# This pattern also affects html_static_path and html_extra_path.

|

||||||

|

exclude_patterns = ['_build', 'Thumbs.db', '.DS_Store']

|

||||||

|

|

||||||

|

# -- Options for HTML output -------------------------------------------------

|

||||||

|

|

||||||

|

# The theme to use for HTML and HTML Help pages. See the documentation for

|

||||||

|

# a list of builtin themes.

|

||||||

|

#

|

||||||

|

html_theme = 'sphinx_rtd_theme'

|

||||||

|

|

||||||

|

# Add any paths that contain custom static files (such as style sheets) here,

|

||||||

|

# relative to this directory. They are copied after the builtin static files,

|

||||||

|

# so a file named "default.css" will overwrite the builtin "default.css".

|

||||||

|

html_static_path = ['_static']

|

||||||

|

|

||||||

|

# for MarkdownParser

|

||||||

|

# from sphinx_markdown_parser.parser import MarkdownParser  # only needed by the commented-out setup() below

|

||||||

|

|

||||||

|

|

||||||

|

# def setup(app):

|

||||||

|

# # app.add_source_suffix('.md', 'markdown')

|

||||||

|

# # app.add_source_parser(MarkdownParser)

|

||||||

|

# app.add_config_value('markdown_parser_config', {

|

||||||

|

# 'auto_toc_tree_section': 'Content',

|

||||||

|

# 'enable_auto_doc_ref': True,

|

||||||

|

# 'enable_auto_toc_tree': True,

|

||||||

|

# 'enable_eval_rst': True,

|

||||||

|

# 'extensions': [

|

||||||

|

# 'extra',

|

||||||

|

# 'nl2br',

|

||||||

|

# 'sane_lists',

|

||||||

|

# 'smarty',

|

||||||

|

# 'toc',

|

||||||

|

# 'wikilinks',

|

||||||

|

# 'pymdownx.arithmatex',

|

||||||

|

# ],

|

||||||

|

# }, True)

|

||||||

|

|

@ -0,0 +1,48 @@

|

||||||

|

Welcome to idrlnet's documentation!

|

||||||

|

===================================

|

||||||

|

|

||||||

|

.. toctree::

|

||||||

|

:maxdepth: 2

|

||||||

|

|

||||||

|

user/installation

|

||||||

|

user/get_started/tutorial

|

||||||

|

user/cite_idrlnet

|

||||||

|

user/team

|

||||||

|

|

||||||

|

Features

|

||||||

|

--------

|

||||||

|

|

||||||

|

IDRLnet is a machine learning library on top of `PyTorch <https://pytorch.org/>`_. Use IDRLnet if you need a machine

|

||||||

|

learning library that solves both forward and inverse partial differential equations (PDEs) via physics-informed neural

|

||||||

|

networks (PINN). IDRLnet is a flexible framework inspired by `Nvidia Simnet <https://developer.nvidia.com/simnet>`_.

|

||||||

|

|

||||||

|

IDRLnet supports

|

||||||

|

|

||||||

|

- complex domain geometries without mesh generation. Provided geometries include interval, triangle, rectangle, polygon,

|

||||||

|

circle, sphere... Other geometries can be constructed using three boolean operations: union, difference, and

|

||||||

|

intersection;

|

||||||

|

- sampling in the interior of the defined geometry or on the boundary with given conditions;

|

||||||

|

- structured user code. Data sources, operations, and constraints are all represented by ``Node``. The graph
  is constructed automatically from the label symbols of each node. Because no explicit graph construction is
  required, users can model problems more naturally;

|

||||||

|

- solving variational minimization problems;

|

||||||

|

- solving integro-differential equations;

|

||||||

|

- adaptive resampling;

|

||||||

|

- recovering unknown parameters of PDEs from noisy measurement data.

|

||||||

|

|

||||||

|

API reference

|

||||||

|

=============

|

||||||

|

If you are looking for usage of a specific function, class or method, please refer to the following part.

|

||||||

|

|

||||||

|

.. toctree::

|

||||||

|

:maxdepth: 2

|

||||||

|

|

||||||

|

|

||||||

|

modules/modules

|

||||||

|

|

||||||

|

Indices and tables

|

||||||

|

==================

|

||||||

|

|

||||||

|

* :ref:`genindex`

|

||||||

|

* :ref:`modindex`

|

||||||

|

* :ref:`search`

|

||||||

|

|

@ -0,0 +1,35 @@

|

||||||

|

@ECHO OFF

|

||||||

|

|

||||||

|

pushd %~dp0

|

||||||

|

|

||||||

|

REM Command file for Sphinx documentation

|

||||||

|

|

||||||

|

if "%SPHINXBUILD%" == "" (

|

||||||

|

set SPHINXBUILD=sphinx-build

|

||||||

|

)

|

||||||

|

set SOURCEDIR=.

|

||||||

|

set BUILDDIR=_build

|

||||||

|

|

||||||

|

if "%1" == "" goto help

|

||||||

|

|

||||||

|

%SPHINXBUILD% >NUL 2>NUL

|

||||||

|

if errorlevel 9009 (

|

||||||

|

echo.

|

||||||

|

echo.The 'sphinx-build' command was not found. Make sure you have Sphinx

|

||||||

|

echo.installed, then set the SPHINXBUILD environment variable to point

|

||||||

|

echo.to the full path of the 'sphinx-build' executable. Alternatively you

|

||||||

|

echo.may add the Sphinx directory to PATH.

|

||||||

|

echo.

|

||||||

|

echo.If you don't have Sphinx installed, grab it from

|

||||||

|

echo.http://sphinx-doc.org/

|

||||||

|

exit /b 1

|

||||||

|

)

|

||||||

|

|

||||||

|

%SPHINXBUILD% -M %1 %SOURCEDIR% %BUILDDIR% %SPHINXOPTS% %O%

|

||||||

|

goto end

|

||||||

|

|

||||||

|

:help

|

||||||

|

%SPHINXBUILD% -M help %SOURCEDIR% %BUILDDIR% %SPHINXOPTS% %O%

|

||||||

|

|

||||||

|

:end

|

||||||

|

popd

|

||||||

|

|

@ -0,0 +1,34 @@

|

||||||

|

idrlnet.architecture package

|

||||||

|

============================

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.architecture

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

||||||

|

Submodules

|

||||||

|

----------

|

||||||

|

|

||||||

|

idrlnet.architecture.grid module

|

||||||

|

--------------------------------

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.architecture.grid

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

||||||

|

idrlnet.architecture.layer module

|

||||||

|

---------------------------------

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.architecture.layer

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

||||||

|

idrlnet.architecture.mlp module

|

||||||

|

-------------------------------

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.architecture.mlp

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

@ -0,0 +1,42 @@

|

||||||

|

idrlnet.geo\_utils package

|

||||||

|

==========================

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.geo_utils

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

||||||

|

Submodules

|

||||||

|

----------

|

||||||

|

|

||||||

|

idrlnet.geo\_utils.geo module

|

||||||

|

-----------------------------

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.geo_utils.geo

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

||||||

|

idrlnet.geo\_utils.geo\_builder module

|

||||||

|

--------------------------------------

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.geo_utils.geo_builder

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

||||||

|

idrlnet.geo\_utils.geo\_obj module

|

||||||

|

----------------------------------

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.geo_utils.geo_obj

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

||||||

|

idrlnet.geo\_utils.sympy\_np module

|

||||||

|

-----------------------------------

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.geo_utils.sympy_np

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

@ -0,0 +1,26 @@

|

||||||

|

idrlnet.pde\_op package

|

||||||

|

=======================

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.pde_op

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

||||||

|

Submodules

|

||||||

|

----------

|

||||||

|

|

||||||

|

idrlnet.pde\_op.equations module

|

||||||

|

--------------------------------

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.pde_op.equations

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

||||||

|

idrlnet.pde\_op.operator module

|

||||||

|

-------------------------------

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.pde_op.operator

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

@ -0,0 +1,124 @@

|

||||||

|

idrlnet package

|

||||||

|

===============

|

||||||

|

|

||||||

|

.. automodule:: idrlnet

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

||||||

|

Subpackages

|

||||||

|

-----------

|

||||||

|

|

||||||

|

.. toctree::

|

||||||

|

:maxdepth: 4

|

||||||

|

|

||||||

|

idrlnet.architecture

|

||||||

|

idrlnet.geo_utils

|

||||||

|

idrlnet.pde_op

|

||||||

|

|

||||||

|

Submodules

|

||||||

|

----------

|

||||||

|

|

||||||

|

idrlnet.callbacks module

|

||||||

|

------------------------

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.callbacks

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

||||||

|

idrlnet.data module

|

||||||

|

-------------------

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.data

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

||||||

|

idrlnet.graph module

|

||||||

|

--------------------

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.graph

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

||||||

|

idrlnet.header module

|

||||||

|

---------------------

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.header

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

||||||

|

idrlnet.net module

|

||||||

|

------------------

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.net

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

||||||

|

idrlnet.node module

|

||||||

|

-------------------

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.node

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

||||||

|

idrlnet.optim module

|

||||||

|

--------------------

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.optim

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

||||||

|

idrlnet.pde module

|

||||||

|

------------------

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.pde

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

||||||

|

idrlnet.receivers module

|

||||||

|

------------------------

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.receivers

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

||||||

|

idrlnet.shortcut module

|

||||||

|

-----------------------

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.shortcut

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

||||||

|

idrlnet.solver module

|

||||||

|

---------------------

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.solver

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

||||||

|

idrlnet.torch\_util module

|

||||||

|

--------------------------

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.torch_util

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

||||||

|

idrlnet.variable module

|

||||||

|

-----------------------

|

||||||

|

|

||||||

|

.. automodule:: idrlnet.variable

|

||||||

|

:members:

|

||||||

|

:undoc-members:

|

||||||

|

:show-inheritance:

|

||||||

|

|

@ -0,0 +1,7 @@

|

||||||

|

idrlnet

|

||||||

|

=======

|

||||||

|

|

||||||

|

.. toctree::

|

||||||

|

:maxdepth: 4

|

||||||

|

|

||||||

|

idrlnet

|

||||||

|

|

@ -0,0 +1,2 @@

|

||||||

|

# Cite IDRLnet

|

||||||

|

The paper is to appear on arXiv.

|

||||||

|

|

@ -0,0 +1,231 @@

|

||||||

|

# Solving Simple Poisson Equation

|

||||||

|

|

||||||

|

Inspired by [Nvidia SimNet](https://developer.nvidia.com/simnet),

|

||||||

|

IDRLnet employs symbolic links to construct a computational graph automatically.

|

||||||

|

In this section, we introduce the primary usage of IDRLnet.

|

||||||

|

To solve a problem with a PINN via IDRLnet, we divide the procedure into several parts:

|

||||||

|

|

||||||

|

1. Define symbols and parameters.

|

||||||

|

1. Define geometry objects.

|

||||||

|

1. Define sampling domains and corresponding constraints.

|

||||||

|

1. Define neural networks and PDEs.

|

||||||

|

1. Define solver and solve.

|

||||||

|

1. Post-processing.

|

||||||

|

|

||||||

|

We provide the following example to illustrate the primary usages and features of IDRLnet.

|

||||||

|

|

||||||

|

Consider the 2D Poisson equation $-\Delta u=1$ defined on $\Omega=[-1,1]\times[-1,1]$, with

|

||||||

|

the boundary value conditions:

|

||||||

|

|

||||||

|

$$

|

||||||

|

\begin{align}

|

||||||

|

\frac{\partial u(x, -1)}{\partial n}&=\frac{\partial u(x, 1)}{\partial n}=0 \\

|

||||||

|

u(-1,y)&=u(1, y)=0

|

||||||

|

\end{align}

|

||||||

|

$$
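
As a quick sanity check on this setup (a derivation added for reference, not part of the original example), note that neither the source term nor the boundary data depends on $y$. One can therefore look for a solution of the form $u(x,y)=v(x)$, which reduces the problem to an ordinary differential equation:

$$
\begin{align}
-v''(x)&=1, \quad v(-1)=v(1)=0 \\
v(x)&=\frac{1-x^{2}}{2}
\end{align}
$$

Since $\partial u/\partial y=0$ everywhere, the Neumann conditions on $y=\pm 1$ hold as well, so $u(x,y)=(1-x^{2})/2$ can serve as a reference when judging the trained network.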

|

||||||

|

|

||||||

|

## Define Symbols

|

||||||

|

For the 2d problem, we define two coordinate symbols `x` and `y`, which will be used in symbolic expressions in IDRLnet.

|

||||||

|

```python

|

||||||

|

import sympy as sp
import idrlnet.shortcut as sc

x, y = sp.symbols('x y')

|

||||||

|

```

|

||||||

|

Note that variables `x`, `y`, `z`, `t` are reserved inside IDRLnet.

|

||||||

|

The four symbols should only represent the 4 primary coordinates.

|

||||||

|

|

||||||

|

## Define Geometric Objects

|

||||||

|

|

||||||

|

The geometry object is a simple rectangle.

|

||||||

|

```python

|

||||||

|

rec = sc.Rectangle((-1., -1.), (1., 1.))

|

||||||

|

```

|

||||||

|

|

||||||

|

Users can sample points on these geometry objects. The operators `+`, `-`, `&` are also supported.

|

||||||

|

A slightly more complicated example is as follows:

|

||||||

|

```python

|

||||||

|

import numpy as np

|

||||||

|

import idrlnet.shortcut as sc

|

||||||

|

|

||||||

|

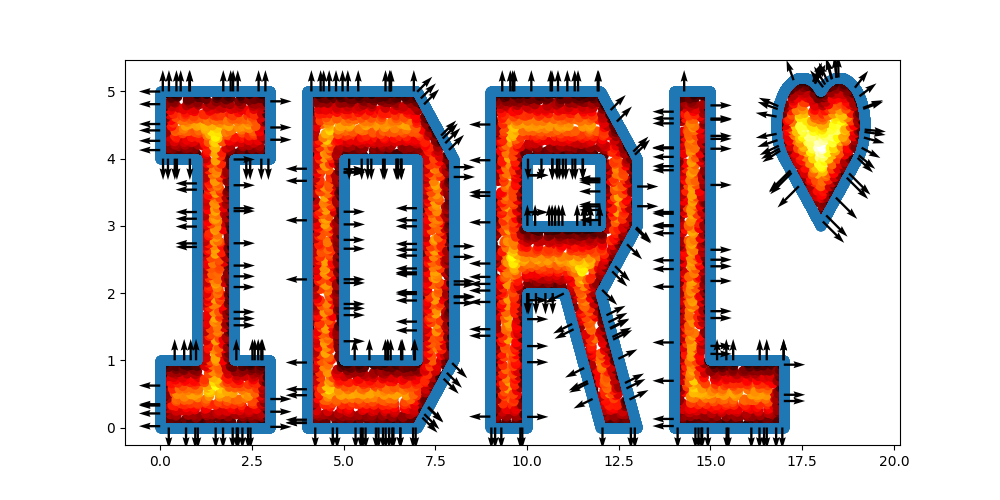

# Define 4 polygons

|

||||||

|

I = sc.Polygon([(0, 0), (3, 0), (3, 1), (2, 1), (2, 4), (3, 4), (3, 5), (0, 5), (0, 4), (1, 4), (1, 1), (0, 1)])

|

||||||

|

D = sc.Polygon([(4, 0), (7, 0), (8, 1), (8, 4), (7, 5), (4, 5)]) - sc.Polygon(([5, 1], [7, 1], [7, 4], [5, 4]))

|

||||||

|

R = sc.Polygon([(9, 0), (10, 0), (10, 2), (11, 2), (12, 0), (13, 0), (12, 2), (13, 3), (13, 4), (12, 5), (9, 5)]) \

|

||||||

|

- sc.Rectangle(point_1=(10., 3.), point_2=(12, 4))

|

||||||

|

L = sc.Polygon([(14, 0), (17, 0), (17, 1), (15, 1), (15, 5), (14, 5)])

|

||||||

|

|

||||||

|

# Define a heart shape.

|

||||||

|

heart = sc.Heart((18, 4), radius=1)

|

||||||

|

|

||||||

|

# Union of the 5 geometry objects

|

||||||

|

geo = (I + D + R + L + heart)

|

||||||

|

|

||||||

|

# interior samples

|

||||||

|

points = geo.sample_interior(density=100, low_discrepancy=True)

|

||||||

|

plt.figure(figsize=(10, 5))

|

||||||

|

plt.scatter(x=points['x'], y=points['y'], c=points['sdf'], cmap='hot')

|

||||||

|

|

||||||

|

# boundary samples

|

||||||

|

points = geo.sample_boundary(density=400, low_discrepancy=True)

|

||||||

|

plt.scatter(x=points['x'], y=points['y'])

|

||||||

|

idx = np.random.choice(points['x'].shape[0], 400, replace=False)

|

||||||

|

|

||||||

|

# Show normal directions on boundary

|

||||||

|

plt.quiver(points['x'][idx], points['y'][idx], points['normal_x'][idx], points['normal_y'][idx])

|

||||||

|

plt.show()

|

||||||

|

```
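
The operators `+`, `-`, `&` on geometry objects can be understood in terms of signed distance functions. The following is a minimal plain-Python sketch of that idea, not IDRLnet's implementation: the helper names (`circle`, `union`, `intersection`, `difference`, `inside`) are hypothetical, and the sign convention (negative inside the shape) is an assumption that may differ from the `sdf` values IDRLnet returns.

```python
import math


def circle(cx, cy, r):
    """Signed distance to a circle: negative inside, positive outside (assumed convention)."""
    def sdf(x, y):
        return math.hypot(x - cx, y - cy) - r
    return sdf


def union(a, b):
    """Analogue of `a + b`: a point is inside if it is inside either shape."""
    return lambda x, y: min(a(x, y), b(x, y))


def intersection(a, b):
    """Analogue of `a & b`: a point is inside only if it is inside both shapes."""
    return lambda x, y: max(a(x, y), b(x, y))


def difference(a, b):
    """Analogue of `a - b`: inside `a` and outside `b`."""
    return lambda x, y: max(a(x, y), -b(x, y))


def inside(shape, x, y):
    return shape(x, y) < 0


c1 = circle(0.0, 0.0, 1.0)
c2 = circle(1.0, 0.0, 1.0)

print(inside(union(c1, c2), 1.5, 0.0))         # point lies in c2 only
print(inside(intersection(c1, c2), 0.5, 0.0))  # point lies in both circles
print(inside(difference(c1, c2), -0.5, 0.0))   # point lies in c1 only
```

Combining shapes with `min` and `max` keeps the sign of the result correct, which is all that is needed to decide whether a sampled point belongs to the combined geometry.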

|

||||||

|

|

||||||

|

|

||||||

|

## Define Sampling Methods and Constraints

|

||||||

|

Take a 1D fitting task as an example.

|

||||||

|

The data source generates pairs $(x_i, f_i)$. We train a network $u_\theta(x_i)\approx f_i$.

|

||||||

|

Then $f_i$ is the target output of $u_\theta(x_i)$.

|

||||||

|

These targets are called constraints in IDRLnet.
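
To make the notion of a target concrete, the sketch below fits a one-parameter model to pairs $(x_i, f_i)$ by gradient descent on the mean squared error. It is plain Python rather than IDRLnet code: the toy model `u_theta`, the data, the learning rate, and the choice of a squared-error loss are all illustrative assumptions.

```python
# Hypothetical data source: pairs (x_i, f_i) generated from f = 2 * x.
xs = [0.0, 0.5, 1.0]
fs = [2.0 * x for x in xs]


def u_theta(theta, x):
    """Toy one-parameter 'network': u_theta(x) = theta * x."""
    return theta * x


def mse(theta):
    """Mean squared mismatch between the outputs and their targets f_i."""
    return sum((u_theta(theta, x) - f) ** 2 for x, f in zip(xs, fs)) / len(xs)


def mse_grad(theta):
    """Derivative of the mean squared error with respect to theta."""
    return sum(2.0 * (u_theta(theta, x) - f) * x for x, f in zip(xs, fs)) / len(xs)


theta = 0.0
for _ in range(200):
    theta -= 0.1 * mse_grad(theta)  # plain gradient descent step

print(theta, mse(theta))
```

Here the values `fs` play the role of the constraints: training drives the outputs towards them.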

|

||||||

|

|

||||||

|

For the problem, three constraints are presented.

|

||||||

|

|

||||||

|

The constraint

|

||||||

|

|

||||||

|

$$

|

||||||

|

u(-1,y)=u(1, y)=0

|

||||||

|

$$

|

||||||

|

is translated into

|

||||||

|

```python

|

||||||

|

@sc.datanode
class LeftRight(sc.SampleDomain):
    # Because `name` is not specified, the datanode is automatically named LeftRight
    def sampling(self, *args, **kwargs):
        # `sieve` defines rules to filter points
        points = rec.sample_boundary(1000, sieve=((y > -1.) & (y < 1.)))
        constraints = sc.Variables({'T': 0.})
        return points, constraints

|

||||||

|

```

|

||||||

|

Then `LeftRight()` is wrapped as an instance of `DataNode`.

|

||||||

|

One can store states in these instances.

|

||||||

|

Alternatively, if users do not need to store states, the code above is equivalent to

|

||||||

|

```python

|

||||||

|

@sc.datanode(name='LeftRight')
def leftright(self, *args, **kwargs):
    points = rec.sample_boundary(1000, sieve=((y > -1.) & (y < 1.)))
    constraints = sc.Variables({'T': 0.})
    return points, constraints

|

||||||

|

```

|

||||||

|

Then `leftright()` is wrapped as an instance of `DataNode`.

|

||||||

|

|

||||||

|

The constraint

|

||||||

|

|

||||||

|

$$

|

||||||

|

\frac{\partial u(x, -1)}{\partial n}=\frac{\partial u(x, 1)}{\partial n}=0

|

||||||

|

$$

|

||||||

|

is translated into

|

||||||

|

|

||||||

|

```python

|

||||||

|

@sc.datanode(name="up_down")
class UpDownBoundaryDomain(sc.SampleDomain):
    def sampling(self, *args, **kwargs):
        points = rec.sample_boundary(1000, sieve=((x > -1.) & (x < 1.)))
        constraints = sc.Variables({'normal_gradient_T': 0.})
        return points, constraints

|

||||||

|

```

|

||||||

|

The constraint `normal_gradient_T` will also be one of the outputs of computational nodes, including `PdeNode` or `NetNode`.

|

||||||

|

|

||||||

|

The last constraint is the PDE itself $-\Delta u=1$:

|

||||||

|

|

||||||

|

```python

|

||||||

|

@sc.datanode(name="heat_domain")
class HeatDomain(sc.SampleDomain):
    def __init__(self):
        self.points = 1000

    def sampling(self, *args, **kwargs):
        points = rec.sample_interior(self.points)
        constraints = sc.Variables({'diffusion_T': 1.})
        return points, constraints

|

||||||

|

```

|

||||||

|

`diffusion_T` will also be one of the outputs of computable nodes.

|

||||||

|

`self.points` is a stored state and can be varied to control the sampling behaviors.

|

||||||

|

|

||||||

|

## Define Neural Networks and PDEs

|

||||||

|

As mentioned before, neural networks and PDE expressions are encapsulated as `Node` too.

|

||||||

|

The `Node` objects have `inputs`, `derivatives`, `outputs` properties and the `evaluate()` method.

|

||||||

|

According to their inputs, derivatives, and outputs, these nodes will be automatically connected as a computational graph.

|

||||||

|

A topological sort will be applied to the graph to decide the computation order.
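
The automatic wiring described above can be sketched in plain Python. This is only an illustration under stated assumptions, not IDRLnet's implementation: the node table, the `sampler` node, and the helpers `base_name` and `build_dependencies` are hypothetical, and the standard-library `graphlib` module (Python 3.9+) stands in for whatever sorting routine the library uses.

```python
from graphlib import TopologicalSorter

# Hypothetical node descriptions: name -> (inputs, derivatives, outputs).
nodes = {
    "sampler": ((), (), ("x", "y", "normal_x", "normal_y")),
    "net1": (("x", "y"), (), ("T",)),
    "normal_gradient": (
        ("normal_x", "normal_y"),
        ("T__x", "T__y"),
        ("normal_gradient_T",),
    ),
}


def base_name(symbol):
    """Strip derivative suffixes: 'T__x' depends on whichever node outputs 'T'."""
    return symbol.split("__")[0]


def build_dependencies(nodes):
    """Map each node to the set of nodes that produce what it requires."""
    producer = {out: name for name, (_, _, outs) in nodes.items() for out in outs}
    deps = {}
    for name, (inputs, derivatives, _) in nodes.items():
        required = list(inputs) + [base_name(d) for d in derivatives]
        deps[name] = {producer[r] for r in required}
    return deps


deps = build_dependencies(nodes)
# static_order() yields every node after all of its predecessors.
order = list(TopologicalSorter(deps).static_order())
print(order)
```

Matching nodes purely by the names of their inputs and outputs is what lets users add or remove nodes without describing the graph edges by hand.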

|

||||||

|

|

||||||

|

```python

|

||||||

|

net = sc.get_net_node(inputs=('x', 'y',), outputs=('T',), name='net1', arch=sc.Arch.mlp)

|

||||||

|

```

|

||||||

|

This is a simple call to get a neural network with the predefined architecture.

|

||||||

|

As an alternative, one can specify the configurations via

|

||||||

|

```python

|

||||||

|

evaluate = MLP(n_seq=[2, 20, 20, 20, 20, 1],
               activation=Activation.swish,
               initialization=Initializer.kaiming_uniform,
               weight_norm=True)
net = NetNode(inputs=('x', 'y',), outputs=('T',), net=evaluate, name='net1', *args, **kwargs)

|

||||||

|

```

|

||||||

|

which generates a node with

|

||||||

|

- `inputs=('x','y')`,

|

||||||

|

- `derivatives=tuple()`,

|

||||||

|

- `outputs=('T',)`.

|

||||||

|

```python

|

||||||

|

pde = sc.DiffusionNode(T='T', D=1., Q=0., dim=2, time=False)

|

||||||

|

```

|

||||||

|

generates a node with

|

||||||

|

- `inputs=tuple()`,

|

||||||

|

- `derivatives=('T__x', 'T__y')`,

|

||||||

|

- `outputs=('diffusion_T',)`.

|

||||||

|

|

||||||

|

```python

|

||||||

|

grad = sc.NormalGradient('T', dim=2, time=False)

|

||||||

|

```

|

||||||

|

generates a node with

|

||||||

|

- `inputs=('normal_x', 'normal_y')`,

|

||||||

|

- `derivatives=('T__x', 'T__y')`,

|

||||||

|

- `outputs=('normal_gradient_T',)`.

|

||||||

|

The string `__` is reserved to represent the derivative operator.

|

||||||

|

If the required derivatives cannot be directly obtained from the outputs of other nodes,
IDRLnet will try `autograd` provided by PyTorch, starting from the output of another node that matches the longest prefix of the derivative name.
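
The prefix matching on `__`-separated names can be sketched as follows. This is a hedged illustration of the idea only: `longest_prefix_match` is a hypothetical helper, not an IDRLnet function, and the actual differentiation step (done by `autograd` in the library) is left out.

```python
def longest_prefix_match(required, available):
    """Find the longest available prefix of a derivative name.

    Returns the matched name and the list of variables that still have to be
    differentiated against, in order.
    """
    parts = required.split("__")
    for cut in range(len(parts), 0, -1):
        candidate = "__".join(parts[:cut])
        if candidate in available:
            return candidate, parts[cut:]
    raise KeyError(f"no node output matches any prefix of {required!r}")


# 'T__x' is already available, so only one more derivative w.r.t. x is needed.
print(longest_prefix_match("T__x__x", {"T", "T__x"}))
# Only 'T' is available, so both derivatives have to be computed.
print(longest_prefix_match("T__x__y", {"T"}))
```

Starting from the longest match avoids recomputing derivatives that another node already provides.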

|

||||||

|

|

||||||

|

## Define A Solver

|

||||||

|

Initialize a solver to bundle all the components and solve the model.

|

||||||

|

```python

|

||||||

|

s = sc.Solver(sample_domains=(HeatDomain(), LeftRight(), UpDownBoundaryDomain()),
              netnodes=[net],
              pdes=[pde, grad],
              max_iter=1000)
s.solve()

|

||||||

|

```

|

||||||

|

Before the solver starts running, it constructs computational graphs and applies a topological sort to decide the evaluation order.

|

||||||

|

Each sample domain has its independent graph.

|

||||||

|

The procedures will be executed automatically when the solver detects potential changes in graphs.

|

||||||

|

By default, these graphs are also visualized as `png` files in the `network` directory, each named after the corresponding domain.

|

||||||

|

|

||||||

|

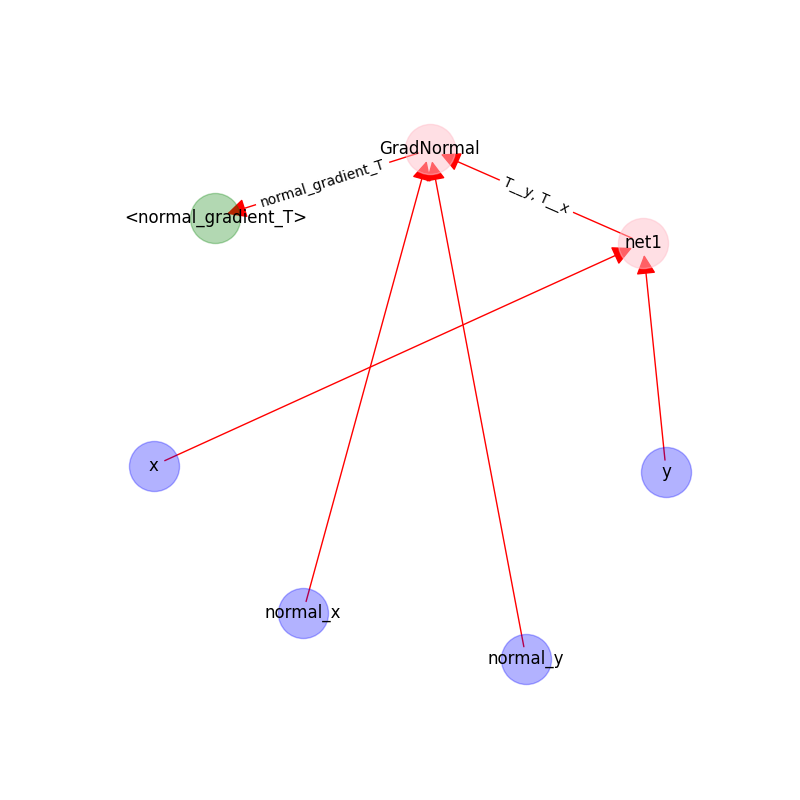

The following figure shows the graph on `UpDownBoundaryDomain`:

|

||||||

|

|

||||||

|

|

||||||

|

- The blue nodes are generated via sampling;

|

||||||

|

- the red nodes are computational;

|

||||||

|

- the green nodes are constraints (targets).

|

||||||

|

|

||||||

|

## Inference

|

||||||

|

We use domain `heat_domain` for inference.

|

||||||

|

First, we increase the density to 10000 by changing the attributes of the domain.

|

||||||

|

Then, `Solver.infer_step()` is called for inference.

|

||||||

|

```python

|

||||||

|

s.set_domain_parameter('heat_domain', {'points': 10000})

|

||||||

|

coord = s.infer_step({'heat_domain': ['x', 'y', 'T']})

|

||||||

|

num_x = coord['heat_domain']['x'].cpu().detach().numpy().ravel()

|

||||||

|

num_y = coord['heat_domain']['y'].cpu().detach().numpy().ravel()

|

||||||

|

num_Tp = coord['heat_domain']['T'].cpu().detach().numpy().ravel()

|

||||||

|

```

|

||||||

|

|

||||||

|

One may also define a separate domain for inference, which generates `constraints={}`, and thus, no computational graphs will be generated on the domain.

|

||||||

|

We will see this later.

|

||||||

|

|

||||||

|

## Performance Issues

|

||||||

|

1. When a domain is contained in `Solver.sample_domains`, its `sampling()` method is called at every iteration.

|

||||||

|

Users should avoid including redundant domains.

|

||||||

|

Future versions will ignore domains with `constraints={}` in training steps.

|

||||||

|

2. The current version samples points in memory.

|

||||||

|

When GPU devices are enabled, data exchange between the memory and GPU devices might hinder the performance.

|

||||||

|

In future versions, we will sample points directly in GPU devices if available.

|

||||||

|

|

||||||

|

See `examples/simple_poisson`.

|

||||||

|

|

@ -0,0 +1,72 @@

|

||||||

|

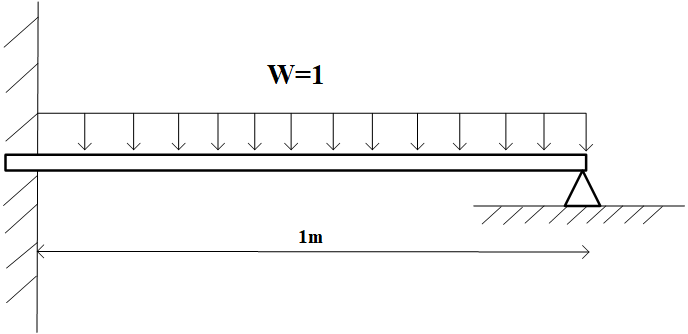

# Euler–Bernoulli beam

|

||||||

|

We consider the Euler–Bernoulli beam equation,

|

||||||

|

|

||||||

|

$$

|

||||||

|

\begin{align}

|

||||||

|

\frac{\partial^{2}}{\partial x^{2}}\left(\frac{\partial^{2} u}{\partial x^{2}}\right)=-1 \\

|

||||||

|

u|_{x=0}=0, u^{\prime}|_{x=0}=0, \\

|

||||||

|

u^{\prime \prime}|_{x=1}=0, u^{\prime \prime \prime}|_{x=1}=0,

|

||||||

|

\end{align}

|

||||||

|

$$

|

||||||

|

which models the following beam with external forces.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

## Expression Node

|

||||||

|

The Euler-Bernoulli beam equation is not implemented inside IDRLnet.

|

||||||

|

Users may add the equation to `idrlnet.pde_op.equations`.

|

||||||

|

However, one may also define the differential equation via symbol expressions directly.

|

||||||

|

|

||||||

|

First, we define a function symbol in the symbol definition part.

|

||||||

|

```python

|

||||||

|

x = sp.symbols('x')

|

||||||

|

y = sp.Function('y')(x)

|

||||||

|

```

|

||||||

|

In the PDE definition part, we add these PDE nodes:

|

||||||

|

|

||||||

|

```python

|

||||||

|

pde1 = sc.ExpressionNode(name='dddd_y', expression=y.diff(x).diff(x).diff(x).diff(x) + 1)

|

||||||

|

pde2 = sc.ExpressionNode(name='d_y', expression=y.diff(x))

|

||||||

|

pde3 = sc.ExpressionNode(name='dd_y', expression=y.diff(x).diff(x))

|

||||||

|

pde4 = sc.ExpressionNode(name='ddd_y', expression=y.diff(x).diff(x).diff(x))

|

||||||

|

```

|

||||||

|

These are instances of `idrlnet.pde.PdeNode`, which are also computational nodes.

|

||||||

|

For example, `pde1` is an instance of `Node` with

|

||||||

|

- `inputs=tuple()`;

|

||||||

|

- `derivatives=('y__x__x__x__x',)`;

|

||||||

|

- `outputs=('dddd_y',)`.

|

||||||

|

|

||||||

|

The four PDE nodes match the following operators, respectively:

|

||||||

|

- $d^4y/dx^4+1$;

|

||||||

|

- $dy/dx$;

|

||||||

|

- $d^2y/dx^2$;

|

||||||

|

- $d^3y/dx^3$.
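
For reference, the boundary value problem stated at the top of this section has the closed-form solution $u(x)=-x^4/24+x^3/6-x^2/4$ (written `y` in the code above). The sketch below, which is an added check and not part of the IDRLnet example, verifies this with exact rational arithmetic; the helpers `derivative` and `evaluate` are hypothetical names introduced here.

```python
from fractions import Fraction

# Coefficients of u(x) = -x^4/24 + x^3/6 - x^2/4, lowest degree first.
u = [Fraction(0), Fraction(0), Fraction(-1, 4), Fraction(1, 6), Fraction(-1, 24)]


def derivative(coeffs):
    """Differentiate a polynomial given by its coefficient list."""
    return [k * c for k, c in enumerate(coeffs)][1:]


def evaluate(coeffs, x):
    """Evaluate a polynomial given by its coefficient list at x."""
    return sum(c * x ** k for k, c in enumerate(coeffs))


d1 = derivative(u)
d2 = derivative(d1)
d3 = derivative(d2)
d4 = derivative(d3)

print(d4)                                # the equation: u'''' = -1
print(evaluate(u, 0), evaluate(d1, 0))   # clamped end: u(0) and u'(0)
print(evaluate(d2, 1), evaluate(d3, 1))  # free end: u''(1) and u'''(1)
```

The same polynomial can be evaluated at the inference points to measure the error of the trained network.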

|

||||||

|

|

||||||

|

## Separate Inference Domain

|

||||||

|

In this example, we define a domain specified for inference.

|

||||||

|

```python

|

||||||

|

@sc.datanode(name='infer')
class Infer(sc.SampleDomain):
    def sampling(self, *args, **kwargs):
        return {'x': np.linspace(0, 1, 1000).reshape(-1, 1)}, {}

|

||||||

|

```

|

||||||

|

Its instance is not passed to the solver initializer,
which may improve performance, since `Infer().sampling` is then not called during training.

|

||||||

|

After the solving procedure ends, we change the `sample_domains` of the solver,

|

||||||

|

|

||||||

|

```python

|

||||||

|

solver.sample_domains = (Infer(),)

|

||||||

|

```

|

||||||

|

which triggers the regeneration of the computational graph. Then `solver.infer_step()` is called.

|

||||||

|

|

||||||

|

```python

|

||||||

|

points = solver.infer_step({'infer': ['x', 'y']})

|

||||||

|

xs = points['infer']['x'].detach().cpu().numpy().ravel()

|

||||||

|

y_pred = points['infer']['y'].detach().cpu().numpy().ravel()

|

||||||

|

```

|

||||||

|

|

||||||

|

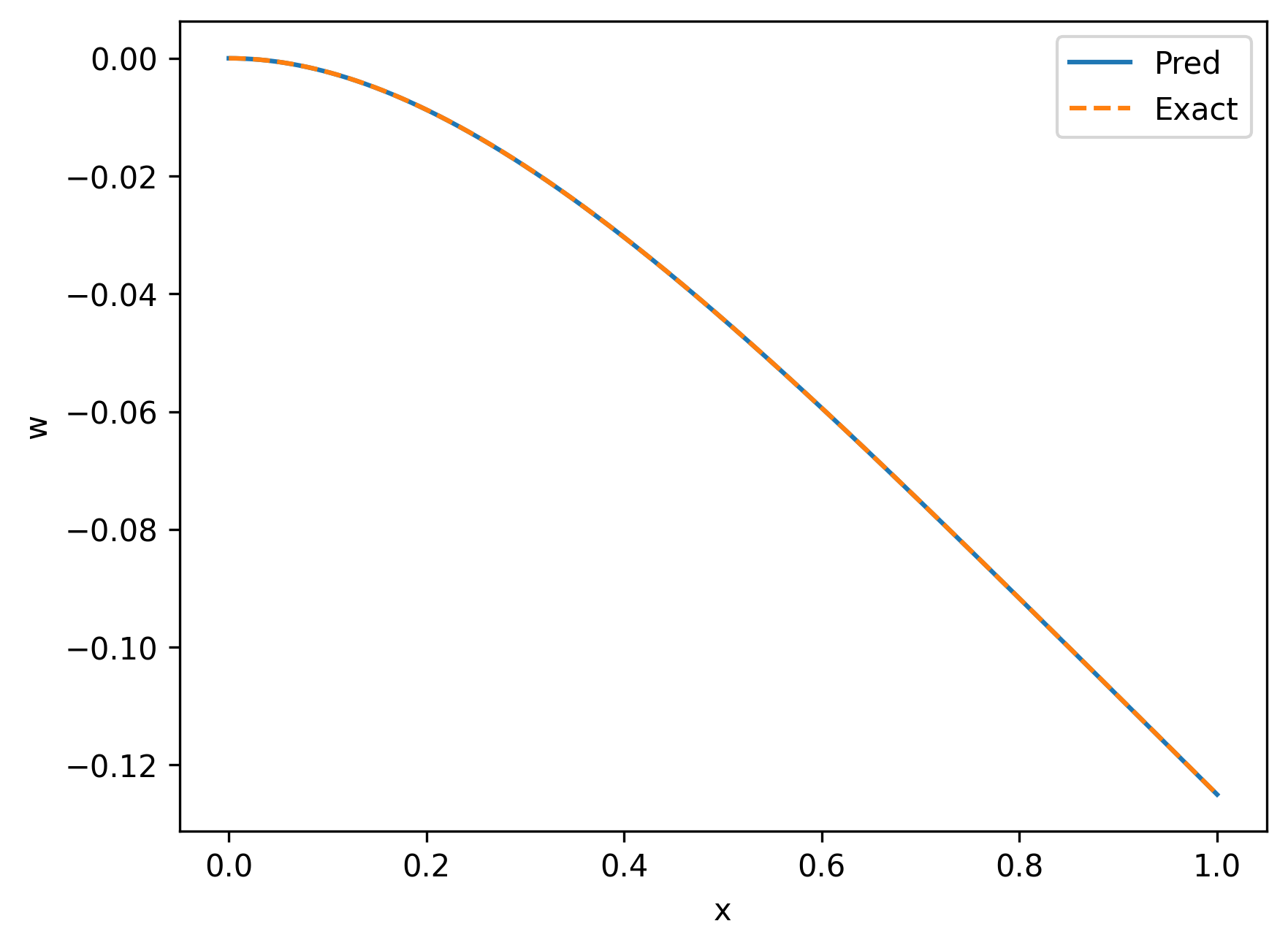

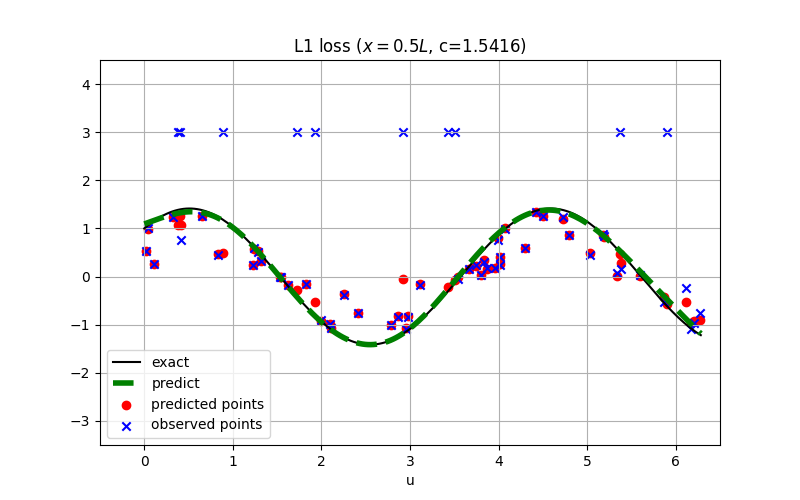

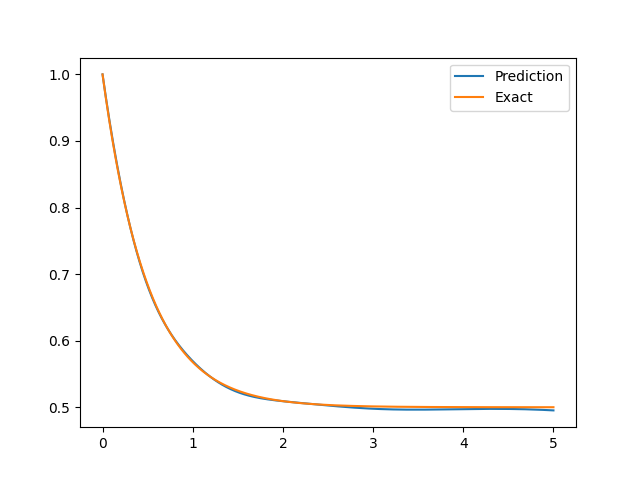

The result is shown as follows.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

See `examples/euler_beam`.

|

||||||

|

|

@ -0,0 +1,58 @@

|

||||||

|

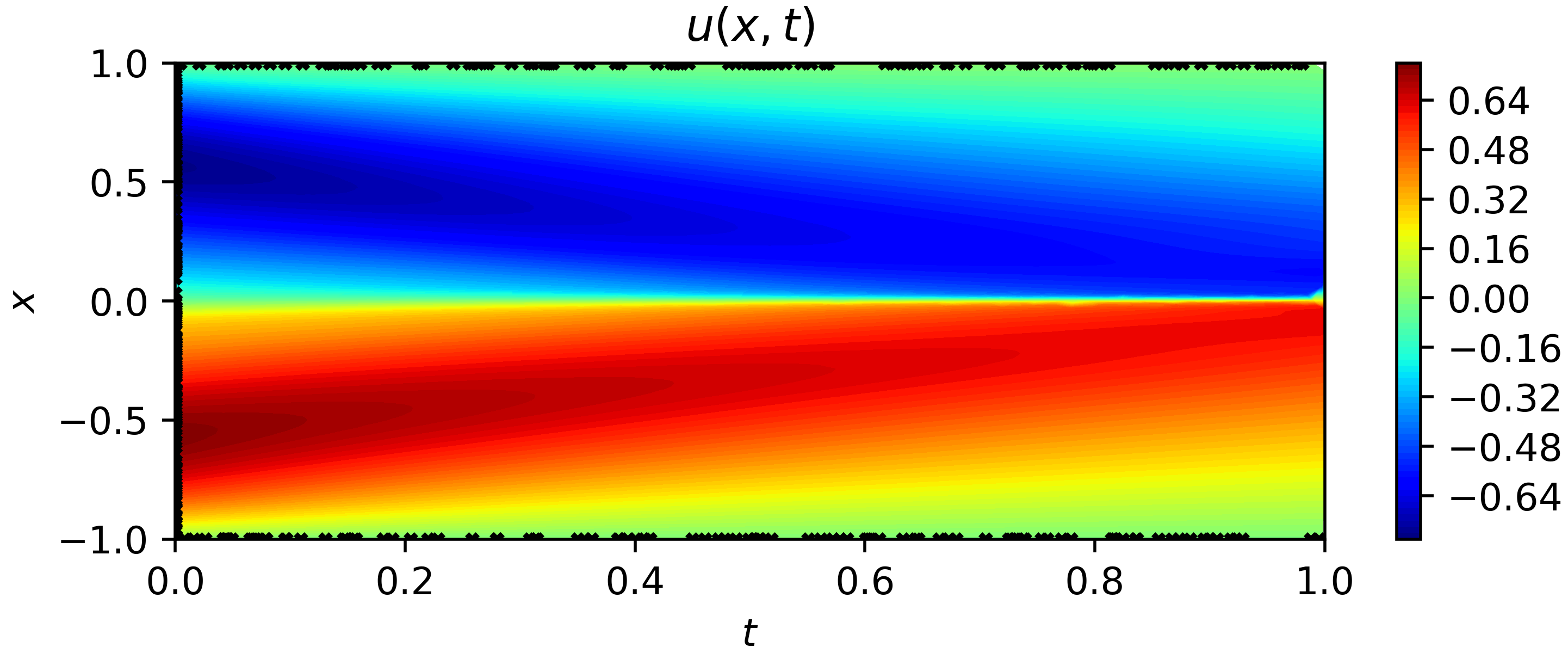

# Burgers' Equation

|

||||||

|

Burgers' equation is formulated as follows:

|

||||||

|

|

||||||

|

$$

|

||||||

|

\begin{equation}

|

||||||

|

\frac{\partial u}{\partial t}+u \frac{\partial u}{\partial x}=\nu \frac{\partial^{2} u}{\partial x^{2}}

|

||||||

|

\end{equation}

|

||||||

|

$$

|

||||||

|

We have added the template of the equation into `idrlnet.pde_op.equations`.

|

||||||

|